K3s vs K8s: The Best Kubernetes Home Lab Distribution

You may have seen Kubernetes referred to as both K3s and K8s. These are not the same thing, although both are certified Kubernetes. K8s is shorthand for the original “stock” upstream Kubernetes, and K3s is a lighter-weight distro that many are using for production and home labs. Which is the best home lab Kubernetes distribution? Let’s compare k3s vs k8s and see which one comes out on top.

k3s: a lightweight design

K3s is a unique Kubernetes distribution that can be run as a single tiny binary. This, in my opinion, has opened up a world of possibilities for the home lab environment, and it runs great on lightweight hardware that doesn’t have much horsepower.

This tiny binary reduces resource usage and dependencies, making it an excellent fit for edge devices with limited resources, remote locations, and home labs.

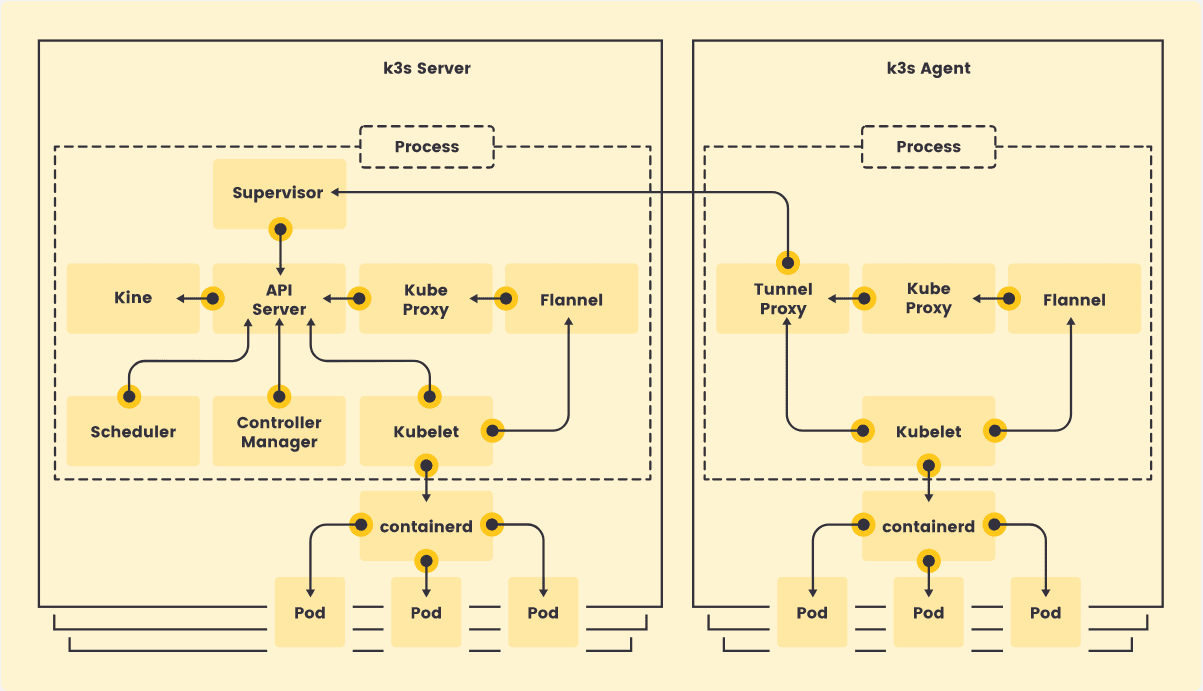

To achieve this streamlined architecture, k3s removed certain features and plugins not required in resource-constrained environments. It also combines the control plane components into a single process, providing a minimal attack surface.

Note the architecture overview of K3s below:

The resulting binary is significantly smaller than a full k8s install, so it consumes fewer resources and leaves more of them available for your workloads.

Understanding k8s

K8s, the upstream Kubernetes, is more feature-rich and suited for production environments, cloud provider integrations, and large-scale deployments. It supports the full range of Kubernetes APIs and services, including service discovery, load balancing, and complex applications.

While it may have a steeper learning curve than k3s, it provides advanced features and extensibility, making it ideal for large clusters and production workloads. And, honestly, with the right guides and tools like kubeadm, you can easily spin up a full K8s cluster running on your favorite Linux distro.

Its control plane operates as separate processes, providing more granular control and high availability. However, this also means it can be more resource-intensive than k3s, requiring more computing power, memory, and storage.

Resource consumption

When running Kubernetes in environments with limited resources, k3s shines. Its lightweight design and reduced resource usage make it ideal for single-node clusters, IoT devices, and edge devices, which also makes it a perfect candidate for home lab environments. K3s runs very well on Raspberry Pi devices, for example.

K3s is also an excellent choice for local development and continuous integration tasks due to its simplified setup and lower resource consumption.

While k8s has a more substantial resource footprint, it’s designed to handle beefier production workloads and cloud deployments, where resources are typically less constrained. It’s an ideal fit for complex applications that require the full Kubernetes ecosystem.

Ingress Controller, DNS, and Load Balancing in K3s and K8s

K3s comes with batteries included: Traefik as the default ingress controller, CoreDNS for cluster DNS, and a simple built-in service load balancer (ServiceLB). In contrast, upstream k8s does not ship an ingress controller or load balancer of its own, so you choose and install the ones you want, which offers greater flexibility for complex deployments.
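Because K3s ships Traefik, exposing a service usually only takes an Ingress object. Here is a minimal sketch that builds one as JSON, which `kubectl apply -f -` accepts. The names `whoami` and `whoami.lab.example` are hypothetical, and the ingress class name `traefik` is an assumption about a default K3s install, so check `kubectl get ingressclass` on your cluster.

```python
import json


def make_ingress(name: str, host: str, service: str, port: int,
                 ingress_class: str = "traefik") -> dict:
    """Build a networking.k8s.io/v1 Ingress that routes one host to one Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Assumption: the bundled Traefik registers an IngressClass named "traefik".
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {
                    "paths": [{
                        "path": "/",
                        "pathType": "Prefix",
                        "backend": {
                            "service": {"name": service, "port": {"number": port}}
                        },
                    }]
                },
            }],
        },
    }


# Hypothetical service and hostname, for illustration only.
manifest = make_ingress("whoami", "whoami.lab.example", "whoami", 80)
print(json.dumps(manifest, indent=2))
```

On upstream k8s the same manifest works once you have installed an ingress controller and pointed `ingressClassName` at it.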

Installation and Setup

K3s offers an easier installation process, needing only a single binary file, and it runs on ARM hardware such as the Raspberry Pis mentioned earlier. It uses containerd by default but can also work with an existing Docker installation. With fewer dependencies and a more straightforward configuration, there is less to set up on each server and worker node.

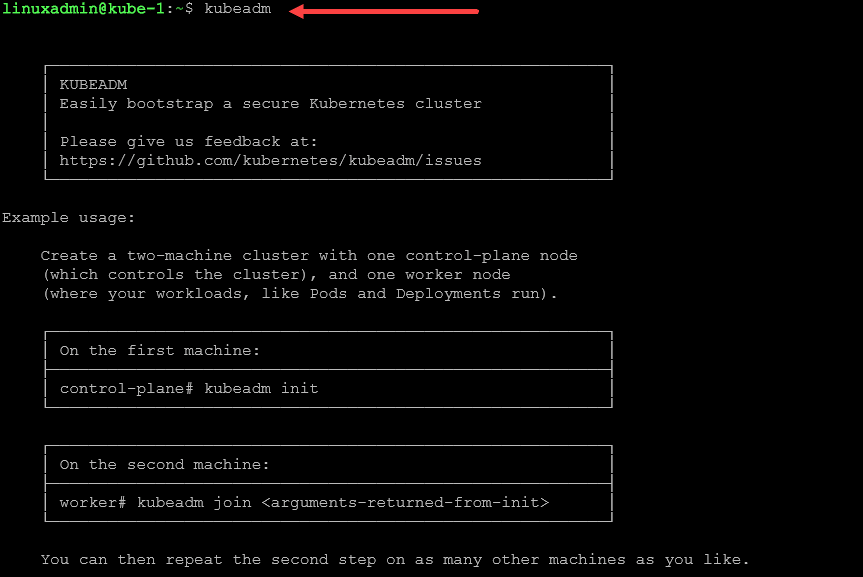

In comparison, setting up k8s can be more complex, especially for large clusters and virtual machines, as it provides more advanced features, control plane components, and cloud provider integrations.
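To give a feel for how small the K3s install is, here is a rough sketch that assembles the commands for the official install script at `https://get.k3s.io`. It only builds the command strings and does not run anything. The IP address and token are placeholders; on a real server the join token is found in `/var/lib/rancher/k3s/server/node-token`.

```python
import shlex

INSTALL_SCRIPT_URL = "https://get.k3s.io"


def server_install_command() -> str:
    """Command to run on the first node; it becomes the K3s server."""
    return f"curl -sfL {INSTALL_SCRIPT_URL} | sh -"


def agent_install_command(server_ip: str, token: str) -> str:
    """Command to run on each worker so it joins the server as an agent."""
    # K3S_URL and K3S_TOKEN tell the install script to set up an agent.
    url = shlex.quote(f"https://{server_ip}:6443")
    return (
        f"curl -sfL {INSTALL_SCRIPT_URL} | "
        f"K3S_URL={url} K3S_TOKEN={shlex.quote(token)} sh -"
    )


# Placeholder values, for illustration only.
print(server_install_command())
print(agent_install_command("192.168.1.10", "abc123"))
```

A kubeadm-based k8s install needs a container runtime, kubeadm, kubelet, and a CNI plugin in place before you get to the equivalent point.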

I want to specifically call out a few projects and tools that I have used in the home lab that allow you to install k3s very easily, and these are:

K3D

k3sup

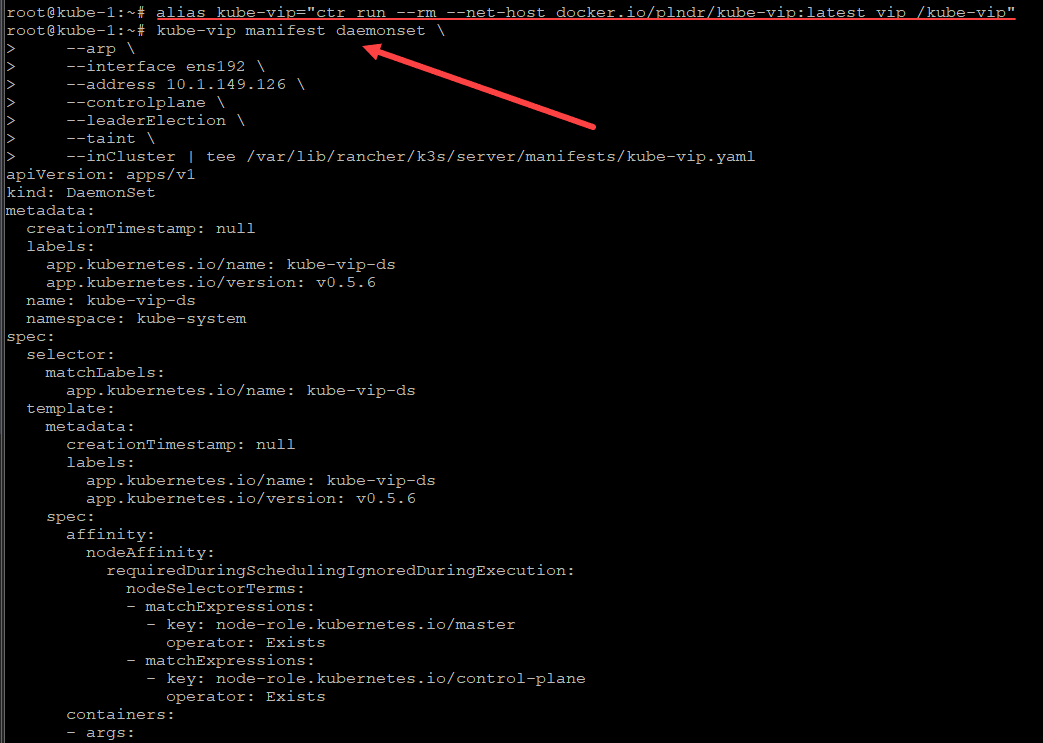

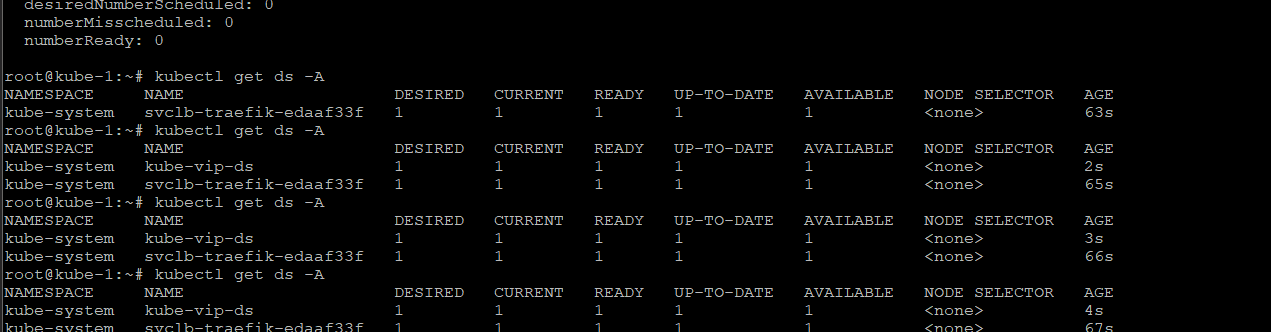

kube-vip

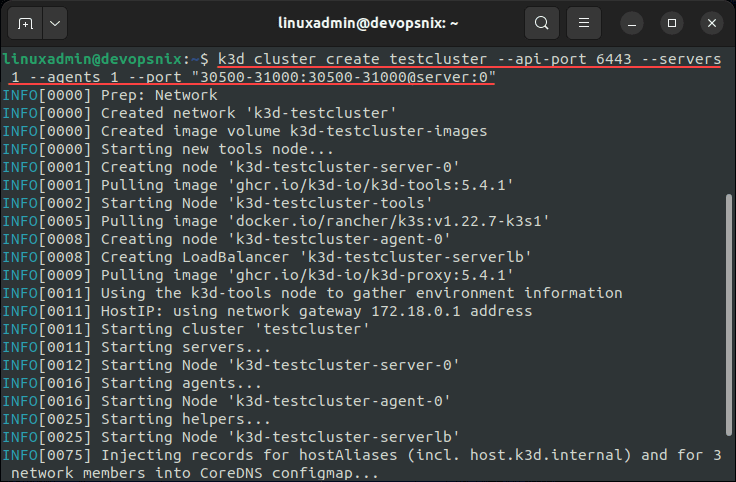

Below is a screenshot of spinning up a new k3s cluster using K3D. You can read my blog post covering the topic here: Install K3s on Ubuntu with K3D in Docker.

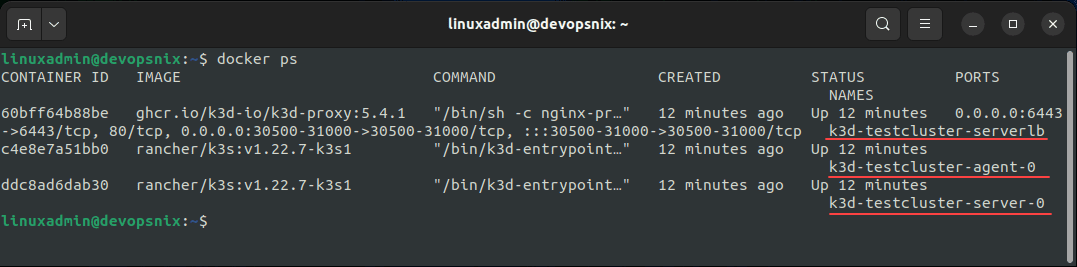
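If you want to script this step, a small wrapper around `k3d cluster create` might look like the sketch below. The cluster name `homelab` and the node counts are hypothetical, and `create_cluster` assumes that `k3d` and Docker are already installed.

```python
import shutil
import subprocess
from typing import List


def k3d_create_args(name: str, servers: int = 1, agents: int = 2) -> List[str]:
    """Arguments for `k3d cluster create` with the given node counts."""
    return [
        "k3d", "cluster", "create", name,
        "--servers", str(servers),
        "--agents", str(agents),
    ]


def create_cluster(name: str, servers: int = 1, agents: int = 2) -> None:
    """Run k3d to create the cluster; raises if k3d is missing or fails."""
    if shutil.which("k3d") is None:
        raise RuntimeError("k3d was not found on PATH")
    subprocess.run(k3d_create_args(name, servers, agents), check=True)


# Show the command without running it.
print(" ".join(k3d_create_args("homelab")))
```

Calling `create_cluster("homelab")` would then start one server and two agents as Docker containers.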

Viewing the k3s nodes running in Docker containers.

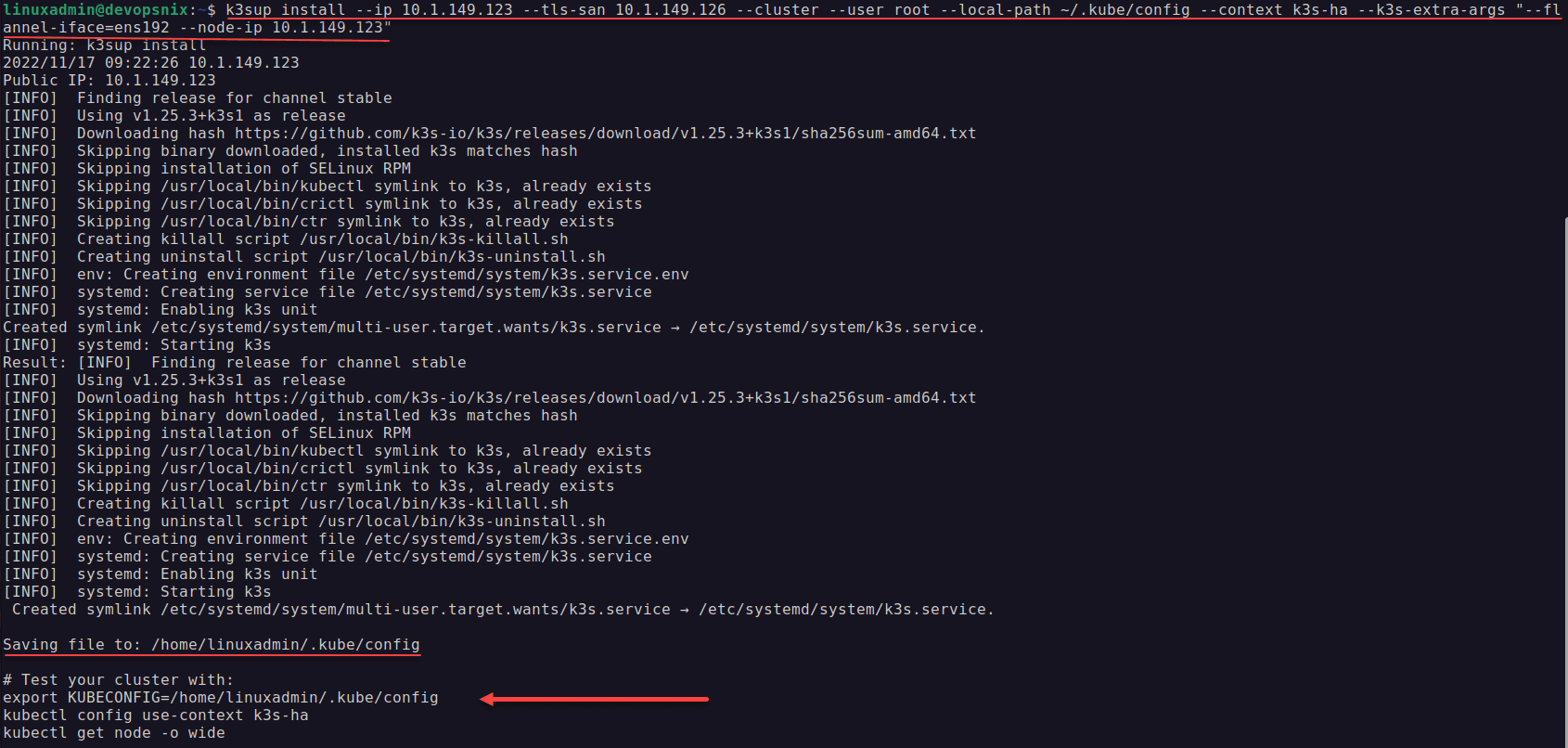
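You can run the same check from a script by parsing the output of `kubectl get nodes -o json`. The sketch below reads each node's `Ready` condition. The sample data is made up for illustration, with node names following the pattern k3d uses.

```python
import json
from typing import Dict


def node_readiness(nodes_json: str) -> Dict[str, bool]:
    """Map each node name to True when its Ready condition is "True"."""
    result = {}
    for item in json.loads(nodes_json).get("items", []):
        conditions = item.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        result[item["metadata"]["name"]] = ready
    return result


# Made-up sample in the shape returned by `kubectl get nodes -o json`.
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "k3d-homelab-server-0"},
         "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
        {"metadata": {"name": "k3d-homelab-agent-0"},
         "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
    ]
})

for name, ready in node_readiness(SAMPLE).items():
    print(f"{name}: {'Ready' if ready else 'NotReady'}")
```

Since both k3s and k8s expose the same Kubernetes API, this works unchanged against either one.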

K3sup is another awesome project you can use in conjunction with K3s. Check out my blog post covering this here: K3sup – automated K3s Kubernetes cluster install.

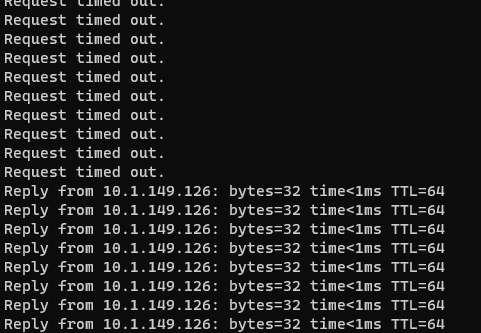

You can use kube-vip in conjunction with K3sup to have a highly available Kubernetes API. Check out the project here: Documentation | kube-vip.

Also, as mentioned earlier, you can use kubeadm to spin up a full k8s cluster, which isn’t too difficult either. All in all, though, you will find more community projects built around k3s than around k8s for this kind of quick setup.

Kubespray is another awesome project that will take much of the heavy lifting out of creating your Kubernetes cluster. It uses Ansible to deploy your cluster. Check out my write up here: Kubespray: Automated Kubernetes Home Lab Setup.

High Availability

High availability is one of the reasons to run a Kubernetes distribution. K8s shines here, with native support for complex, high-availability configurations. It provides advanced features like load balancing, distributed databases, and service discovery, making it a great choice for production workloads in the cloud and large-scale deployments.

However, k3s takes a simpler approach. A single-server install uses SQLite by default, while high availability is supported through embedded etcd or an external datastore. Its goal is still to reduce resource usage by minimizing control plane components and focusing on resource-constrained environments.

This makes it ideal for edge computing, IoT devices, and single-node clusters where high availability is not the primary focus. Again, the small footprint and simpler implementation make it a great solution for home labs. There are also projects like kube-vip that provide an easy way to spin up HA for your API.
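If you do build an HA control plane on an etcd-style datastore (which both k8s and K3s with embedded etcd can use), the number of server nodes matters, because the cluster needs a majority of members to stay up. A quick sketch of that arithmetic:

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with the given number of members."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can be lost while a majority still remains."""
    return members - quorum(members)


for n in range(1, 6):
    print(f"{n} server(s): quorum={quorum(n)}, tolerates {failures_tolerated(n)} failure(s)")
# 1 server(s): quorum=1, tolerates 0 failure(s)
# 2 server(s): quorum=2, tolerates 0 failure(s)
# 3 server(s): quorum=2, tolerates 1 failure(s)
# 4 server(s): quorum=3, tolerates 1 failure(s)
# 5 server(s): quorum=3, tolerates 2 failure(s)
```

This is why three servers is the usual starting point for HA in a home lab: two servers tolerate no more failures than one, and four tolerate no more than three.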

Scalability

K8s is designed for large-scale deployments. Of the two, it handles growth more efficiently, scaling up to support thousands of nodes and complex applications.

It’s the go-to for cloud deployments and production environments that require robust scalability features.

K3s, on the other hand, is more suited to smaller-scale, resource-constrained environments. However, it can still scale to support Kubernetes clusters with hundreds of nodes, making it a viable option for robust Kubernetes use cases.

Ease of Use

The learning curve for k8s can be steep. With its rich features and advanced configurations, k8s requires a deep understanding of the Kubernetes ecosystem and its components to take full advantage of all that it offers. The upstream Kubernetes provides all the flexibility and complexity inherent in the original Kubernetes project.

K3s, as a lightweight Kubernetes distribution, simplifies the running of Kubernetes clusters. Its single binary file installation process and reduced dependencies make it an excellent choice for local development, continuous integration, and scenarios where a streamlined, easy-to-use Kubernetes solution is desired.

Ecosystem and Community

The Kubernetes ecosystem and community are significant factors when choosing a Kubernetes distribution. K8s, being the original Kubernetes project, has a vast ecosystem of extensions, plugins, and a large, active community.

It’s backed by the Cloud Native Computing Foundation and supported by multiple cloud providers, making it a rich resource for developers and operators.

K3s, while newer and smaller, has a growing ecosystem and community. As a certified Kubernetes distribution, it’s gaining recognition for its efficient use of resources and suitability for edge computing.

It may not have the vast resources of k8s, but its niche appeal and growing popularity provide a strong support network for users.

Which is right for Home Labs?

K8s is known for its rich features and high availability. So, it is an excellent choice if you’re looking to run what many are running in a full production environment. The Cloud Native Computing Foundation backs it, and it offers robust support for complex deployments. However, running Kubernetes with k8s requires a few more resources and can have a steeper learning curve, with more moving parts.

On the other hand, k3s is a lightweight Kubernetes distribution designed to be fast and efficient. This makes it well-suited to environments with fewer resources or edge environments. It’s simpler than k8s, needs fewer resources, and installs from a single binary.

With k3s, you can run Kubernetes on lightweight hardware, including environments like old laptops, virtual machines, or even Raspberry Pi. Plus, k3s is a certified Kubernetes distribution, so you’ll still be working with a version of Kubernetes that adheres to standards set by the Cloud Native Computing Foundation.

For me, k3s wins the battle for the home lab since it is easier to spin up and there are many community projects that you can use with it, like K3D, k3sup, kube-vip, and others.

Wrapping up k3s vs k8s

Whichever way you go, k3s or full-blown K8s, you will learn Kubernetes and be able to run highly available, self-hosted containers in your home lab or in production. K3s definitely has some advantages in the home lab. Why not use and learn both?