Advantages of Running Kubernetes (K8S) on VMware vSphere

Today you would have to have your head in the sand not to have heard about containers and Kubernetes. Containers have been a hot topic in virtualization for the past couple of years, and even before then. Kubernetes has emerged as the de facto solution for container orchestration and management, and many organizations are looking at it to orchestrate their containers. We have been using hypervisors for a decade and longer, so do containers change all of that? Many have heard, or believe, that containers solve all high availability problems, even to the point of shifting workloads back to bare metal. A really great session took place at VMworld 2018 in Barcelona this week, entitled “Deep Dive: The Value of Running Kubernetes on vSphere” (CNA1553BE). It was presented by Frank Denneman and Michael Gasch and is an extremely valuable presentation for understanding the advantages of running Kubernetes (K8S) on VMware vSphere.

History of Kubernetes and Its Origin

Kubernetes primer – Kubernetes has its origins in Google’s efforts to solve the problems of running distributed, cloud-native applications while growing and operating at scale. No physical hardware could solve the problems Google was up against, so Google decided to put the intelligence into the software layer. Teams across Google all had to solve the problems of distributed computing at massive scale, and abstraction layers let them stop worrying about the lower levels of the infrastructure. Around 2003, Google built a system called Borg as an abstraction and management layer that allowed nodes to be managed and operated at scale as a single entity. Over time, Google ran into other issues with running multiple applications on the same operating system, such as noisy neighbor problems. Cgroups (control groups), a Linux kernel feature for limiting and isolating the resources used by groups of processes, came out of work on these problems. In 2013, Docker (the company) open sourced the tooling that eventually became Docker containers.

Kubernetes, developed and open sourced by Google, is the orchestrator for containers: an open-source system for automating the deployment, scaling, and management of containerized applications. A Kubernetes cluster is made up of two components: a control plane (master) and the workers, which process the workloads. All interaction with Kubernetes happens via an API, which is one of the reasons it has been so successful. Kubernetes workers typically run Docker containers, and Kubernetes manages those containers in a construct called a Pod, a group of one or more containers that are scheduled together onto the same worker. Kubernetes needs compute, storage, and networking resources.

Advantages of Running Kubernetes (K8S) on VMware vSphere

When thinking about running containers on vSphere, many assume bare metal is preferable, since containers have built-in mechanisms for handling application availability. If applications are fault tolerant, we don’t need DRS, HA, etc., right? So many believe there is reduced value in running Kubernetes on vSphere or any other hypervisor. Additionally, some view virtualization as an unnecessary layer that reduces performance and increases complexity and cost.

If you think about running Kubernetes on top of physical infrastructure, we need to take a step back and look at the assumptions made when saying Kubernetes will be run on bare metal. In the presentation, Frank Denneman made some great points for thought in this comparison. In essence, you are saying that you want to go back to the time before vMotion. You are saying that you want to manage physical hardware. How do you patch your bare metal servers? How do you make sure your workloads are available? Unless you rely on a cloud provider’s availability zones, you have to build availability into your Kubernetes cluster yourself. All of these things are sacrificed when you eliminate the vSphere hypervisor.

There are complexities as well when it comes to the expertise required to run open source software on bare metal. Skill sets and “brain drain” are real problems with open source software, since it is hard to retain the skilled people who provisioned the Kubernetes cluster in the first place.

Consider also the time it takes to provision new physical hardware: getting hardware into the datacenter is an unpredictable process, which makes it extremely difficult to provide a consistent service to your customers. Depending on the environment, getting hardware can take varying amounts of time. As mentioned in the presentation, in the US it takes an average of 86 days to provision hardware! If you go bare metal, you have to pick your hardware and decide which servers to buy. What about drivers? Will they work with your Kubernetes cluster?

By comparison, when running on top of vSphere, VMware effectively provides a virtual HCL (hardware compatibility list): the Kubernetes cluster sees consistent virtual hardware that is abstracted from the underlying physical servers. This solves a wide range of problems. To move a workload in a bare metal environment, you have to kill it and then bring it up somewhere else. With VMware, vMotion lets you simply live migrate the workload wherever you want.

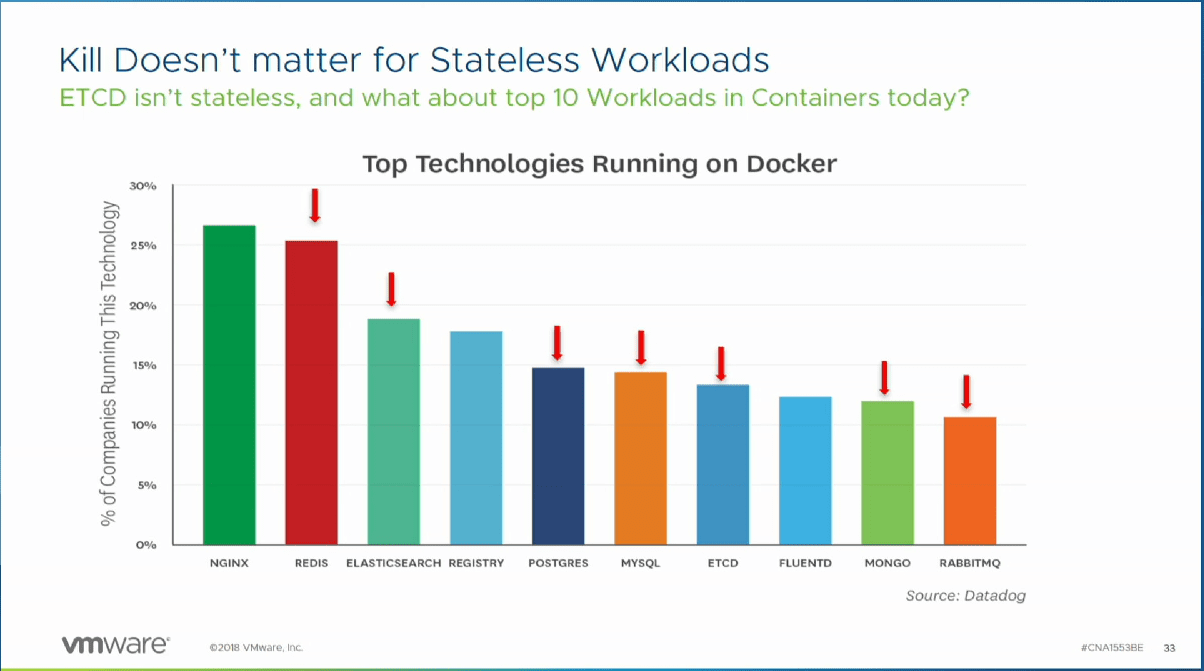

What about storage? Many think of containers as purely stateless applications, yet a recent study says that 7 out of 10 containers are stateful. What about security boundaries? Since containers share the kernel of their host, they can raise security concerns, especially in multi-tenant environments. Virtual machines provide a strong security isolation boundary that can be used to isolate containers.

When thinking about performance: in the old days of bare metal (pre-virtualization), host information was used to tune the runtime for best performance. For containers on bare metal, this means the container process is tied to the physical host, and any resources it does not use are wasted. Kubernetes uses a standard container format, so you can start packing containers onto the physical host, with the kubelet and the container runtime running directly on top of the bare metal server. Kubernetes distinguishes between node capacity and node allocatable: allocatable is what remains for pods after a buffer is reserved for processes that do not run as pods, such as the operating system and the Kubernetes daemons. Kubernetes is not aware of hyperthreads, so performance starts to degrade once the physical cores are exhausted.
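To make the capacity versus allocatable distinction concrete, here is a minimal Python sketch of the arithmetic Kubernetes documents for node allocatable: capacity minus kube-reserved, system-reserved, and the hard eviction threshold. The `NodeResources` type, the function names, and all reservation values are hypothetical and chosen only for illustration, and applying the eviction threshold to memory only is a simplification. It also shows the hyperthreading point: node capacity counts logical CPUs, so each hyperthread is reported as a full CPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeResources:
    cpu_millicores: int  # 1000m = one logical CPU as Kubernetes counts it
    memory_mib: int


def cpu_capacity_millicores(physical_cores: int, threads_per_core: int) -> int:
    # Node capacity counts logical CPUs, so each hyperthread shows up as a full CPU.
    return physical_cores * threads_per_core * 1000


def allocatable(
    capacity: NodeResources,
    kube_reserved: NodeResources,
    system_reserved: NodeResources,
    eviction_threshold_mib: int,
) -> NodeResources:
    """Allocatable = capacity - kube-reserved - system-reserved - hard eviction threshold.

    In this sketch the eviction threshold is subtracted from memory only.
    """
    cpu = capacity.cpu_millicores - kube_reserved.cpu_millicores - system_reserved.cpu_millicores
    memory = (
        capacity.memory_mib
        - kube_reserved.memory_mib
        - system_reserved.memory_mib
        - eviction_threshold_mib
    )
    if cpu < 0 or memory < 0:
        raise ValueError("reservations exceed node capacity")
    return NodeResources(cpu, memory)


# Hypothetical 16-core, hyperthreaded bare metal worker with 64 GiB of RAM.
capacity = NodeResources(cpu_capacity_millicores(16, 2), 64 * 1024)
node = allocatable(
    capacity,
    kube_reserved=NodeResources(500, 1024),
    system_reserved=NodeResources(500, 1024),
    eviction_threshold_mib=100,
)
print(node)  # NodeResources(cpu_millicores=31000, memory_mib=63388)
```

Note that the scheduler sees 31 CPUs of allocatable capacity on a host with only 16 physical cores, which is the hyperthreading blind spot described above.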

Some points to consider with bare metal:

- Not all runtimes are cgroup-aware!

- How to tune per workload

- Resource contention and workload interference

- How much to reserve vs. waste?

- Security isolation?

How can vSphere help with the above questions? VMware has a smart accounting system for when a process runs on a hyperthread; in other words, vSphere understands the difference between hyperthreads and physical cores. What about the VMware patch for the L1 Terminal Fault (L1TF) vulnerability? One thing to understand is that the VMware patch doesn’t simply disable hyperthreading. Instead, VMware makes sure that processes don’t run on both hyperthreads of a core at the same time, which is better than disabling hyperthreading altogether.

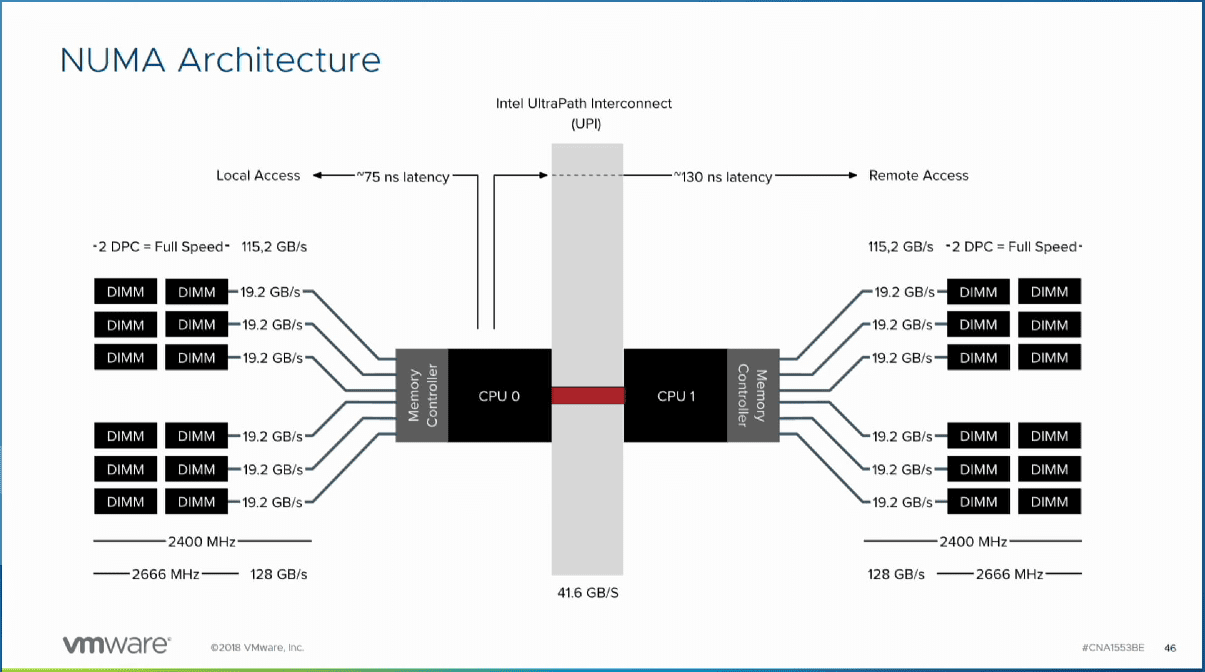

NUMA is also a consideration for workloads. 96% of customers use a 2-socket system for running ESXi, which means there are two NUMA nodes, each with its own memory controller. Latency increases when memory is fetched through the other socket’s memory controller. The same constructs apply to containers: when sizing, try to keep the footprint of each VM/worker node small enough to reside within a single NUMA node. VMware vSphere helps to slice up the hosts into workers and performs the resource management that you would otherwise have to perform manually on bare metal.
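The sizing guidance above can be sketched as a simple fit check. This minimal Python example assumes a hypothetical 2-socket host with one NUMA node per socket and memory split evenly between the nodes; it counts physical cores only and ignores ESXi memory overhead and overcommit, so it is a rough illustration rather than a sizing tool. The `Host` type and function names are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    sockets: int           # assume one NUMA node per socket
    cores_per_socket: int  # physical cores, hyperthreads not counted
    memory_gib: int        # assume memory is split evenly across NUMA nodes

    @property
    def memory_per_node_gib(self) -> float:
        return self.memory_gib / self.sockets


def fits_in_numa_node(host: Host, vcpus: int, memory_gib: int) -> bool:
    """True if a worker VM's vCPUs and memory both fit inside one NUMA node."""
    return vcpus <= host.cores_per_socket and memory_gib <= host.memory_per_node_gib


def numa_local_workers(host: Host, vcpus: int, memory_gib: int) -> int:
    """How many identical NUMA-local worker VMs the host can hold (no overcommit, no overhead)."""
    if not fits_in_numa_node(host, vcpus, memory_gib):
        return 0
    per_node = min(host.cores_per_socket // vcpus, int(host.memory_per_node_gib // memory_gib))
    return per_node * host.sockets


# Hypothetical 2-socket host: 16 cores and 128 GiB per NUMA node.
host = Host(sockets=2, cores_per_socket=16, memory_gib=256)
print(numa_local_workers(host, vcpus=8, memory_gib=64))   # 4
print(numa_local_workers(host, vcpus=20, memory_gib=64))  # 0: a 20-vCPU worker spans both nodes
```

Returning 0 for a worker that cannot fit in one node is a deliberate choice here: such a VM would have to span NUMA nodes and pay the remote memory latency penalty.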

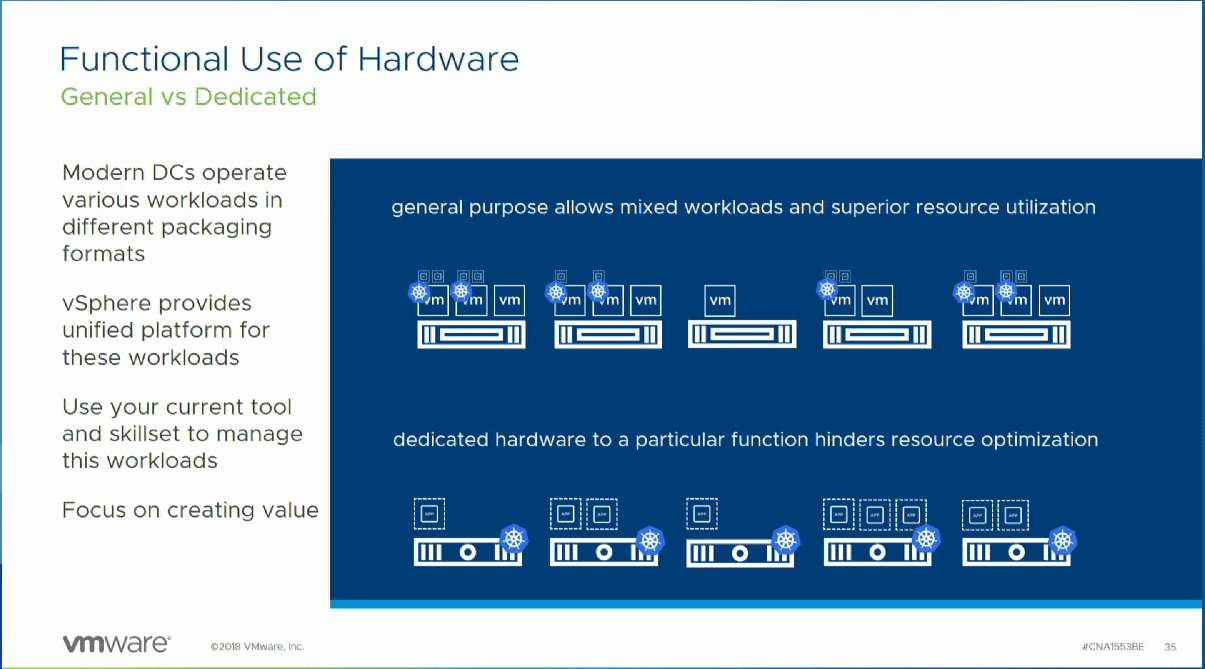

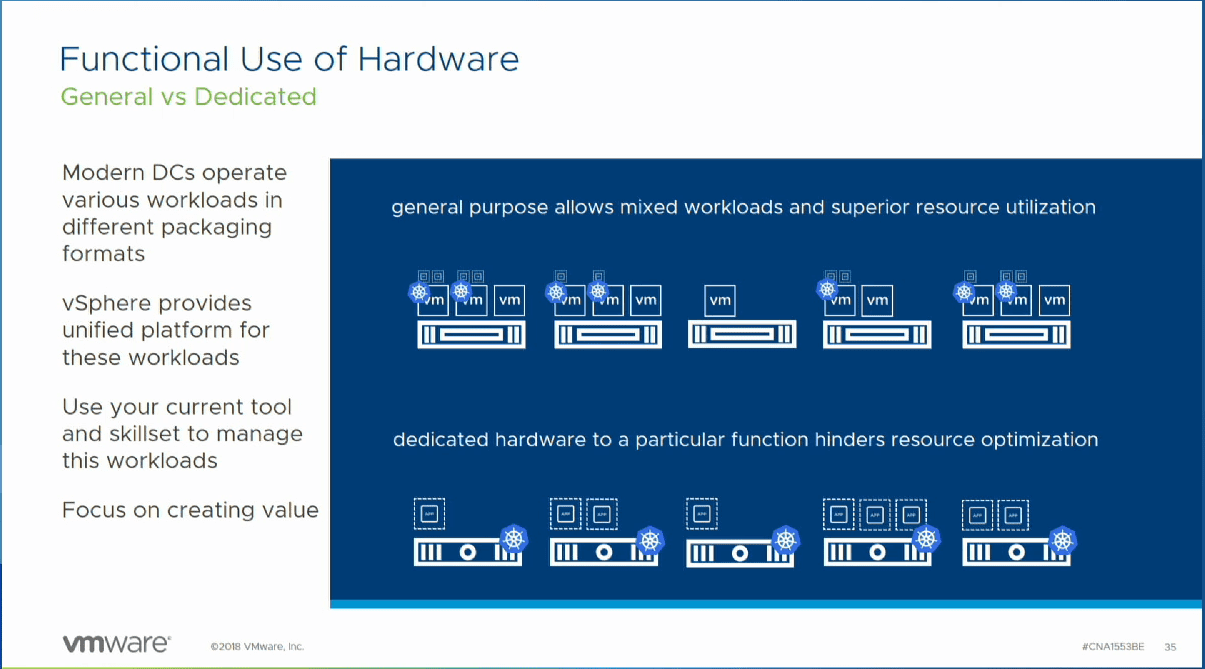

Day 2 challenges include container and cluster sprawl. With Kubernetes, success with the platform means growth, and that growth has to be managed. There may be separate Kubernetes clusters for various reasons: prod, dev, test, multiple development teams, etc. So on bare metal it isn’t a single cluster you have to manage, but multiple clusters. This all translates into imbalanced resource usage, inconsistent approaches, wasted resources, and so on.

So why run Kubernetes on VMware?

You no doubt already have a vSphere environment running Windows, Linux, and other VMs, and multiple Kubernetes clusters can be provisioned alongside them. With the DRS mechanism alone, VMware vSphere automatically manages resources across the physical hosts in the vSphere cluster.

Day 3: Maintenance & Availability (Control Plane) – Kubernetes is a quorum-based system, so if one node fails in a 3-node control plane, the cluster stays up and running. With bare metal, however, host maintenance or the failure of a control plane node can lead to challenges. What about admission control and failover capacity (MTTR)? Proactive HA? vSphere solves all of these challenges when running Kubernetes, whereas on bare metal you would have to handle these mechanisms manually or write them yourself.
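The quorum arithmetic behind the 3-node example is worth spelling out, because it shows why maintenance on bare metal is risky. etcd, the Kubernetes backing store, needs a strict majority of its members to keep working. The minimal Python sketch below computes that majority; the function names are illustrative only.

```python
def quorum(members: int) -> int:
    """Smallest strict majority of a cluster with `members` voting members."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be lost while a majority remains."""
    return members - quorum(members)


def has_quorum(members: int, unavailable: int) -> bool:
    """True if the remaining members still form a majority."""
    return members - unavailable >= quorum(members)


for n in (1, 3, 4, 5):
    print(n, quorum(n), tolerated_failures(n))
# 1 1 0
# 3 2 1
# 4 3 1
# 5 3 2

# A 3-node control plane with one host down for maintenance cannot survive another failure.
print(has_quorum(3, unavailable=1))  # True
print(has_quorum(3, unavailable=2))  # False
```

A 4-node control plane tolerates no more failures than a 3-node one, which is why odd member counts are the usual choice.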

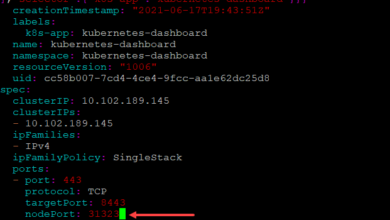

Worker nodes – with bare metal, it takes Kubernetes 5 minutes by default to act on a node failure. What about persistent volumes that may be mounted to the container? After 6 minutes, Kubernetes will detach the volume from the dead node so that it can be mounted elsewhere. This detach timing is currently not configurable in Kubernetes.
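Taken together, these two defaults suggest a stateful pod on a failed bare metal worker could be down for roughly 11 minutes before it runs elsewhere. The sketch below only does that arithmetic. It assumes the two waits happen one after the other, which is an interpretation rather than something stated in the session, and the constant and function names are hypothetical.

```python
from datetime import timedelta

# Defaults as quoted in the session; treat both as assumptions about your cluster and version.
FAILURE_DETECTION = timedelta(minutes=5)   # before Kubernetes acts on a dead worker node
VOLUME_DETACH_WAIT = timedelta(minutes=6)  # before the volume is detached from the dead node


def stateful_recovery_estimate(
    detection: timedelta = FAILURE_DETECTION,
    detach_wait: timedelta = VOLUME_DETACH_WAIT,
    pod_start: timedelta = timedelta(0),
) -> timedelta:
    """Rough time until a pod with a persistent volume runs again on another node.

    Assumes the detach wait starts only after failure detection (a sequential reading).
    """
    return detection + detach_wait + pod_start


print(stateful_recovery_estimate())                                 # 0:11:00
print(stateful_recovery_estimate(pod_start=timedelta(seconds=30)))  # 0:11:30
```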

With VMware vSphere, HA restart priorities let you identify virtual machines and choose which nodes are restarted first. When the goal is to get resources back up and running quickly, and to make sure prod comes up before dev/test, this is powerful functionality.

With VMware vSphere you can create restart dependency groups so that the etcd nodes start first, then the Kubernetes control plane, and then the workers, making restart priorities as efficient as possible.
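The ordering that restart dependency groups express is a topological sort. This minimal Python sketch, using the standard library’s `graphlib` (Python 3.9+), groups hypothetical VM groups into restart waves so that each wave depends only on earlier ones. The group names are illustrative. This is not the vSphere API and not how vSphere HA is implemented; it only illustrates the etcd, control plane, workers ordering described above, with prod workers ahead of dev.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical restart dependency groups: each group lists the groups that must be up first.
RESTART_DEPENDENCIES = {
    "etcd": set(),
    "control-plane": {"etcd"},
    "workers-prod": {"control-plane"},
    "workers-dev": {"control-plane", "workers-prod"},  # prod comes up before dev/test
}


def restart_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group VMs into waves; every group in a wave depends only on earlier waves."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for wave, groups in enumerate(restart_waves(RESTART_DEPENDENCIES), start=1):
    print(wave, groups)
# 1 ['etcd']
# 2 ['control-plane']
# 3 ['workers-prod']
# 4 ['workers-dev']
```

Groups with no dependency between them land in the same wave and could be restarted in parallel.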

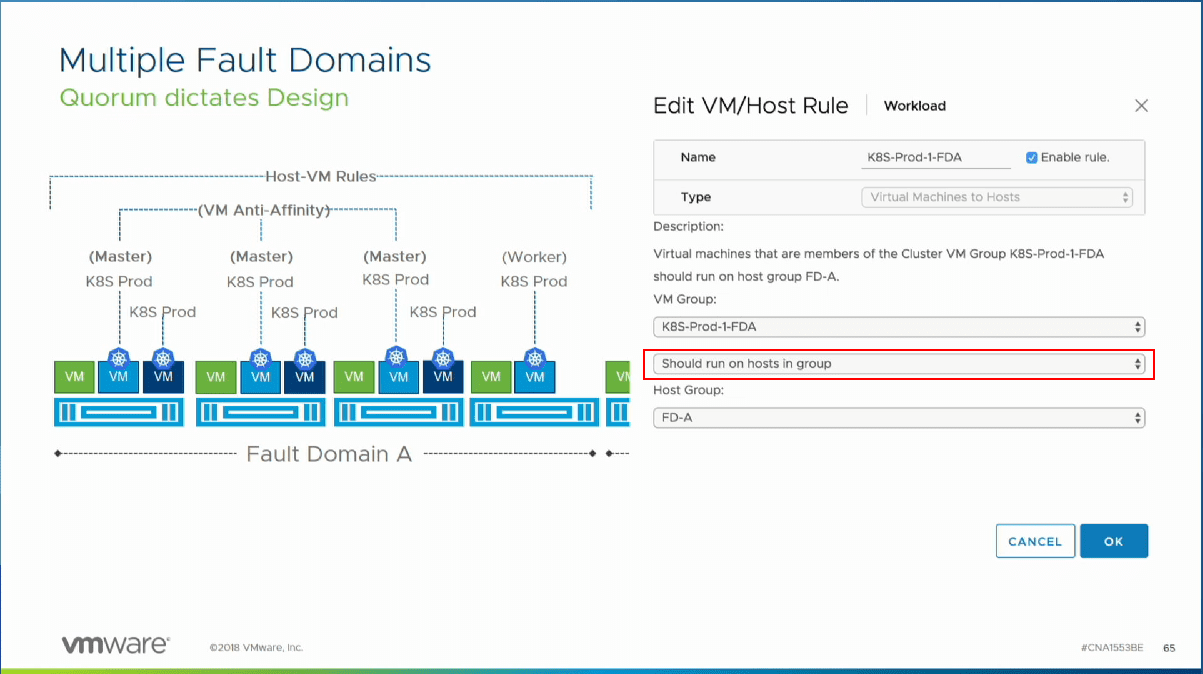

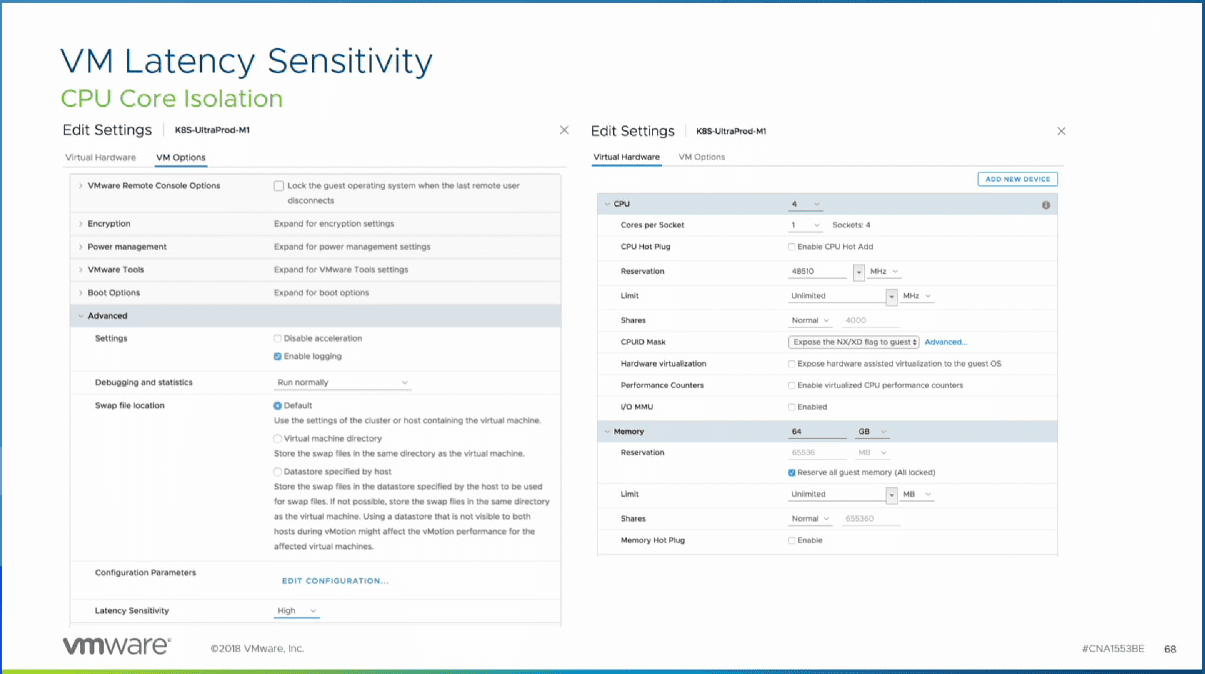

VM anti-affinity rules allow separating the K8S master and worker nodes onto different hosts. Because these are DRS rules, DRS takes care of correcting any rule violations. With multiple fault domains, VM/Host group rules let you keep the master nodes together in the same fault domain. Finally, the VM latency sensitivity setting can give a VM near bare metal CPU performance, which can be used to give container VMs maximum performance.
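What an anti-affinity rule asks for can be sketched as a simple placement check. This minimal Python example uses hypothetical VM and host names and only illustrates the meaning of a VM-VM anti-affinity rule; it is not how DRS evaluates or remediates rules internally.

```python
from collections import Counter


def anti_affinity_violations(placement: dict[str, str], rule_vms: set[str]) -> list[str]:
    """Return the hosts running more than one VM covered by the same anti-affinity rule."""
    per_host = Counter(host for vm, host in placement.items() if vm in rule_vms)
    return sorted(host for host, count in per_host.items() if count > 1)


# Hypothetical VM-to-host placement and a "keep the masters apart" rule.
masters = {"k8s-master-1", "k8s-master-2", "k8s-master-3"}
placement = {
    "k8s-master-1": "esx01",
    "k8s-master-2": "esx01",
    "k8s-master-3": "esx02",
    "k8s-worker-1": "esx01",
}
print(anti_affinity_violations(placement, masters))  # ['esx01']

# e.g. after DRS migrates k8s-master-2 to another host
placement["k8s-master-2"] = "esx03"
print(anti_affinity_violations(placement, masters))  # []
```

The worker on `esx01` is ignored because it is not covered by the rule; only VMs named in the rule count toward a violation.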

Takeaways

There is no question that container technology is here to stay, and it is gaining more and more momentum among developers and organizations running production applications at scale. Kubernetes has made orchestrating containers at scale achievable, allowing organizations to operate clusters of worker nodes and manage many containers as a single entity. Many have started to question whether a hypervisor is even needed to run containerized applications. However, there are many considerations when weighing bare metal against a hypervisor such as VMware vSphere. vSphere is a powerful platform with the necessary mechanisms built in: vMotion for easily moving nodes between physical hosts, DRS, HA failover, CPU scheduling that understands the difference between cores and hyperthreads, restart dependency groups for bringing VMs up in order, anti-affinity rules, and enforced security boundaries between containers by running them in VMs. The advantages of running Kubernetes (K8S) on VMware vSphere are many, and when comparing vSphere with bare metal, there are many reasons to choose to run K8S on top of VMware vSphere. Be sure to check out the session “Deep Dive: The Value of Running Kubernetes on vSphere” (CNA1553BE).