k0s vs k3s – Battle of the Tiny Kubernetes distros

Today, we compare k0s vs k3s, two lightweight Kubernetes distributions that can run Kubernetes across different environments and infrastructures, from the cloud to bare metal and edge computing. Anyone running a home lab will also be interested in how the two compare.

What is k0s?

k0s, developed by Mirantis, is a minimalist, CNCF-certified Kubernetes distribution. Designed to reduce complexity, it is packaged as a single binary. It has no host OS dependencies beyond the host OS kernel, which gives it an efficient, clean, and straightforward way to run Kubernetes.

k0s is a reliable choice for lightweight environments, edge computing locations, and offline development, mainly because of its low memory usage and modest resource requirements.

Beyond the core pieces a cluster needs to function, such as networking and DNS, this Kubernetes distribution ships no extras like an ingress controller or storage provisioner, leaving users free to choose and install the ones they need. The result is a simpler, more flexible, and easily manageable system for deploying containerized applications on Kubernetes clusters.

What is k3s?

k3s is a lightweight yet powerful Kubernetes distribution developed by Rancher Labs. Like k0s, it ships as a single binary, but k3s stands apart with a richer set of bundled features. It is designed for resource-constrained environments and is especially well suited to edge computing.

k3s includes an ingress controller, a service load balancer, and a local path provisioner for persistent storage right out of the box. These bundled components, together with its support for alternative container runtimes, make it a popular choice for deploying and managing containerized applications.

Furthermore, in single-server installations k3s replaces etcd, the default Kubernetes datastore, with a lightweight SQLite database, which reduces complexity and adds to its appeal. Embedded etcd and external databases are also supported. Overall, k3s is a robust, feature-rich, yet simplified Kubernetes distribution.
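To see what this looks like in practice, here is a minimal sketch based on the k3s install script's documented options. It assumes a fresh Linux host. The MySQL connection string is a placeholder, not a real endpoint.

```shell
# Default single-server install: cluster state is kept in SQLite rather than etcd.
curl -sfL https://get.k3s.io | sh -

# The SQLite database files live under the k3s server data directory
# (readable only by root, hence sudo).
sudo ls -l /var/lib/rancher/k3s/server/db/

# Alternative, on a fresh host: point k3s at an external datastore instead of SQLite.
# The connection string below is a placeholder; substitute your own database details.
curl -sfL https://get.k3s.io | sh -s - server \
  --datastore-endpoint="mysql://username:password@tcp(hostname:3306)/database-name"
```

Run only one of the two install commands on a given host. The second form is for setups where an existing MySQL, MariaDB, PostgreSQL, or etcd database should hold the cluster state.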

Both use the containerd container runtime

containerd is a high-performance container runtime that provides the robustness, performance, and extensibility needed to run Kubernetes clusters. It is a core component of Docker, but it can also be used independently of Docker, as k0s and k3s do.

Both distributions use containerd by default but also support other container runtimes. This flexibility lets users select the runtime that best fits their specific use case and requirements.

In k3s, the embedded containerd is the default, but Docker can be used as the container runtime instead. In current releases this goes through the bundled cri-dockerd. k3s can also run inside Docker containers using k3d, a separate project for running k3s in Docker.
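As a sketch, switching k3s from its embedded containerd to Docker takes a single flag on the install command. This assumes Docker Engine is already installed on the node.

```shell
# Assumes Docker Engine is already installed and running on this node.
# --docker makes k3s use Docker (via the bundled cri-dockerd) instead of containerd.
curl -sfL https://get.k3s.io | sh -s - --docker

# The CONTAINER-RUNTIME column shows which runtime each node is using.
sudo k3s kubectl get nodes -o wide
```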

In k0s, you can configure workers to use Docker (through cri-dockerd) or any other container runtime that implements the Container Runtime Interface (CRI).
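For k0s, the equivalent is the --cri-socket option on the worker. The sketch below makes three assumptions. Docker Engine and cri-dockerd are already installed. cri-dockerd listens on /var/run/cri-dockerd.sock, its usual path. /path/to/token.txt is a placeholder for your worker join token file.

```shell
# Assumes Docker Engine and cri-dockerd are installed on this worker,
# with cri-dockerd listening on /var/run/cri-dockerd.sock.
# /path/to/token.txt is a placeholder for the worker join token file.
sudo k0s install worker --token-file /path/to/token.txt \
  --cri-socket=remote:unix:///var/run/cri-dockerd.sock
sudo k0s start
```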

Defaulting to containerd in both k0s and k3s reflects the Kubernetes community's ongoing shift toward lightweight, flexible container runtimes.

Exploring k0s: Minimalist, Future-Proof Kubernetes Distribution

As a CNCF-certified Kubernetes distribution packaged into a single binary, k0s adheres closely to the upstream Kubernetes design. This simplifies running Kubernetes and makes it a good fit for lightweight environments.

k0s keeps its operating system footprint lean by relying only on the host OS kernel, so host OS dependencies stay minimal. Its single configuration file reduces developer friction and makes deployments easy to reproduce. k0s is also conservative with memory. This makes good use of limited resources and suits Kubernetes deployments in remote locations or offline development scenarios.
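If you want to review or customize that configuration file before installing, you can have k0s generate its defaults first. This is a minimal sketch that assumes the k0s binary is already on the host, for example from the get.k0s.sh script used below. It follows the /etc/k0s/k0s.yaml location used in the k0s documentation. Edit the file to suit your cluster before starting the service.

```shell
# Write the default cluster configuration to a file you can inspect and edit.
sudo mkdir -p /etc/k0s
k0s config create | sudo tee /etc/k0s/k0s.yaml > /dev/null

# Install a controller (that also runs workloads) using that configuration file.
sudo k0s install controller -c /etc/k0s/k0s.yaml --enable-worker
sudo k0s start
```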

Installing a k0s Kubernetes cluster

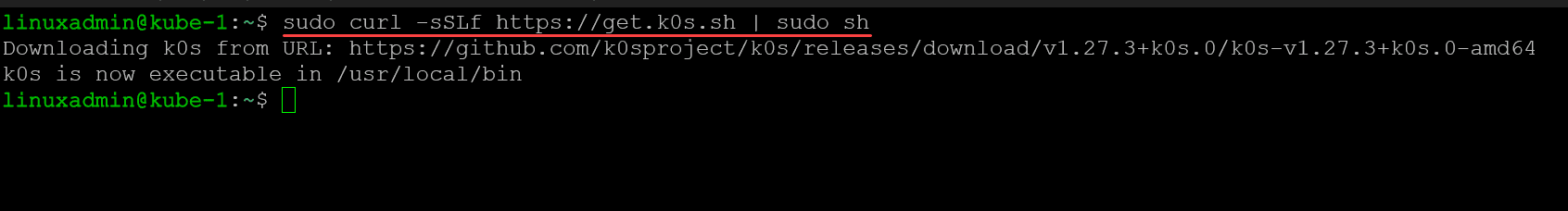

Installing and creating a k0s Kubernetes cluster is straightforward. First, download and run the install script, which pulls down the k0s binary:

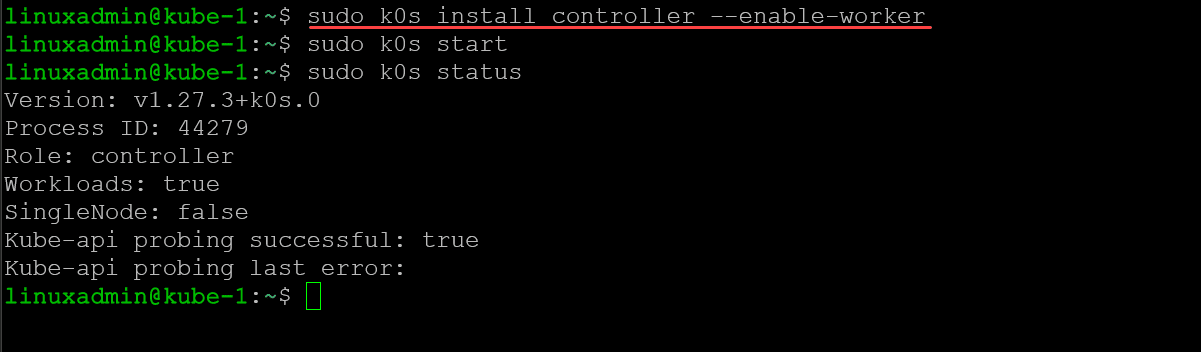

curl -sSLf https://get.k0s.sh | sudo sh

Next, install k0s as a controller that also acts as a worker:

sudo k0s install controller --enable-worker

We also need to start k0s. We can do that with:

sudo k0s start

Then we can see the status of the k0s node using the command:

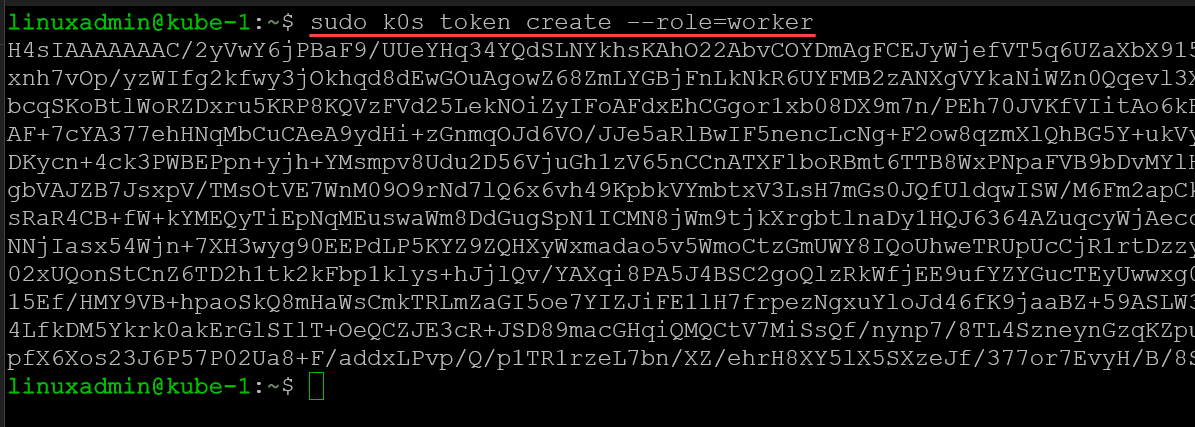

sudo k0s status

To create and display the worker join token, use the command:

sudo k0s token create --role=worker

Next, join your worker nodes, starting by installing k0s on each of them:

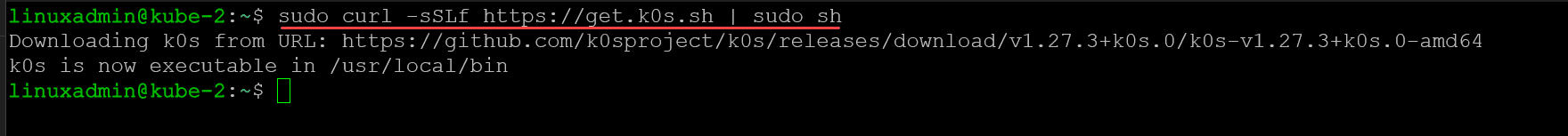

curl -sSLf https://get.k0s.sh | sudo sh

Next, on each worker, save the join token to a local file (token.txt in this example), then install k0s as a worker using that file:

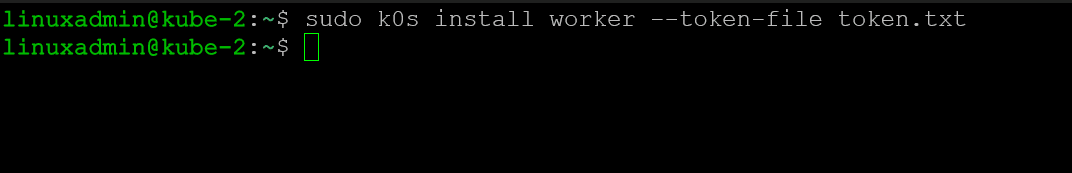

sudo k0s install worker --token-file token.txt

Make sure to start k0s on each of your worker nodes as well:

sudo k0s start

After you have run these commands on each worker you want to join, you can check the status of the k0s cluster from your controller node:

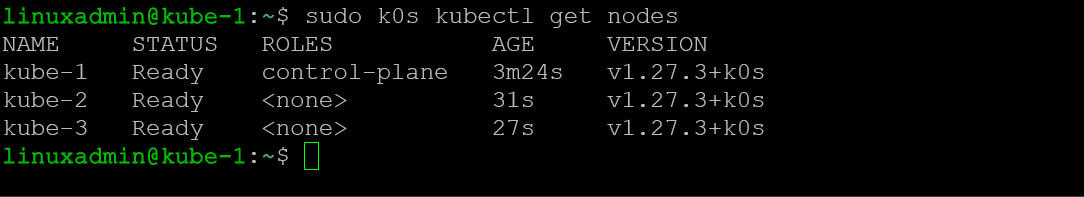

sudo k0s kubectl get nodes

Understanding k3s: The Lightweight Powerhouse from Rancher Labs

Rancher Labs brings us k3s, a Kubernetes distribution that is as lightweight as it is powerful. Because it is designed to run Kubernetes efficiently with minimal resources, it is well suited to edge computing scenarios.

Unlike k0s, k3s comes with an ingress controller (Traefik), a service load balancer (ServiceLB), and a local path provisioner for persistent storage right out of the box. These built-in features simplify the deployment and management of containerized applications. k3s can also swap its embedded containerd for Docker or another CRI-compatible runtime, which helps when existing tooling depends on a particular runtime.
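If you would rather bring your own ingress controller or load balancer, you can turn off the bundled components at install time. Here is a minimal sketch using k3s's --disable option:

```shell
# Skip the bundled Traefik ingress controller and the ServiceLB load balancer.
# Other packaged items that can be disabled include local-storage,
# metrics-server and coredns.
curl -sfL https://get.k3s.io | sh -s - --disable traefik --disable servicelb

# The same setting can live in /etc/rancher/k3s/config.yaml instead:
#   disable:
#     - traefik
#     - servicelb
```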

Installing k3s

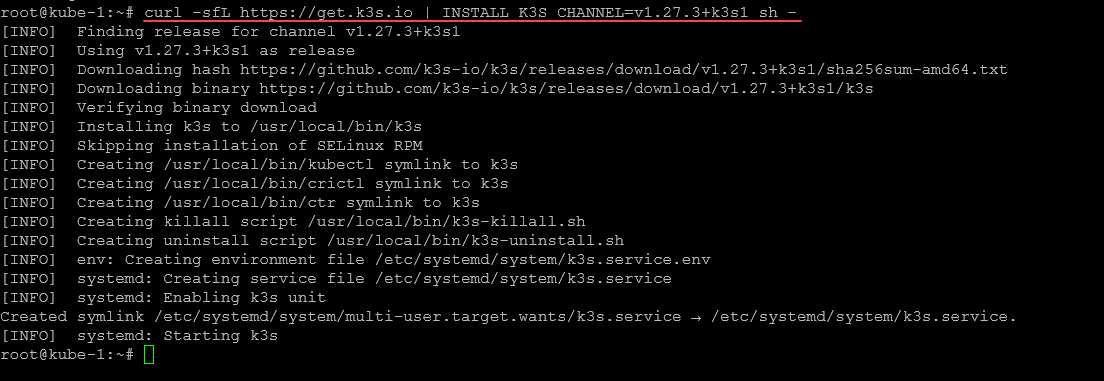

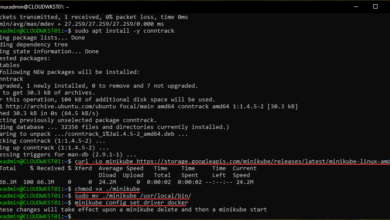

Installing k3s is simple. It is a single binary, fetched and set up by an install script. To download and run the installer, type:

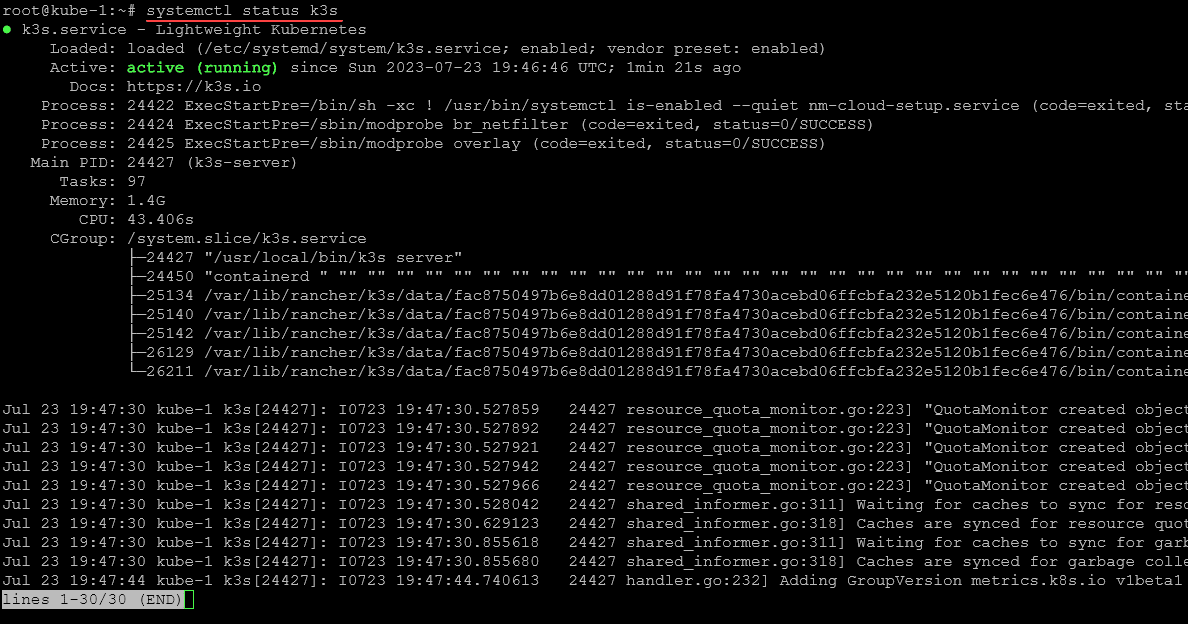

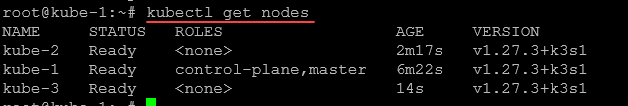

curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.27.3+k3s1 sh -

The command above sets up k3s as a systemd service. We can check the status of the service using:

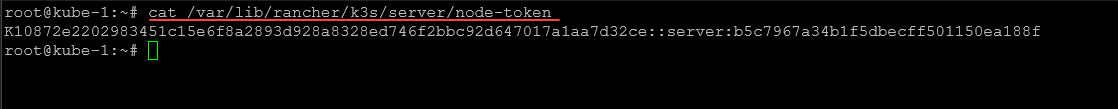

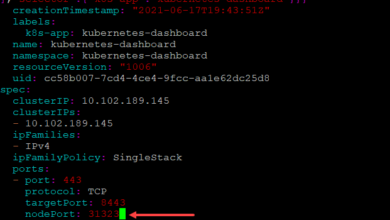

systemctl status k3s

To get the token you will need to join other k3s worker nodes, use the following:

sudo cat /var/lib/rancher/k3s/server/node-token

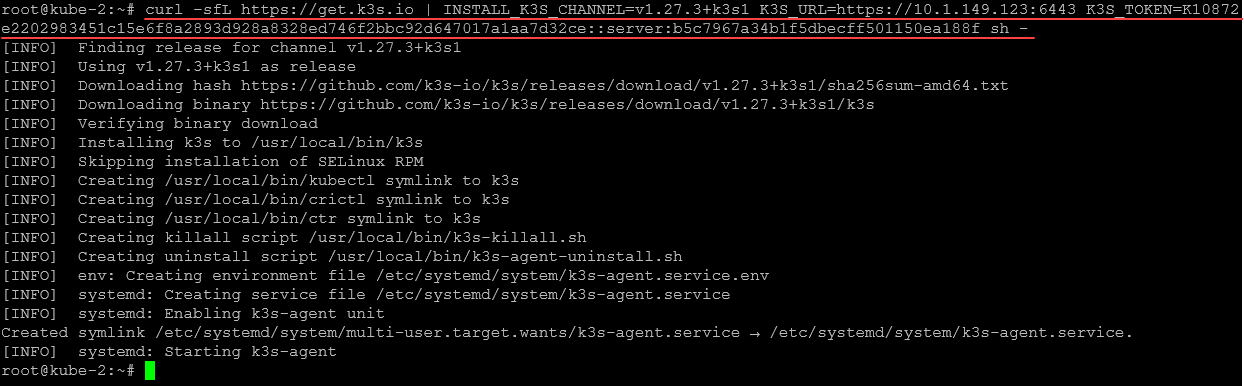

To join a worker node to the cluster, install k3s on it and pass the server URL and join token:

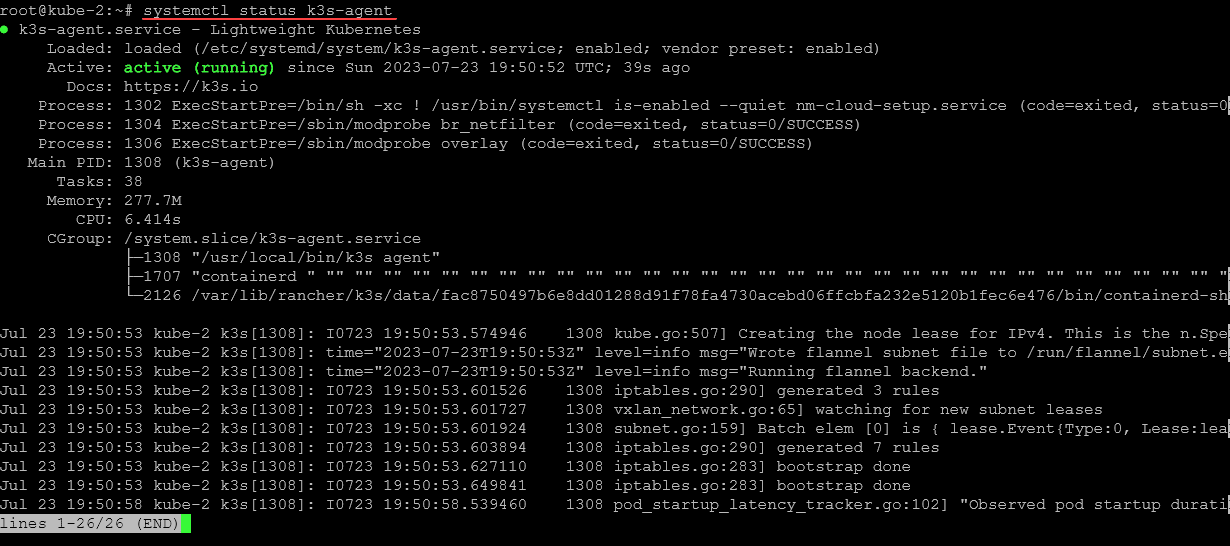

curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.27.3+k3s1 K3S_URL=https://10.1.149.123:6443 K3S_TOKEN=<your join token> sh -

After it installs, you can check the status of the agent service using:

systemctl status k3s-agent

Once you have joined all your nodes, you can check the status of the cluster from the server with:

sudo kubectl get nodes

k0s vs k3s: Exploring the differences

Choosing between k0s and k3s comes down to their fundamental differences. Both are single-binary Kubernetes distributions suited to environments ranging from virtual machines to bare metal. k3s leans toward a feature-rich, batteries-included setup, while k0s sticks to the bare essentials.

k0s keeps things simple by not bundling additional tools, unlike k3s, which includes an ingress controller and a load balancer right out of the box. In single-server setups, k3s also replaces etcd, the default Kubernetes datastore, with a lightweight SQLite database. This is another critical difference in the k3s vs k0s comparison.
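One quick way to see this difference for yourself is to list the system pods on each cluster. Exact pod names vary by version, so treat the comments below as rough expectations rather than exact output.

```shell
# On a k3s server: expect pods such as traefik, svclb-traefik-*, local-path-provisioner,
# coredns and metrics-server (plus the helm-install jobs that deployed Traefik).
sudo k3s kubectl get pods -n kube-system

# On a k0s controller: expect core networking and DNS pods (for example kube-proxy,
# kube-router or calico, coredns and metrics-server) but no ingress controller.
sudo k0s kubectl get pods -n kube-system
```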

The Control Plane and Cluster Nodes: Diving Deeper into k0s and k3s

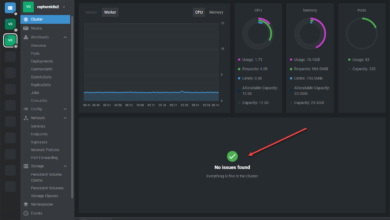

k0s and k3s both offer a simplified control plane and make it easy to set up a Kubernetes cluster with a handful of worker nodes. These Kubernetes distributions cater to everything from small clusters (a single node to a few worker nodes) to large-scale production workloads.

In both distributions, the control plane (the server or controller nodes) manages the Kubernetes worker nodes. k3s packages the Kubernetes API server and other control plane components into a single process tuned to run with minimal resources. This makes it an attractive choice for low-power devices and edge locations.

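k0s, by contrast, delivers a pure upstream Kubernetes experience. By default, its controller nodes run only control plane components and do not run workloads, which is why the single-node install above uses --enable-worker. This clear separation suits integration with existing infrastructure.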
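Here is a short sketch of the two k0s controller modes. Run only one of the install commands on a given node. For comparison, k3s server nodes register as regular nodes and accept workloads by default.

```shell
# Option 1 (k0s default): controller only. Control plane components run,
# but no kubelet, so the node is not listed by "kubectl get nodes" and runs no pods.
sudo k0s install controller

# Option 2: controller plus worker, as used in the single-node install above.
sudo k0s install controller --enable-worker

# Whichever option you chose, start the service.
sudo k0s start
```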

Deploying Applications and Managing Resources: k0s and k3s at Work

k0s and k3s both streamline deploying and managing applications in Kubernetes clusters, but their approaches differ notably. k3s provides an out-of-the-box ingress controller and load balancer, which simplifies exposing applications.

In contrast, k0s lets users choose the components they need. Its minimalist approach reduces memory footprint and overhead, making it efficient for running Kubernetes on systems with minimal resources. Both distributions manage Kubernetes resources effectively but cater to different use cases and operational constraints.
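As an example of that pick-your-own approach, here is a minimal sketch that adds an ingress controller to a k0s cluster. It assumes Helm is installed on the controller and uses the community ingress-nginx chart. Any other ingress controller would work just as well.

```shell
# Export an admin kubeconfig from the k0s controller so kubectl and Helm can use it.
mkdir -p ~/.kube
sudo k0s kubeconfig admin > ~/.kube/config
chmod 600 ~/.kube/config

# Install the ingress-nginx controller from its Helm chart repository.
helm upgrade --install ingress-nginx ingress-nginx \
  --repo https://kubernetes.github.io/ingress-nginx \
  --namespace ingress-nginx --create-namespace
```

k0s also ships no load balancer, so the controller's LoadBalancer service will stay pending unless you add one (MetalLB, for example) or switch the service to NodePort.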

Ease of Use: Simplifying Kubernetes with k0s and k3s

When discussing the ease of use of Kubernetes distributions, the k0s vs k3s comparison presents an interesting contrast. k0s touts a minimalist approach to Kubernetes, aiming to reduce the heavy lifting usually associated with setting up a Kubernetes cluster.

Packaging all necessary components into a single binary removes the need for complex configuration files and allows for quick, straightforward cluster deployment. This approach caters to users who want a streamlined, pure Kubernetes experience without extensive setup or host OS dependencies.

On the other hand, k3s takes a more feature-rich approach. While also providing a single binary distribution, it includes various components out of the box, such as an ingress controller and a local path provisioner for persistent storage.

This approach can reduce developer friction by providing readily available tools for everyday tasks, making it an appealing option for users looking for an out-of-the-box solution.

Resource Efficiency: Lightweight Champions in the Kubernetes Arena

k0s and k3s both excel at resource efficiency. k0s has no host OS dependencies beyond the kernel and takes a lean approach, which keeps memory usage low and makes it ideal for environments with minimal resources. It is especially well suited to edge computing and remote locations where resources may be limited.

k3s takes a slightly different approach to resource optimization. Besides being lightweight, it replaces etcd, the default Kubernetes datastore, with a SQLite-backed option by default. This simpler storage layer consumes fewer resources, which benefits edge computing and IoT devices.

Performance and Scalability: Running Production Workloads with k0s and k3s

When it comes to handling production workloads, both k0s and k3s prove to be reliable solutions. They are designed to run on different infrastructures and manage containers effectively, which allows them to handle high-availability scenarios and large-scale deployments.

k0s’ design is straightforward and minimalist, focusing on the core functionalities of Kubernetes, making it a dependable choice for running production workloads, particularly in lightweight environments. Conversely, k3s’s feature-rich approach and broad compatibility with different container runtimes make it a flexible choice for various production workloads, from simple applications to complex, distributed systems.
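For high availability, the two distributions take different routes. The sketch below follows the documented patterns and uses placeholders you must replace. SECRET is a shared token you choose for k3s. 192.0.2.10 stands in for your first k3s server's address. /path/to/controller-token.txt is the k0s controller join token file. A production k0s control plane also expects a load balancer in front of the controllers, which is not shown here.

```shell
# k3s, first server: start with embedded etcd instead of SQLite.
curl -sfL https://get.k3s.io | K3S_TOKEN=SECRET sh -s - server --cluster-init

# k3s, each additional server (aim for an odd total, such as three):
curl -sfL https://get.k3s.io | K3S_TOKEN=SECRET sh -s - server \
  --server https://192.0.2.10:6443

# k0s, on an existing controller: create a join token for another controller.
sudo k0s token create --role=controller > controller-token.txt

# k0s, on the new controller node (after copying the token file over):
sudo k0s install controller --token-file /path/to/controller-token.txt
sudo k0s start
```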

Compatibility and Integration: How k0s and k3s Play Well with Others

k0s and k3s both integrate well with other tools. k0s relies only on the host OS kernel, which reduces potential conflicts with host OS dependencies. It can also work with any container runtime that implements the Container Runtime Interface (CRI), so users can select the runtime that best suits their needs.

k3s goes a bit further. It bundles several components out of the box and can switch from its embedded containerd to Docker with a single install flag. This contributes to a more flexible, adaptable Kubernetes experience.

Wrapping up

Ultimately, either of these Kubernetes distros, k0s or k3s, will provide a great environment for running Kubernetes. One may simply fit your use case or needs better than the other. Either way, both will let you run modern containerized workloads at home or in production.

Any source for this? AFAIK they only started gathering interest to submit an application via a GitHub issue.

Hi Jannis,

I will link to this in the post as well, but the official GitHub link for k0s is found here: https://github.com/k0sproject/k0s.

Brandon

What’s funny is k3s appears to be, not k0s: https://www.cncf.io/projects/k3s/

losinggeneration, interesting for sure. Mirantis says they are “CNCF certified.” Not sure if this is a step down from a project recognized by CNCF, but interesting nonetheless.

Brandon

Great comparison without taking shots and focusing on comparing features and totally agree they both work fine for specific use cases.

Concerning the CNCF certified, while both are certified, meaning they pass all the conformance tests to be “at the same level” as kubernetes vanilla, only K3s has currently been given to the CNCF and accepted as the official second kubernetes distro (first being kubernetes itself).

Last but not least, if you check both cards on https://landscape.cncf.io/card-mode?category=certified-kubernetes-distribution&grouping=category, you’ll see that K3s belongs to CNCF while K0s is still Mirantis.

But again, this detail doesn’t impact the article quality, so great job Brandon.

Nuno,

That makes total sense, and thanks for explaining the differences in the realm of CNCF! Hopefully this helps with any questions readers may have about how the two relate to CNCF.

Brandon

k0sctl would be highly recommended to set up and maintain a k0s cluster.

Michael,

Great recommendation and thank you for your comment. I wanted to compare installing k0s and k3s using the same type of install for the blog but I would like to do a deep dive for a follow up post using k0sctl.

Brandon

Curious if Microk8s is still a thing?

Nate,

Thanks for the comment! Absolutely, Microk8s is still alive and well and is a great solution. Portainer has even added a direct provisioning wizard for Microk8s clusters in its latest releases, which makes it super easy to get up and running with Kubernetes in a home lab or even a production environment. You can check out my post here: https://www.virtualizationhowto.com/2023/05/mikrok8s-automated-kubernetes-install-with-new-portainer-feature/

Brandon

I’ve tested K0s and I like it but I’m also curious about Sidero Metal. The idea of taking bare hardware, connecting it all and bootstrapping a Kubernetes cluster with a single PC is intriguing. Have you taken a look at Sidero Metal?

Laurence,

Thank you for the comment. I haven’t tried Sidero Metal, but your comment has me wanting to try it out. Have you tried it before or played around with it in the lab?

Brandon