Virtual machine vs container: Which is best for home lab?

No doubt, if you have worked with technology for any time, you have heard the terms “virtual machines” and “containers” more than once. Both are core technologies in modern infrastructure. However, many people running home lab environments may wonder which they should run: virtual machines or containers. This post dives into virtual machines vs. containers and which could serve your home lab best. The answers may surprise you. First, let’s compare the two and look at their strengths and weaknesses.

Table of contents

- What is a virtual machine (VM)?

- How virtual machines operate

- What are containers?

- Exploring containers functionality

- Security aspects of VMs and containers

- Virtual machine vs container in the home lab

- The Future of home labs: Virtual machines, containers, or both?

- Deciding between virtual machines and containers


What is a virtual machine (VM)?

Let’s start our discussion with virtual machines, often referred to as VMs. These have been integral to mainstream computing since x86 virtualization took off in the early 2000s. Essentially, they are digital replicas of physical computers, furnished with their own operating system and system resources. These resources are allocated from the underlying hardware of a real-world, physical server.

In essence, running multiple virtual machines on a single server gives you the flexibility of having multiple machines with different operating systems within your reach.

The hypervisor

A virtual machine runs an operating system and applications, behaving independently while using a fraction of the resources of a physical server. The magic behind virtual machines lies in a component called the hypervisor. A bare-metal (Type 1) hypervisor effectively takes the place of the host operating system, while a hosted (Type 2) hypervisor runs as an application on top of one.

This software layer allows your physical computer, the host, to create and run virtual machines. The hypervisor assigns system resources such as processing power, memory, and storage space from the host computer to each of the guest VMs it creates.

Multiple operating systems same hardware

A standout characteristic of virtual machines is their capacity to host multiple operating systems on a single set of physical hardware. A host computer, perhaps running Windows, can concurrently run virtual machines with different operating systems, such as Linux or macOS, all on the same hardware.

Virtual machines mimic the physical computer’s hardware architecture. As such, each VM boasts its own collection of virtual hardware components, encompassing CPUs, memory, hard drives, network interfaces, and other peripheral devices. The guest OS, or the operating system running within the virtual machine, interacts directly with these virtual hardware elements.

Efficient management

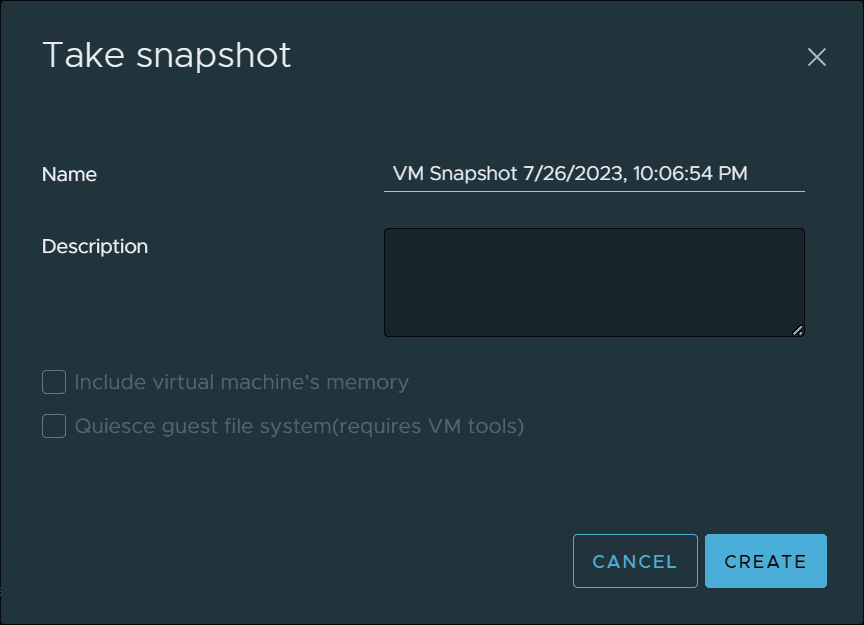

Another critical feature is the ability to manage virtual machines efficiently. You can create, delete, and modify VMs as needed, making tasks such as testing across multiple environments or software development on various operating systems a breeze.

You can also take virtual machine snapshots, providing a frozen point-in-time copy of a VM. This is useful for tasks like testing new software, where you can roll back to the snapshot if something goes wrong.

In essence, virtual machines provide the flexibility to emulate multiple computers with potentially different operating systems, all within the confines of your existing physical hardware.

How virtual machines operate

In a virtual environment, the hypervisor running on the physical computer creates an isolated virtual environment in which a guest operating system runs. This complete operating system layer gives each individual virtual machine its independence. It is separated from the host OS and neighboring virtual machines.

Make good use of modern hardware

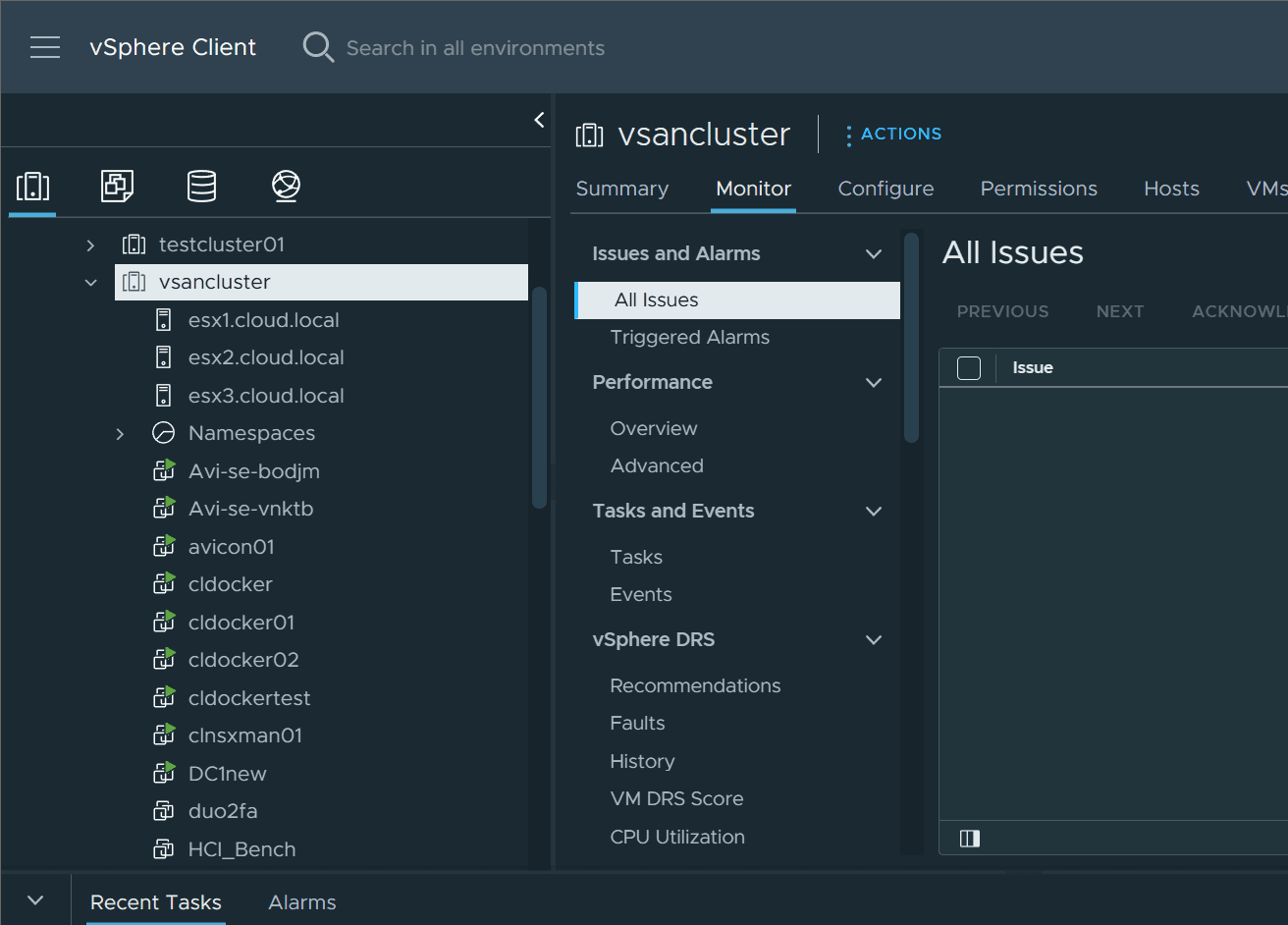

Leveraging modern, high-performance hardware, it’s entirely feasible for a single server to accommodate numerous virtual machines, each running a distinct operating system. The task of managing virtual machines has been significantly streamlined due to established virtual machine platforms like VMware and VirtualBox.

These platforms offer tools to handle virtual machine images, take a virtual machine snapshot, clone virtual machines, migrate VMs between hosts, etc., making operating and maintaining environments simple.

What are containers?

Shifting our focus to container technology, containers are another type of virtualization technology, but they take a different approach compared to virtual machines. Rather than emulating an entire computer’s hardware and running a complete operating system, a container packages an application and its dependencies in an environment isolated from other containers.

Shared host operating system kernel

A container runs directly on the host OS kernel, sharing it with other containers. This means that all containers on a host use the same underlying operating system, unlike virtual machines which can run different operating systems. The shared operating system approach is part of what makes containers lightweight and efficient compared to VMs.

Container images

Containers originate from something known as container images. These are lightweight, standalone, executable software packages that include everything needed to run a specific piece of software: the code, runtime, system tools, libraries, and configuration. Take Docker containers as an example; they are created from Docker images, which are defined in a Dockerfile.

Owing to their small size and quick startup time, containers prove to be very efficient for dynamic development workflows and microservices, particularly when integrated with CI/CD platforms. They enable a uniform packaging and distribution of software across multiple environments, enhancing the efficiency of the software development lifecycle.
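As a minimal sketch of how an image definition ties into a running service, the Compose fragment below builds an image from a local Dockerfile and tags it. The service name `myapp`, the `./myapp` directory, and the `1.0.0` tag are hypothetical placeholders, not anything from this lab.

```yaml
services:
  myapp:                      # hypothetical service name
    build:
      context: ./myapp        # hypothetical directory that holds the Dockerfile
      dockerfile: Dockerfile  # the build file that defines the image
    image: myapp:1.0.0        # tag applied to the image once it is built
    restart: unless-stopped
```

Pinning an explicit tag rather than `latest` makes it easier to tell which image version a container is actually running.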

Process-level isolation

The isolation provided by containers is at the process level. Each container operates as an isolated user-space instance, running a single application or service. While they don’t offer as robust isolation as VMs, containers still provide an effective way to package and isolate applications with their dependencies, reducing conflicts and improving deployment consistency across multiple environments.

Container engines like Docker run and manage containers on a single host, while orchestration platforms like Kubernetes manage them across one or more hosts. Together, these tools allow you to automate the deployment, scaling, networking, and availability of containerized applications, making the management of containers more straightforward and scalable.

Exploring containers functionality

Choosing between containers and virtual machines in the home lab is not just a question of resources. It is also about how each fits into your lab, or into a software development lifecycle if you are interested in learning about CI/CD pipelines and similar tooling.

In an agile development environment, for instance, containers can be beneficial due to their lightweight nature and fast start-up times. Running containers on your physical machine won’t burden your system resources as much as running multiple virtual machines.
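If you want a hard ceiling on what a container can consume, Compose lets you set limits per service. The fragment below is a sketch that assumes a recent Docker Compose v2 release (older `docker-compose` v1 releases only apply `deploy` limits in compatibility mode). The `whoami` service and the specific values are illustrative assumptions.

```yaml
services:
  whoami:                          # hypothetical demo service
    image: traefik/whoami:latest   # tiny web server, used here only as an example
    deploy:
      resources:
        limits:
          cpus: "0.50"             # at most half of one CPU core
          memory: 128M             # the container is killed if it exceeds this
```

Limits like these let several small services share one container host without any single one starving the others.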

The container engine, which could be Docker or a similar platform, is responsible for managing the lifecycle of containers. It handles tasks like creating, starting, stopping, and removing containers from a container image, based on the commands or Compose definitions you give it.

With containers and simple Docker Compose code, you can quickly spin up multiple solutions for effective application stacks. Below we are spinning up Traefik and Pi-Hole using Docker Compose:

    version: '3.3'

    services:
      traefik2:
        image: traefik:latest
        restart: always
        command:
          - "--log.level=DEBUG"
          - "--api.insecure=true"
          - "--providers.docker=true"
          - "--providers.docker.exposedbydefault=true"
          - "--entrypoints.web.address=:80"
          - "--entrypoints.websecure.address=:443"
          - "--entrypoints.web.http.redirections.entryPoint.to=websecure"
          - "--entrypoints.web.http.redirections.entryPoint.scheme=https"
        ports:
          - "80:80"
          - "443:443"
        networks:
          - traefik
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
        container_name: traefik

      pihole:
        image: pihole/pihole:latest
        container_name: pihole
        ports:
          - "53:53/tcp"
          - "53:53/udp"
        dns:
          - 127.0.0.1
          - 1.1.1.1
        environment:
          TZ: 'America/Chicago'
          WEBPASSWORD: 'password' # placeholder, change before use
          PIHOLE_DNS_: '1.1.1.1;9.9.9.9'
          DNSSEC: 'false'
          VIRTUAL_HOST: piholetest.cloud.local # Same as port traefik config
          WEBTHEME: default-dark
          PIHOLE_DOMAIN: lan
        volumes:
          - '~/pihole/pihole:/etc/pihole/'
          - '~/pihole/dnsmasq.d:/etc/dnsmasq.d/'
        restart: always

    # Not shown in the original listing: Compose requires the network that
    # traefik2 references to be declared at the top level.
    networks:
      traefik: {}

Security aspects of VMs and containers

Security is always a consideration, no matter what type of environment you are thinking about, including home labs. As you weigh the options between virtual machine vs container, consider the isolation level.

Comparing the two, virtual machines offer stronger isolation since each runs its own operating system and kernel, which reduces the security risk. Containers, while efficient, share the host OS kernel with the other containers running on the host. That shared kernel can be a security concern: a kernel vulnerability or a misconfigured container can potentially affect the host and every other container on it.
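Because containers share the host kernel, it is worth tightening what each one is allowed to do. The Compose fragment below is a minimal sketch of common hardening options, not a complete security baseline. The `whoami` service is a hypothetical example, and which capabilities a real service needs depends on the image.

```yaml
services:
  whoami:                          # hypothetical demo service
    image: traefik/whoami:latest
    read_only: true                # mount the container's root filesystem read-only
    cap_drop:
      - ALL                        # start with no Linux capabilities at all
    cap_add:
      - NET_BIND_SERVICE           # add back only what is needed (binding port 80)
    security_opt:
      - no-new-privileges:true     # processes cannot gain privileges via setuid binaries
```

The pattern is to drop everything first and add back only what the application demonstrably requires.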

Virtual machine vs container in the home lab

OK, so we now have a much better understanding of what virtual machines and containers are and what they are typically used for. So which is best for the home lab? Well, in the famous phrase that none of us like to hear: it depends.

I have been running a home lab for a decade now and have seen many technological shifts in that time. However, the right answer for most will probably be running both. Why is that the answer?

Well, first and foremost, containers need container hosts. The container host is the computer or virtual machine with Docker or another container runtime installed. In most home lab and production environments, that host will likely be a virtual machine, since VMs are much easier to manage, back up, and migrate than a physical computer.

So by their nature, containers work well with VM technology. Most people will not replace their entire lab with a bare-metal container host; they will keep their hypervisor in place and run virtual machines as container hosts.
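One way to turn a fresh VM into a container host is a cloud-init user-data file applied at first boot. This is only a sketch under stated assumptions: the VM image is Debian- or Ubuntu-based, cloud-init is enabled, and the distribution’s `docker.io` package is acceptable as the Docker engine.

```yaml
#cloud-config
# Assumption: Debian- or Ubuntu-based VM image with cloud-init enabled.
package_update: true                # refresh the package index on first boot
packages:
  - docker.io                       # Docker engine as packaged by the distribution
runcmd:
  - systemctl enable --now docker   # start Docker now and on every boot
```

Keeping the host definition this small is part of why VMs make convenient container hosts: the VM can be snapshotted, backed up, or rebuilt without touching the hypervisor.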

The shift in home lab technology – more containers!

However, I think we have seen home lab focus and technologies shift much as production environments have. Containers allow us to run home labs much more efficiently. Instead of the 65 VMs we might have run 10 years ago, we may now have 5-10 VMs, some running as container hosts with multiple containers serving out self-hosted services.

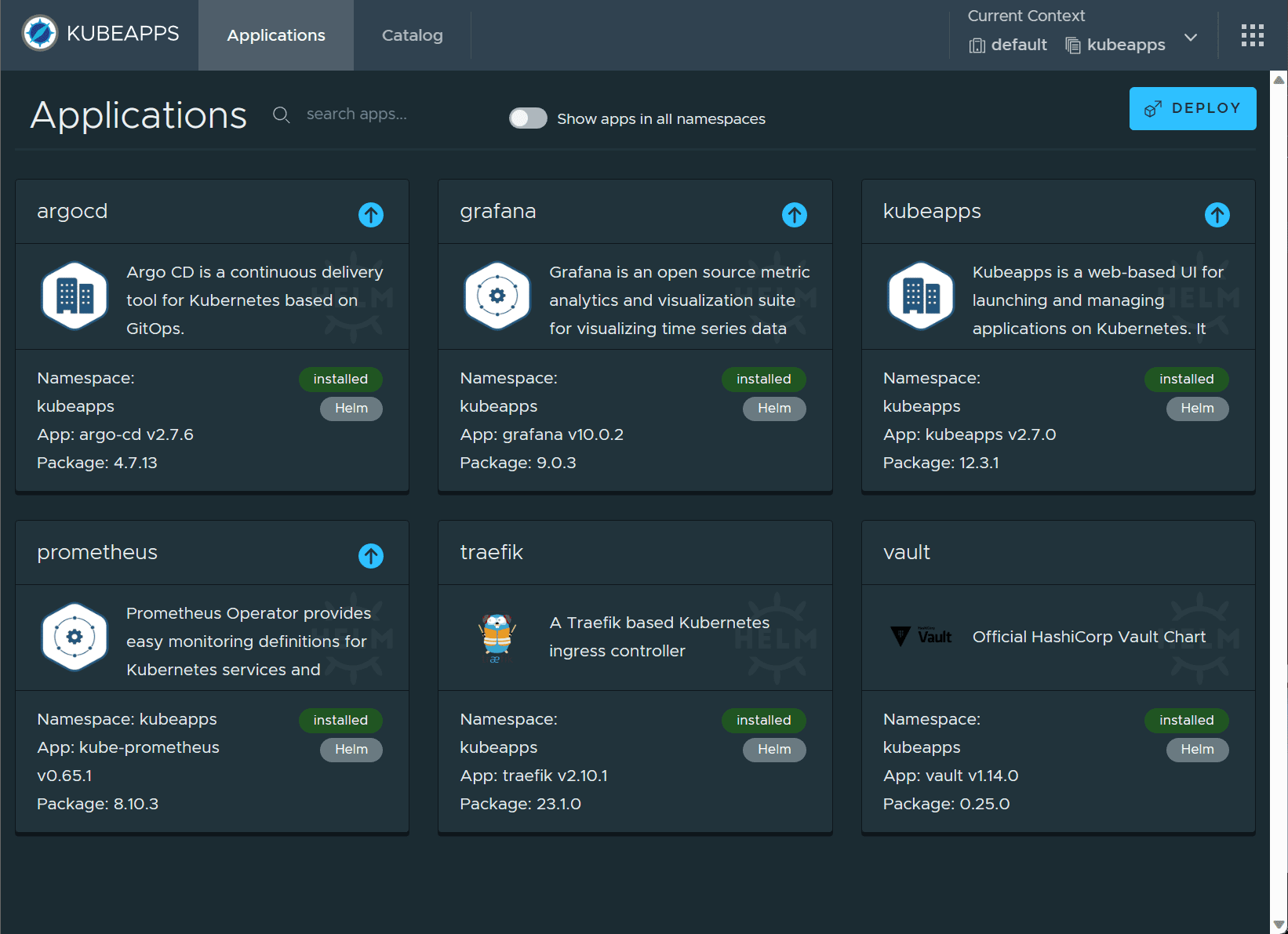

In addition to container hosts, Kubernetes has also taken off in many home lab environments. Kubernetes is a container orchestration tool that provides many great features, including:

- Robust container scheduling

- Elasticity

- Self-healing
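To show how those features look in practice, here is a minimal sketch of a Kubernetes Deployment. The `whoami` name, image, and resource numbers are illustrative assumptions. The scheduler places the pods based on the resource requests, `replicas` is the knob you change to scale out, and the liveness probe lets Kubernetes restart a container that stops answering.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: whoami                     # hypothetical example workload
spec:
  replicas: 2                      # elasticity: raise or lower to scale
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
        - name: whoami
          image: traefik/whoami:latest
          ports:
            - containerPort: 80
          resources:
            requests:              # scheduling: used to pick a node with room
              cpu: 50m
              memory: 32Mi
          livenessProbe:           # self-healing: restart if this check fails
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
```

If a pod crashes or its node goes away, the Deployment controller creates a replacement to get back to the requested replica count.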

Virtual machines also have their place in other use cases. Many may still run big monolithic SQL servers, backup servers, domain controllers, or other management appliances as virtual machines. These are still important and have their place.

The great thing about the shift in technology and the new hybrid mix of VMs and containers is it becomes even easier to have a home lab running multiple self-hosted services more efficiently.

The best use case for containers in the home lab

Generally speaking, web-driven services are the best type of service to run inside containers. Containers are excellent for self-hosted web services. In addition, using containers in the home lab is an excellent way to spin up new services to test out without having to worry about spinning up a new VM and installing all the prerequisites needed to run the services.

Below is a screenshot of Kubeapps I have running in my home lab in a Kubernetes cluster, allowing management of many different containerized services.

Container images include all the necessary software and prerequisites to run the application you are spinning up.
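As a sketch of how quickly a test service can be stood up, the Compose file below starts a small web service and routes it through an existing Traefik instance using Docker labels. It assumes a Docker network literally named `traefik` already exists and that Traefik is attached to it with a `websecure` entrypoint. The hostname `whoami.cloud.local` is a hypothetical example.

```yaml
services:
  whoami:
    image: traefik/whoami:latest   # throwaway demo service for testing
    container_name: whoami
    restart: unless-stopped
    networks:
      - traefik
    labels:
      # Hypothetical hostname; point it at the Traefik host in your DNS.
      - "traefik.http.routers.whoami.rule=Host(`whoami.cloud.local`)"
      - "traefik.http.routers.whoami.entrypoints=websecure"
      - "traefik.http.routers.whoami.tls=true"

networks:
  traefik:
    external: true                 # assumption: created beforehand, outside this file
```

When the test is over, removing the service is a single `docker compose down`, with nothing left behind on the host to uninstall.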

The Future of home labs: Virtual machines, containers, or both?

The tech world is steadily leaning towards containerization, especially with the rise of microservices and cloud-native applications. But this doesn’t mean virtual machines are obsolete. They still hold tremendous value in most environments, including home labs.

Containers and virtual machines are not competing technologies. Rather, they complement each other. VMs provide robust isolation, and containers bring speed and efficiency.

Best use for VMs?

- Container hosts

- Big servers that need lots of resources and need to run many applications

- SQL servers, backup servers, domain controllers, etc.

- Management appliances

Best use for containers?

- Running very small web services

- Testing out new services in your home lab

- CI/CD pipelines and agile development

- Evergreen environments that are easily upgraded

Deciding between virtual machines and containers

It’s not necessarily a case of virtual machine vs container. It’s about understanding your specific needs and aligning them with the strengths of each technology. If you require complete operating systems to simulate a complex network of multiple resources or you need to run earlier versions of software, virtual machines could be your best bet.

If your goal is to have a streamlined software development process that can replicate multiple environments quickly, then containers may serve you better.

Ultimately, the decision between containers and virtual machines boils down to the specific requirements of your home lab setup, the physical resources available, and the nature of the projects you’ll be working on.

Nice write-up. I started building my home lab mainly to host Plex, Emby, and Jellyfin as media servers. Hardware-wise, it is an Intel NUC with 3 NVMe drives: 1 for the hypervisor, 1 for media storage, and 1 for backup. It has 32 GB of RAM and a server processor. I installed Proxmox and now I’m trying to work out how to put it all together. It seems that using one Debian VM with 3 Docker containers is the recommended approach. Should I allocate all the RAM and cores to this single VM if I plan to run the three containers on it?

Hey Alex! Thanks for the comment. I wouldn’t recommend dedicating all your available RAM to the VM, as you will need some for Proxmox itself. I would probably allocate at least 8-16 GB of memory to the VM and start there to see what your memory pressure is, then adjust accordingly. You can also run LXC containers in Proxmox for small-footprint containers, but I would probably stick with Docker containers for running your self-hosted services, as they are better supported by vendors.

Brandon

You compare apples to oranges. If one is not a developer he does not need a “home container lab” it will never serve as good as a virtualisation solution. Kubeapps full of trash. Just more trash to maintain the container environment.. whats easy in that?

I want to test a new app before upgrading it in my host? I can do that easily with a virtualisation solution. What use of a container in home lab? Nothing. Running a micro webservice for what? How many people need that..

Emrecnl,

Thanks for your comment. I understand your feelings on containerization. Many have felt the same way and wonder “why.” However, for me, and I think many others, a home lab environment is about learning. The things you learn and prove out in the home lab allow you to take those skills to production environments or to better understand new technologies adopted at work. Also, for home labs, containers allow you to make better use of your resources. They are not competing solutions. I doubt containers will ever get away from the underlying virtual machine until the next “thing” comes along that is able to do that successfully.

Brandon

Why not both? All my containers run in a VM. I use TrueNAS SCALE for storage, and run a Windows 11 VM on it for containers, simple as.

Trevor,

I agree! Neither VMs nor containers are good for every single use case. Running both is the best of both worlds. Also, definitely a fan of TrueNAS SCALE!

Brandon

I prefer LXC/LXD for my home labs. Lighter than VMs, more control than containers.

Bulli,

Great to hear you are using LXC/LXD. Are you running Proxmox? Or running these natively in another Linux distro?

Brandon

LXC/LXD are literally containers.

Yeah, when I hear the industry talk about containers, they always seem to talk about Docker and Kubernetes. I think they should give due weight to LXC/LXD and OpenVZ.

Jonathan,

I agree with you, LXC/LXD is a great option and gives a middle ground between Docker/Kubernetes and full virtual machines. Plus with Proxmox they are super easy to spin up, backup, etc.

Brandon

You don’t need to run full Kubernetes to use containers. You just need a single Linux server or VM. You can containerize any service you like. You can upgrade different services without affecting one another. You can easily roll back and forward.

It’s easier to set up your data storage for application backup and recovery as well.

Not to mention being able to compose applications that have different moving parts, like a mail server. Mailu is way easier than having to hand-configure everything.

Use what you like, but your post just makes it clear how ignorant you are.

Nice writeup, very clear, good flow.

As a relatively new starter to all of this (at the bottom of the DevOps learning curve!) I am, however, still really confused about why people at home prefer Proxmox on one server (Seemingly mini/microcomputers) over spanning containers across several smaller SBCs (In case I have a hardware failure, it’s not going to take out everything).

I initially opted for the latter route (Docker everywhere, Portainer dashboard). I ‘still’ don’t know if this is wise:- all I need is for stuff to work, and when it doesn’t, gracefully failover to a ‘backup snapshot’ (this part I haven’t figured out yet, and honestly, leaves me uncomfortable, as I am still concerned over my docker backup/failover situation – i.e. I do not have a solution!)

This all came to a head recently when I elected to accept an ‘update’ to my dedicated Netgate PfSense firewall unit, thinking it was a good thing to do. It bricked it and put me in 4 hours of hurt while I tried desperately to get data into the property for the next day’s work.

Looking for any advice in this area 😊

Fredu,

Thank you for your comment! I think the reason you see a lot of people running one server as opposed to many servers in proper HA comes down to budget (power, hardware, etc.) and heat. Home lab enthusiasts generally accept the risk of not running HA over the cost of introducing the true HA that you find in the enterprise. Some elect to use disaster recovery as their strategy. They realize that if the host goes down, it will take everything with it. However, if you have good backups of the virtual machines that serve as your Docker hosts, then you will have a good copy of your services and your data. Most who run home labs don’t have anything as critical as you see in “production” environments. However, some have critical services they want to keep up, such as DNS. It’s all about your strategy and needs.

As you mention, though, this can lead to downtime. If you are running Docker, you can look at less complex setups using Docker Swarm as opposed to Kubernetes. However, there are many simple Kubernetes distributions like MicroK8s, k3s, and k0s that make installing Kubernetes a breeze. In the end, it is all about what you are hoping to accomplish. Let me know if this helps.

Thanks Fredu,

Brandon