Stretch isolated network between multiple ESXi servers

Setting up test and lab networks has been very interesting to me over the past few weeks. When you are setting up Dev and Staging environments, you may be required to maintain multiple copies of the production environment, each properly segmented and functional enough that the VMs behave at the network level as they would, or nearly as they would, in production. The requirements may also include keeping the same IP address space. I have already written a couple of posts about creating these types of networks, both with a purely Layer 2 model and with Layer 3 connectivity.

Creating Lab Network Series:

Post 1 Layer 2 – Create isolated test environment same ips and subnet with VMware

Post 2 Layer 3 connectivity with same IP address space – Overlapping Subnets Lab Environment

Stretch isolated network between multiple ESXi servers

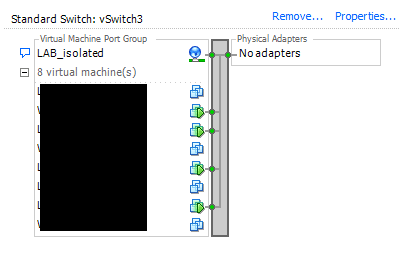

With the posts above, it was assumed that all VMs existed on a single ESXi host configured with an isolated virtual switch. By “isolated” we generally mean that we don’t have any network cards assigned to the vSwitch. It will generally look something like this:

When virtual machines are connected to this type of switch, there is nowhere for the traffic to go or to be routed out, so you basically have one “layer 2” wire that you can connect virtual machines to. They will be able to communicate with each other but with no one else. The idea works exactly the same way as when you take a single physical switch and plug devices into it but give the switch no uplink. It is isolated.
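As a rough PowerCLI sketch of the single-host case, an isolated vSwitch is simply one created without any physical NICs. This assumes PowerCLI is installed and `Connect-VIServer` has already been run; the host name and vSwitch name are placeholders I made up for illustration, and the port group name is borrowed from the example later in this post.

```powershell
# Assumption: a session to vCenter or the host is already open via Connect-VIServer.
# "esxi01.lab.local" and "vSwitchIsolated" are hypothetical names.
$vmHost = Get-VMHost -Name "esxi01.lab.local"

# Leaving out -Nic creates the standard vSwitch with no physical uplinks.
$vSwitch = New-VirtualSwitch -VMHost $vmHost -Name "vSwitchIsolated"

# VMs attached to this port group can reach each other, but nothing off the host.
New-VirtualPortGroup -VirtualSwitch $vSwitch -Name "Dev Isolated"
```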

This type of setup works great if you have one development or staging server that you host your VMs on. However, what if you want to scale out and add more hosts so that additional compute and storage are available to the existing “isolated network”? Well, here is where we have to rethink the truly isolated switch.

As mentioned above, the isolated vSwitch has no network cards assigned. Obviously, to share the network between two hosts, we are going to have to extend the network between them by connecting them in some form or fashion. This is exactly what we do. By using a physical switch and a VLAN that is separate from production and management traffic, we can extend an “isolated” network between two or more hosts, even though the vSwitch now has physical links connected to it.

There are many ways that the vSwitch(es) can be configured. If you want to dedicate physical links to your isolated traffic, you can create a new vSwitch and use those specific uplinks for that particular network. If all of your uplinks are already assigned to an existing vSwitch, you can simply create a new port group on that vSwitch that is dedicated to your isolated traffic.
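The two choices above might look something like this in PowerCLI. Treat it as a sketch only: the host name, the vSwitch names, and the `vmnic2`/`vmnic3` uplinks are assumptions for illustration, so substitute whatever is actually free on your hosts.

```powershell
# Assumption: Connect-VIServer has already been run; all names below are hypothetical.
$vmHost = Get-VMHost -Name "esxi01.lab.local"

# Option 1: a new standard vSwitch with uplinks dedicated to the isolated traffic.
$dedicated = New-VirtualSwitch -VMHost $vmHost -Name "vSwitchDevShared" -Nic "vmnic2", "vmnic3"

# Option 2: reuse the existing vSwitch that already owns all of the uplinks.
# The isolated traffic then gets its own port group on this switch.
$existing = Get-VirtualSwitch -VMHost $vmHost -Name "vSwitch0" -Standard
```

Either switch object can then be handed to `New-VirtualPortGroup` when the port group for the isolated network is created.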

VLAN Configuration

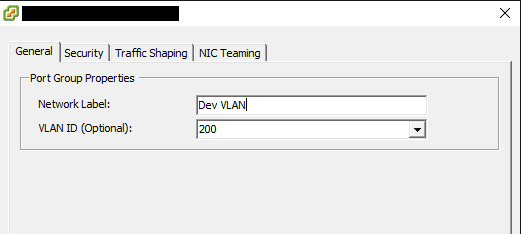

There are a few different ways that we can tag the VLAN traffic that we have designated. However, for a lab environment, the easiest way and recommended way on many fronts is to use Virtual Switch Tagging or VST. This method tags all traffic on a particular vSwitch port group with a certain VLAN ID. Below, you can see an example of a portgroup where we have VST in place.

To “share” this network between the ESXi servers in question, we would simply make sure the VLAN tagged port group exists on every ESXi server that needs to have VMs see the particular network.
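If there are more than a couple of hosts, the port group can be created in a loop instead of by hand. The following is a minimal sketch, assuming standard vSwitches and an existing `Connect-VIServer` session; the host names, the `vSwitch0` name, and VLAN ID 200 are placeholders rather than values from my environment.

```powershell
# All values below are hypothetical; adjust them to match your lab.
$hostNames     = "esxi01.lab.local", "esxi02.lab.local"
$vSwitchName   = "vSwitch0"
$portGroupName = "Dev Isolated Shared"
$vlanId        = 200

foreach ($vmHost in Get-VMHost -Name $hostNames) {
    # Skip hosts where the port group is already present.
    $found = Get-VirtualPortGroup -VMHost $vmHost -Name $portGroupName -ErrorAction SilentlyContinue
    if (-not $found) {
        $vSwitch = Get-VirtualSwitch -VMHost $vmHost -Name $vSwitchName -Standard
        # -VLanId is what makes this a VST (virtual switch tagged) port group.
        New-VirtualPortGroup -VirtualSwitch $vSwitch -Name $portGroupName -VLanId $vlanId
    }
}
```

Using the same port group name and VLAN ID on every host keeps the network label consistent, which matters when VMs move between hosts.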

As you can see, the process for sharing an isolated network between multiple ESXi servers is really no different from sharing any VLAN-backed network between ESXi servers. The only real difference between this type of network and a traditional VLAN-backed network is that we don’t trunk the VLAN out of the physical switch to the rest of the network, so the traffic stays isolated on the physical switch that aggregates the traffic between the ESXi hosts.

The exception to the “no trunking” rule would be if the ESXi hosts you want to aggregate are on separate switches. In that case, you would need to trunk the VLAN between those switches so that tagged traffic can pass between them.

Use PowerCLI to change the network label on many VMs

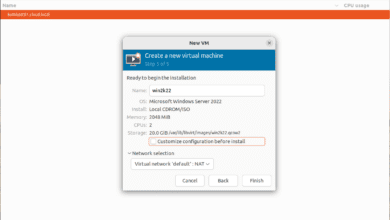

What if you have tens or hundreds of VMs that need to be moved to the new “shared isolated network” on the ESXi hosts? You can easily do this with PowerCLI.

Get-VM | Get-NetworkAdapter | Where-Object {$_.NetworkName -eq "Dev Isolated"} | Set-NetworkAdapter -NetworkName "Dev Isolated Shared" -Confirm:$false
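Because that one-liner touches every matching VM without prompting, it may be worth previewing the change first. Here is one way that could be done, assuming the same two port group names as above and an existing `Connect-VIServer` session.

```powershell
# Collect the adapters that are still on the old, single-host network.
$adapters = Get-VM | Get-NetworkAdapter | Where-Object { $_.NetworkName -eq "Dev Isolated" }

# List which VMs and adapters would be affected.
$adapters | Select-Object Parent, Name, NetworkName

# -WhatIf reports what would be changed without actually changing anything.
$adapters | Set-NetworkAdapter -NetworkName "Dev Isolated Shared" -WhatIf
```

Once the list looks right, swapping `-WhatIf` for `-Confirm:$false` applies the change.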

Final Thoughts

The need to stretch an isolated network between multiple ESXi servers may come up if you are building extended development environments. Hopefully, the information above will help with the details of how this can be accomplished.