Hi,

I have a headache with my homelab network and I'm stuck. Maybe someone could help me a bit, as I'm not a networking guy; in fact, I'm a hardware tech.

I've been rebuilding my VMware homelab (I know, it's time to look for a new hypervisor; that's one reason to have a working homelab for testing):

Basically, I have 3 ESXi hosts at home and 1 on a cloud VPS (where vCenter usually lives, at least for now).

The VPS server is a cheap one with only 1 NIC, so I installed pfSense in a VM on it, and also on one of the ESXi hosts at home. With the pfSense WireGuard plugin I built a working site-to-site VPN: at home I have a 172.26.0.0/24 network, and on the VPS server I have 172.16.0.0/24. I can manage everything from my home laptop, where I also installed a VPN client for when I'm on the road as a roadwarrior.
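A routed site-to-site tunnel only works cleanly when the LANs on each side use distinct, non-overlapping subnets. A quick sanity check of the two ranges described above, using Python's standard `ipaddress` module (a sketch, nothing pfSense-specific):

```python
import ipaddress

home = ipaddress.ip_network("172.26.0.0/24")  # home LAN behind the home pfSense
vps = ipaddress.ip_network("172.16.0.0/24")   # isolated LAN behind the pfSense VM on the VPS

# If this printed True, traffic for the "remote" side could be treated as local.
print(home.overlaps(vps))  # False
```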

Now I've been stuck for days on this issue, which started when I wanted to add NFS shared storage to the VPS ESXi server:

On my VPS server I created 2 vSwitches:

- vSwitch0: the VM Network port group, where the pfSense WAN is (plus vmk0 for the original management network, which I plan to remove), connected to vmnic0 (the only NIC).

- vSwitch1: an 'isolated net' with no physical NIC. On it I created a LAN management network (vmk1) and a 'NAT network' port group with vCenter, a Debian VM and the pfSense LAN. All VMs have internet access and connectivity to the homelab (from 172.16 to 172.26 and vice versa).

OK, now I wanted to share NFS storage from an ESXi host at home. I can add it perfectly to all the home hosts, and I can also mount it manually on the Debian VM on the cloud server. But I can't add it to the VPS ESXi host. Checking, vCenter is able to ping 172.26.0.0/24 (home), since I added all the hosts etc., but from the VPS ESXi host console I can't ping my 172.26.0.0 network.

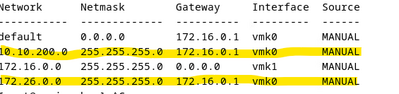

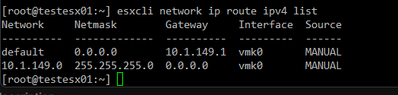

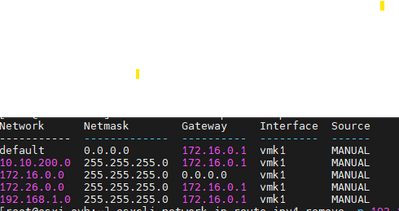

Result of `esxcli network ip route ipv4 list`:

The marked ones are routes I added today for testing, with no success, so they can be ignored. I'm lost, to be honest: I see all routes are added to the vmk0 interface, and although the VPS ESXi host can ping the 172.16.0.0 network (vCenter and the VMs on it; the primary DNS is the pfSense IP), I can't make it route to my home network to reach the storage server. The VPS ESXi host can't ping the internet either (I don't care about that, I don't want it public as long as I'm connected through the site-to-site VPN, but I don't know whether it's related).
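One way to reason about why traffic ends up on vmk0: an IP stack forwards each packet using the most specific (longest-prefix) route that contains the destination. The Python sketch below models that with the standard `ipaddress` module. The routing table is hypothetical, not read from the screenshots: 203.0.113.1 is a placeholder for the VPS provider's gateway, 172.16.0.1 is an assumed pfSense LAN address, and it assumes the ESXi default netstack follows plain longest-prefix matching.

```python
import ipaddress
from typing import List, NamedTuple, Optional


class Route(NamedTuple):
    network: ipaddress.IPv4Network
    gateway: str    # "Local Subnet" for directly connected routes
    interface: str  # vmkernel adapter the route is bound to


def lookup(routes: List[Route], destination: str) -> Optional[Route]:
    """Return the matching route with the longest prefix, or None."""
    addr = ipaddress.ip_address(destination)
    matches = [r for r in routes if addr in r.network]
    if not matches:
        return None
    return max(matches, key=lambda r: r.network.prefixlen)


# Hypothetical table; all gateway addresses are placeholders.
routes = [
    Route(ipaddress.ip_network("0.0.0.0/0"), "203.0.113.1", "vmk0"),  # default via provider (placeholder)
    Route(ipaddress.ip_network("203.0.113.0/24"), "Local Subnet", "vmk0"),
    Route(ipaddress.ip_network("172.16.0.0/24"), "Local Subnet", "vmk1"),
]

# A home address only matches the default route, so it goes out vmk0.
print(lookup(routes, "172.26.0.10").interface)  # vmk0

# A specific route via the (assumed) pfSense LAN IP wins by prefix length.
routes.append(Route(ipaddress.ip_network("172.26.0.0/24"), "172.16.0.1", "vmk1"))
print(lookup(routes, "172.26.0.10").interface)  # vmk1
```

In this model, unless the host has a specific route for 172.26.0.0/24 whose gateway sits on the vmk1 subnet, packets for the storage server fall through to the default route on vmk0 and leave via the WAN side instead of the tunnel.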

To me, if everything is connected except the VPS ESXi host, pfSense isn't the issue (it's very permissive, since I'm trying to make it work first before adding extra security).

Could someone point me in the right direction: is it an ESXi host routing or configuration mistake, or is it related to the pfSense firewall or routing? Maybe this isn't the right way to isolate the cloud ESXi host... Any help will be appreciated.

Thanks