Over the past few days, I've been working on converting my 2-host ESXi cluster to using vSAN instead of independent datastores.

My reasons for moving to vSAN: 1) because it's cool and I can, 2) not having to worry about balancing VMs across multiple datastores, 3) taking my first step into HCI.

Here's my basic hardware layout:

Host 1: dual Xeon CPU in a 2U Huawei server, with 8x PCIe 3.0 x8 slots (no bifurcation 😔)

Host 2: single Xeon CPU in a 3U Chenbro case with a mixture of PCIe 3.0 slots and a few 2.0 slots (a few 3.0 slots support bifurcation, yay!)

In the old configuration, Host 1 used 2x 2TB NVMe drives, each as its own datastore. Host 2 had a 2TB and a 1TB NVMe drive, also each as its own datastore. So I had 4 datastores total across the 2 hosts.

The biggest annoyance was that I wanted to reuse the existing NVMe drives for the new vSAN datastore, so I had to buy a few more drives to temporarily hold all my VMs. Also, since my 2U server doesn't support bifurcation, I had to buy a switching NVMe adapter (I have other devices taking up the other slots, plus I'm leaving 1 or 2 free for future expansion if needed). I picked up a pretty inexpensive card that so far has worked as expected: https://www.amazon.com/dp/B08ZHNTWNG

I also had to buy another 2TB NVMe so my vSAN capacity drives would match. I went with the WD SN850X. It should mix just fine with the 3 other 2TB Solidigm P44 Pro drives, since they all have similar IOPS and throughput.

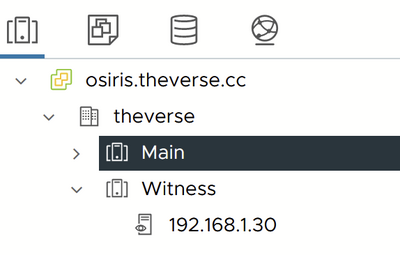

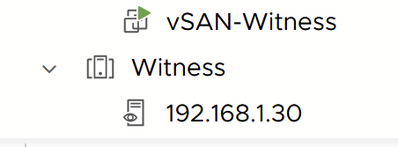

Now that I had the hardware, I needed to deploy the vSAN witness appliance, since I'm using a 2-node environment. I deployed the OVA template to an existing host. The appliance is just a VM that is itself running ESXi. Once it was deployed, I created a new cluster in my existing environment for it (since the Witness cannot be in the same cluster as the vSAN nodes). You can see in the screenshot I attached that I have a "Main" cluster and a "Witness" cluster under my "theverse" datacenter. The Witness appliance is just a VM called "vSAN-Witness". I then connected to it as a host in vCenter and put it in the "Witness" cluster.

After all the VMs were moved to temporary datastores, I deleted the old datastores to free up the 4 NVMe drives. Next, you run through the vSAN setup wizard (very easy), select the witness host (192.168.1.30 in my case), then select the disks. For my implementation, I used the 4 NVMe drives as the capacity tier, and each host also had an NVMe Optane drive to use as a cache device. Disks for each tier are required. The cache tier *must* be flash.
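
If you're wondering how much space a layout like this actually gives you, here's a rough sketch of the math. It assumes a 2-node cluster mirroring every object across both hosts (RAID-1 with one failure to tolerate), and that the four 2TB capacity drives are split two per host. The cache drives don't count toward capacity, and the sketch ignores metadata overhead and the free space vSAN wants you to keep, so treat the result as an upper bound. The function and variable names are just made up for illustration.

```python
# Rough usable-capacity estimate for a 2-node mirrored vSAN cluster.
# Assumptions (not vSAN-reported numbers): RAID-1 mirroring across the
# two hosts, two 2 TB capacity drives per host, no overhead or slack.

def usable_mirrored_tb(capacity_per_host_tb):
    """Return approximate usable TB when every object is mirrored on both hosts."""
    raw_per_host = [sum(drives) for drives in capacity_per_host_tb.values()]
    # Each host holds a full copy of the data, so the smaller host is the limit.
    return min(raw_per_host)

# Capacity-tier drives only; cache devices are intentionally left out.
layout = {"host1": [2.0, 2.0], "host2": [2.0, 2.0]}

raw_tb = sum(sum(drives) for drives in layout.values())
usable_tb = usable_mirrored_tb(layout)

print(f"Raw capacity: {raw_tb} TB")
print(f"Usable with mirroring (upper bound): {usable_tb} TB")
```

The same function also shows why matching drives matter: with a 2TB + 1TB pair on one host, the mirrored capacity would be capped by that smaller host.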

Then VMware does its thing and creates the datastore, and boom, you're in business. I had to redo my setup a few times because I was silly and tried to do one host at a time (see "impatient" in post title), since moving VMs around was taking a long time.

Next I just had to move all the VMs onto the new vSAN datastore and I'm good to go!

Future steps: I will probably look into adding a 3rd host for better fault tolerance. I may try a mini PC with plenty of storage but low compute, just for the capacity.

Anyways, these are my random, unorganized thoughts on the process. If you have any questions let me know!