If you have not heard about a tool called n8n, please read this blog post. It is a phenomenal tool that opens up a lot of possibilities for AI agents and other workflows that would be much more complicated to build otherwise. One of the coolest things about n8n is that it is offered as a self-hosted community edition. I am going to show you how to self-host it using Docker Compose on a standalone host or even with Docker Swarm. Let’s dive in.

What is n8n?

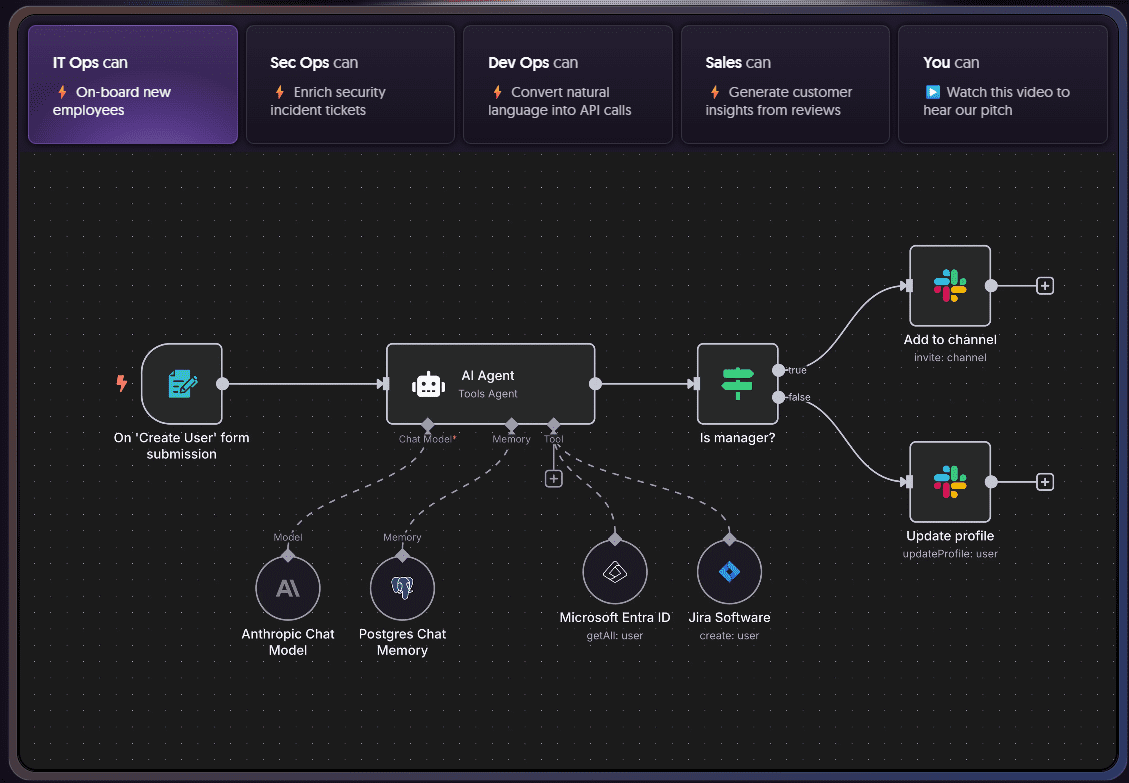

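n8n is a self-hostable workflow automation tool that lets you visually connect and orchestrate over 200 services. Strictly speaking, it is not open source: its community edition is source-available under n8n’s “fair-code” Sustainable Use License. The services you can use range from HTTP endpoints and SSH to Slack, Prometheus, and GitLab.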

The powerful part is its drag-and-drop interface, which lets you build these integrations and workflows on a canvas and run them on a schedule or in event-driven pipelines. I am really excited about what this tool can do, as I think it will help bring AI into the home lab, and even production environments, in a practical way: getting it out of the “chat bubble” and actually doing work for us.

Features of n8n

As most of you know, I use CI/CD tools like GitLab heavily in the home lab. This has been super powerful for DevOps processes and building containers. However, CI/CD pipelines are not always the best fit for other types of processes, such as integrating with other services the way a Zapier-like solution does.

Here is what I like about n8n:

- Source-available & Self-Hosted – I like full control over my data and infrastructure. n8n’s self-hosted Docker image means I can run it on my NAS or a small VM without worrying about cloud outages or egress fees.

- Low-Code visual editor – Designing workflows in a drag-and-drop interface reduces the time I spend debugging typos in code. When I do need custom logic, the Code node (called the Function node in older versions) lets me write JavaScript for advanced transformations.

- Extensive integrations – Out of the box, n8n provides over 200 nodes for services like Prometheus, Slack, GitLab, Webhooks, and more. This allows me to glue together monitoring, notifications, and configuration management without writing custom connectors.

- Active community and extensibility – The n8n community regularly publishes new nodes, and the API is well-documented if I ever need to write my own integration.

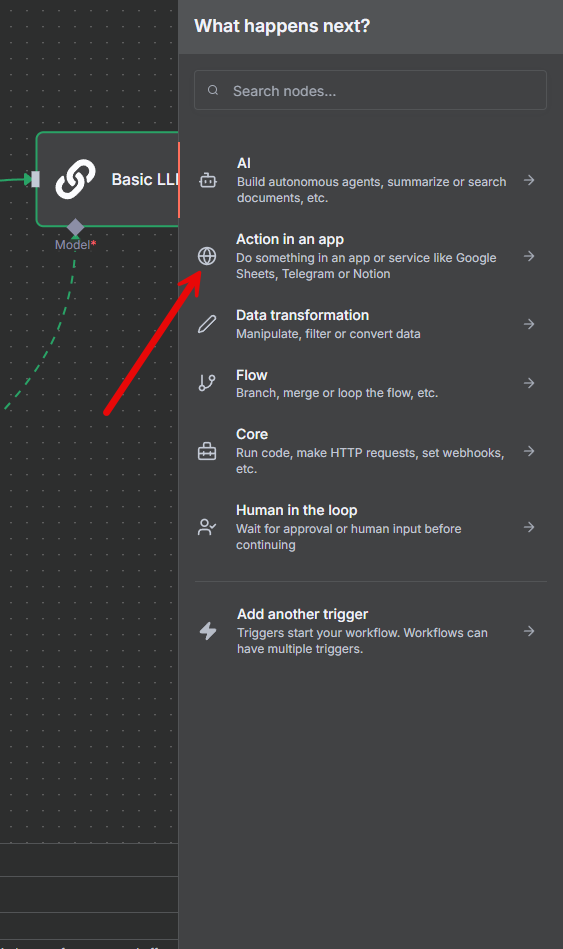

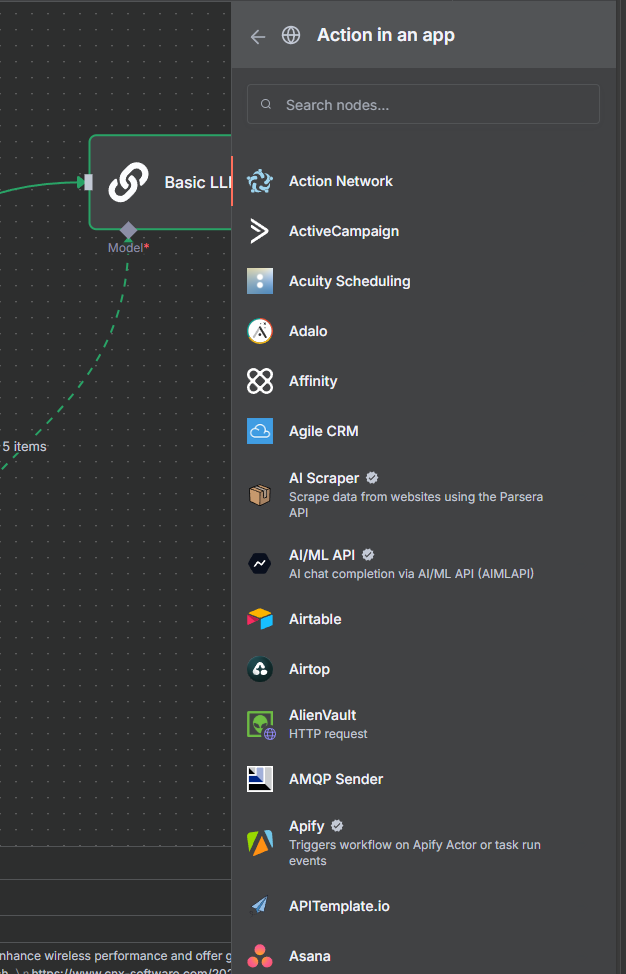

App integrations

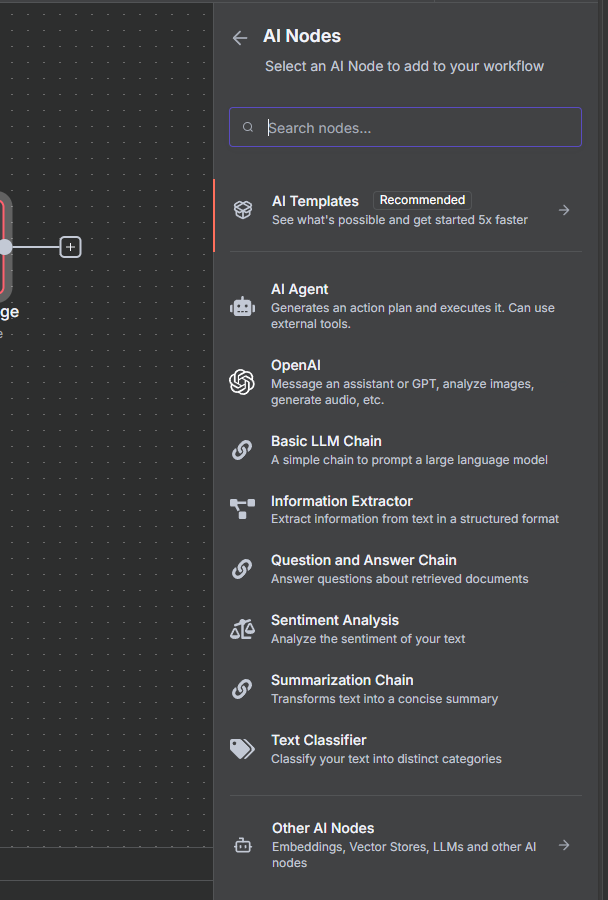

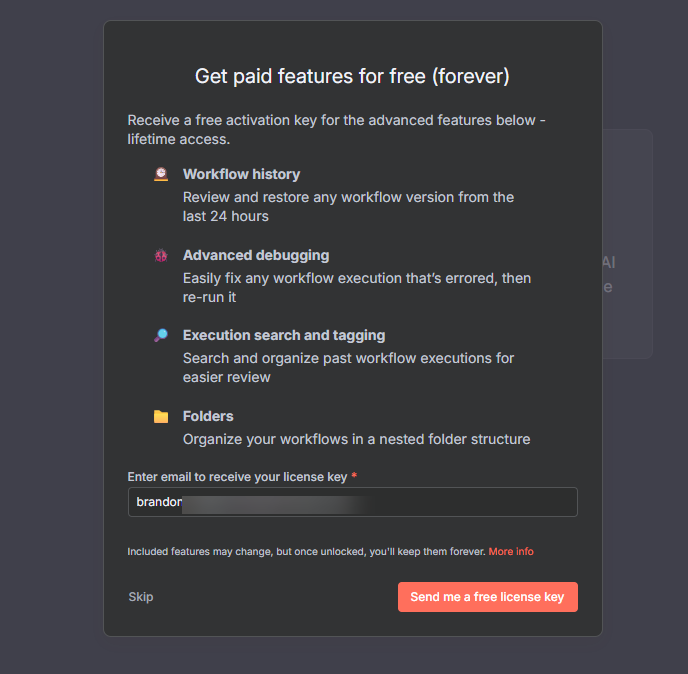

I think by far one of the most powerful capabilities of n8n is the app integrations it has out of the box. When you create a workflow, you can see these by opening the nodes panel.

Here you will see the 200+ (and growing) app integrations you can connect to.

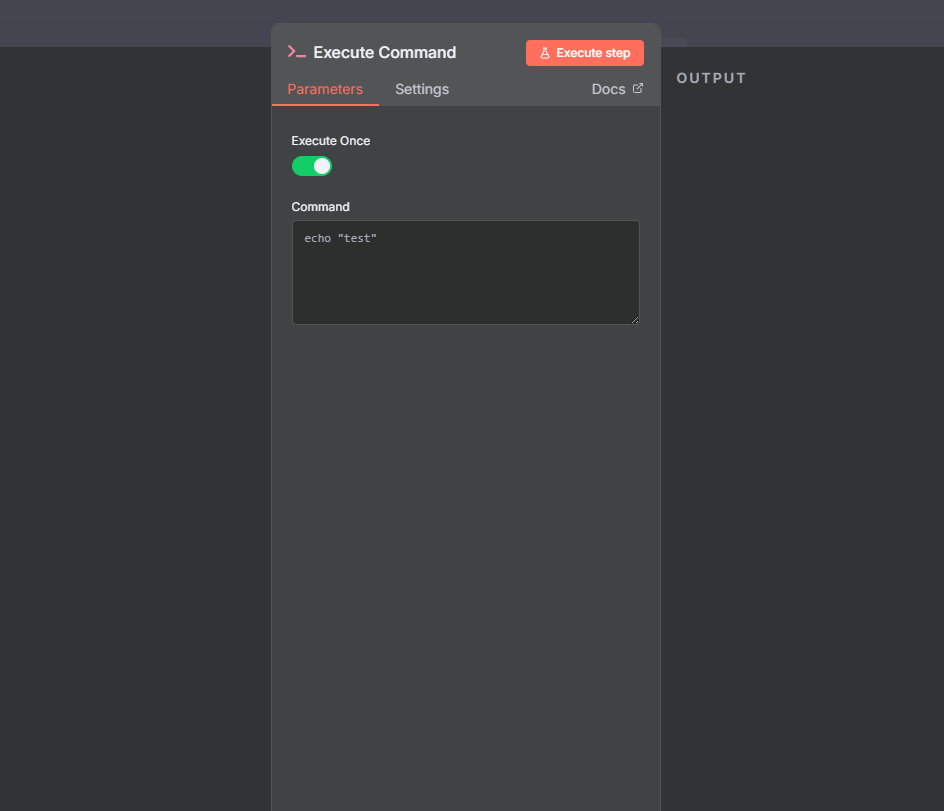

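Below is just one example of an interesting node for home labs: the Execute Command node. Think ping, ip, nslookup, telnet, and so on. Any of the command-line tools you are used to running, you can run here. Keep in mind that when n8n runs in Docker, these commands run inside the n8n container rather than on the host, so only the tools available in that image can be used.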

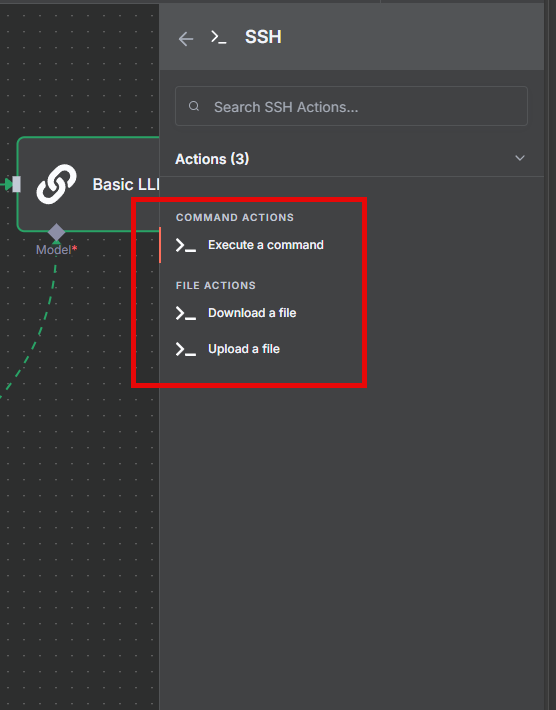
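As a rough sketch of the kind of command you might run with the Execute Command node, here is a small shell function that reports whether each host answers a single ping. The function name and the hosts in the usage line are hypothetical, and because the node runs inside the n8n container, it assumes ping is available there.

```shell
# Report reachability for each host passed as an argument, one line per host.
# Runs inside the n8n container, so it can only use tools that image provides.
check_hosts() {
  for host in "$@"; do
    # One echo request with a 2-second timeout (Linux/BusyBox -W semantics).
    if ping -c 1 -W 2 "$host" >/dev/null 2>&1; then
      echo "$host up"
    else
      echo "$host down"
    fi
  done
}
```

In the node you would define the function and then call it, for example `check_hosts nas.lab.local 192.168.1.1` (placeholder hosts); the next node can then look for lines ending in "down".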

Another interesting option, I think, is the SSH node. As you can see below, you can execute a command, download a file, upload a file, and so on. So you can essentially do anything you could do by SSH’ing into a node.

AI is built-in

One of the great things you will see about n8n is that it lets you easily integrate with popular AI solutions in the cloud like ChatGPT and Gemini, but also your own Ollama instance! Very cool.

Downloadable templates

Another really great thing about n8n is that its site offers thousands of premade workflow templates that others have built. These take the heavy lifting out of more complex workflows, since someone has already built them out for you. Don’t reinvent the wheel if you don’t have to.

Installing n8n using Docker Compose

OK, so I have worked out the Docker Compose code, including a PostgreSQL backend container, so you don’t have to. You will need three files in the same folder: docker-compose.yml, init-data.sh, and .env. The Compose file pulls its settings from the .env file, so just replace the example values there with your own. Let’s look at the Compose code first; save it as docker-compose.yml.
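One value in the .env file you should not make up by hand is N8N_ENCRYPTION_KEY. n8n uses it to encrypt the credentials it stores, so it should be long and random, and it must not change once credentials exist, or n8n can no longer decrypt them. Here is a minimal sketch for generating one; the function name is just for illustration, and it prefers openssl but falls back to /dev/urandom.

```shell
# Print a random 32-byte key as 64 hex characters for N8N_ENCRYPTION_KEY.
generate_n8n_key() {
  if command -v openssl >/dev/null 2>&1; then
    openssl rand -hex 32
  else
    # Fallback without openssl: hex-encode 32 bytes read from /dev/urandom.
    od -An -N32 -tx1 /dev/urandom | tr -d ' \n'
  fi
}
```

Run `echo "N8N_ENCRYPTION_KEY=$(generate_n8n_key)"` once and paste the result into .env in place of the placeholder value.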

docker-compose.yml

```yaml
version: "3.8"

services:
  n8n:
    image: n8nio/n8n:latest
    user: "1000:1000"
    restart: unless-stopped
    env_file:
      - ./.env
    environment:
      - DB_TYPE
      - DB_POSTGRESDB_HOST
      - DB_POSTGRESDB_PORT
      - DB_POSTGRESDB_DATABASE
      - DB_POSTGRESDB_USER
      - DB_POSTGRESDB_PASSWORD
      - N8N_HOST
      - N8N_PORT
      - N8N_PROTOCOL
      - WEBHOOK_URL
      - N8N_ENCRYPTION_KEY
      - GENERIC_TIMEZONE
      - TZ
    ports:
      - "5678:5678"
    depends_on:
      # Wait until the Postgres healthcheck passes before starting n8n
      postgres:
        condition: service_healthy
    healthcheck:
      test: ["CMD-SHELL", "wget --quiet --tries=1 --spider http://localhost:5678/healthz || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 60s
    volumes:
      - ./data:/home/node/.n8n
      - ./files:/files

  postgres:
    image: postgres:16
    restart: unless-stopped
    env_file:
      - ./.env
    environment:
      - POSTGRES_DB
      - POSTGRES_USER
      - POSTGRES_PASSWORD
      - POSTGRES_NON_ROOT_USER
      - POSTGRES_NON_ROOT_PASSWORD
    volumes:
      - ./postgres-data:/var/lib/postgresql/data
      - ./init-data.sh:/docker-entrypoint-initdb.d/init-data.sh
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -h localhost -U ${POSTGRES_NON_ROOT_USER} -d ${POSTGRES_DB}"]
      interval: 5s
      timeout: 5s
      retries: 10

networks:
  default:
    name: n8n_network
```

You will also need the init-data.sh script that the docker-compose.yml file mounts into the Postgres container. Postgres only runs it the first time the container starts with an empty ./postgres-data folder. Here are the contents of that file:

init-data.sh:

```shell
#!/bin/bash
set -e

# Create the non-root n8n user and grant it access to the n8n database.
# (The database itself is created by the postgres image from POSTGRES_DB.)
psql -v ON_ERROR_STOP=1 --username "$POSTGRES_USER" --dbname "$POSTGRES_DB" <<-EOSQL
CREATE USER $POSTGRES_NON_ROOT_USER WITH PASSWORD '$POSTGRES_NON_ROOT_PASSWORD';
GRANT ALL PRIVILEGES ON DATABASE $POSTGRES_DB TO $POSTGRES_NON_ROOT_USER;
GRANT ALL PRIVILEGES ON SCHEMA public TO $POSTGRES_NON_ROOT_USER;
GRANT CREATE ON SCHEMA public TO $POSTGRES_NON_ROOT_USER;
ALTER USER $POSTGRES_NON_ROOT_USER CREATEDB;
ALTER DEFAULT PRIVILEGES IN SCHEMA public GRANT ALL ON TABLES TO $POSTGRES_NON_ROOT_USER;
ALTER DEFAULT PRIVILEGES IN SCHEMA public GRANT ALL ON SEQUENCES TO $POSTGRES_NON_ROOT_USER;
EOSQL
```

.env:

```shell
# Postgres core settings
POSTGRES_DB=n8n
POSTGRES_USER=postgres
POSTGRES_PASSWORD=SuperSecretPass

# Non-root n8n user for Postgres
POSTGRES_NON_ROOT_USER=n8n
POSTGRES_NON_ROOT_PASSWORD=AnotherSecretPass

# n8n Database connection (mirrors the Postgres vars)
DB_TYPE=postgresdb
DB_POSTGRESDB_HOST=postgres
DB_POSTGRESDB_PORT=5432
DB_POSTGRESDB_DATABASE=${POSTGRES_DB}
DB_POSTGRESDB_USER=${POSTGRES_NON_ROOT_USER}
DB_POSTGRESDB_PASSWORD=${POSTGRES_NON_ROOT_PASSWORD}

# n8n application settings
N8N_HOST=n8n.example.com
N8N_PORT=5678
N8N_PROTOCOL=https
WEBHOOK_URL=https://n8n.example.com/

# Encryption & security
N8N_ENCRYPTION_KEY=your-generated-32+char-key

# Timezone
GENERIC_TIMEZONE=America/Chicago
TZ=America/Chicago
```

Once you have created all three files, just run the command:

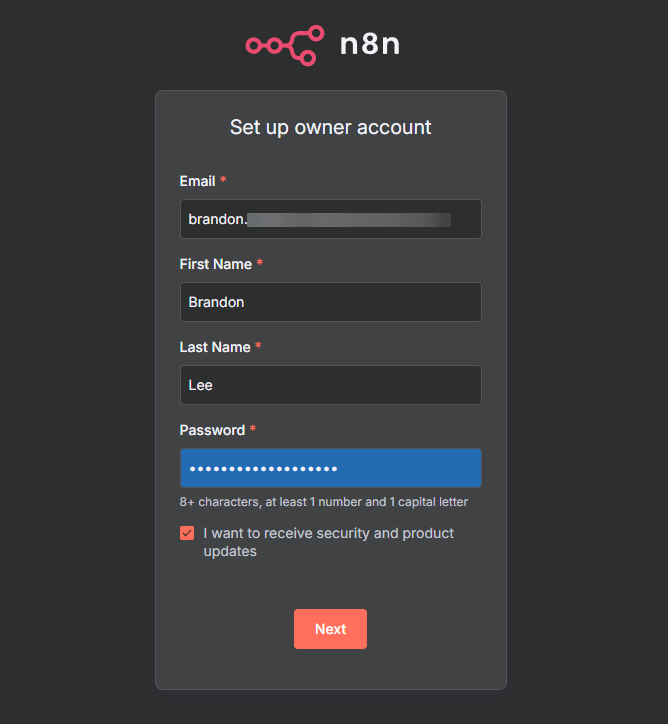

```shell
docker compose up -d
```

After bringing up the containers, open n8n in your browser and you will see the Set up owner account page. Enter a valid email address, first name, and last name, and create a password. You can also opt in to security and product update messages.

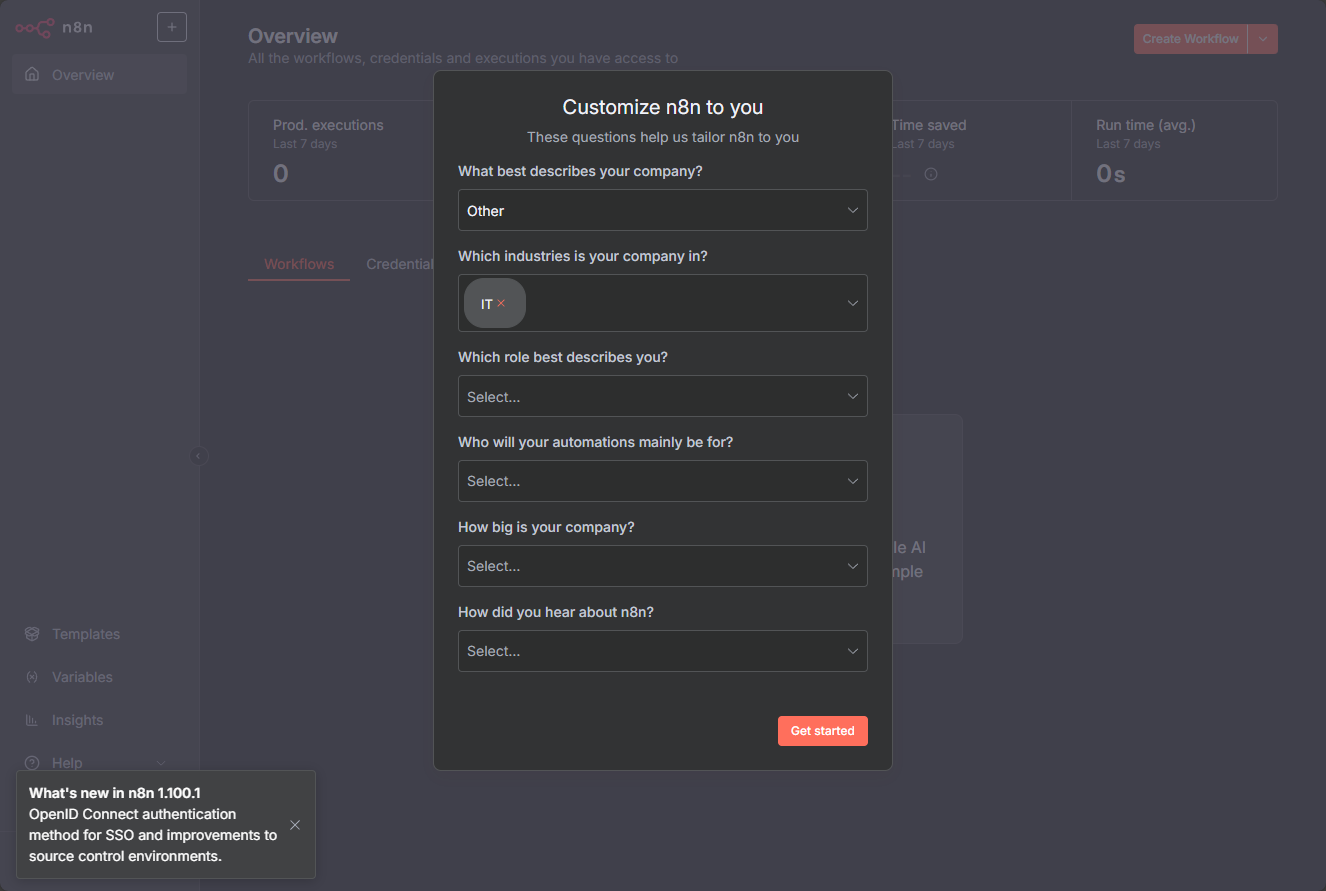

Next, you can tailor n8n to your needs with this short form.

Also, something I think is pretty cool is that you can unlock some paid features for free, forever. You will get a license key that enables the features listed in the dialog box, like advanced debugging, which is great. Just enter your email address at the bottom of the form and click Send me a free license key.
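If the Set up owner account page never loads, running docker compose ps in the same folder shows whether both containers are up and healthy, and docker compose logs n8n usually tells you why n8n is not starting. To script that check, here is a minimal sketch that polls the /healthz endpoint the Compose healthcheck already uses. The function name and its defaults (localhost:5678 from the port mapping, 30 attempts two seconds apart) are illustrative choices.

```shell
# Poll n8n's /healthz endpoint until it responds or the attempts run out.
# Usage: wait_for_n8n [url] [attempts] [seconds-between-attempts]
wait_for_n8n() {
  local url="${1:-http://localhost:5678/healthz}" attempts="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$attempts" ]; do
    # -f fails on HTTP errors; -sS hides the progress bar but keeps error messages.
    if curl -fsS "$url" >/dev/null 2>&1; then
      echo "n8n is up at ${url%/healthz}"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "n8n did not become healthy; try: docker compose logs n8n" >&2
  return 1
}
```

Running `wait_for_n8n` right after `docker compose up -d` gives you a clear success or failure instead of refreshing the browser.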

Example home lab automation workflows

1. Automated commands or file uploads and downloads

We have already highlighted the ability to run commands and SSH into nodes. There is almost no limit to what you can string together into a workflow in the lab environment. And you can start these workflows from different triggers, such as a manual trigger, a schedule trigger, or even a chat trigger. Pretty cool.

Think about the following:

- Trigger on schedule – A Schedule Trigger node (called the Cron node in older versions) fires every night at 2 AM. You could also start the workflow from another action or event you are already handling in n8n.

- Run Backup Script – An SSH node connects to a server and runs `borg create`

- Verify & Prune – An SSH node prunes old backups

- Notify Me – A Slack or email node posts a summary of the backup outcome

- Discord notify – In addition, you can have n8n post the results to your Discord server

This replaces a tangled set of shell scripts and multiple cron entries with a single workflow, which is generally much easier to audit and modify.

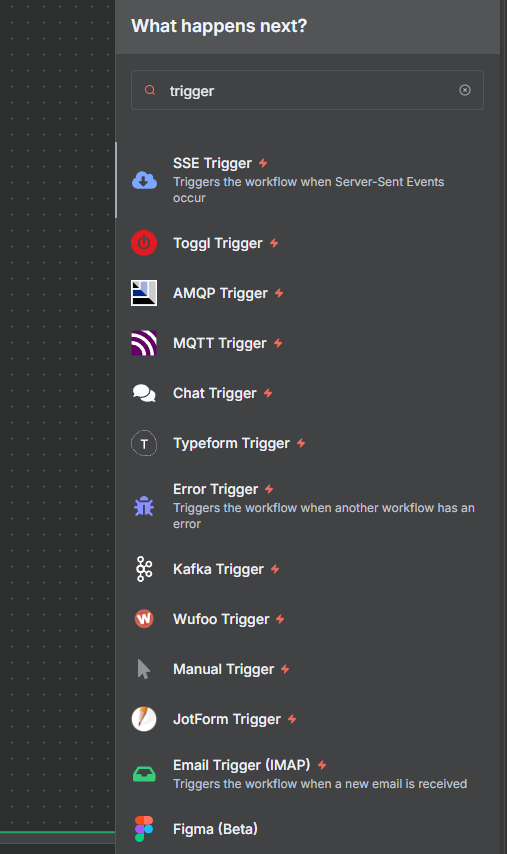
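The SSH nodes in this workflow just need a command to run on the backup server. Here is a minimal sketch of a script they could call. It assumes BorgBackup 1.x command syntax, a repository that already exists (created with borg init), and BORG_PASSPHRASE being provided if the repository is encrypted; the function name, repository path, and retention counts are placeholders.

```shell
# Create a new Borg archive of the given paths, then prune old archives.
# Usage: backup_and_prune REPO PATH...   (Borg 1.x syntax)
backup_and_prune() {
  local repo="$1"
  shift
  # {hostname} and {now} are Borg placeholders that borg itself expands.
  # The prune step only runs if the create step succeeded.
  borg create --stats --compression lz4 "${repo}::{hostname}-{now}" "$@" &&
    borg prune --list --keep-daily 7 --keep-weekly 4 --keep-monthly 6 "$repo"
}
```

If several machines share one repository, you would want to limit pruning to this host’s archives with `--glob-archives '{hostname}-*'`. On Borg 1.2 and later, prune does not free disk space by itself, so follow it with `borg compact`. The script’s exit code tells the notification nodes whether to report success or failure.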

2. Aggregate or summarize tech news

If you run FreshRSS or another RSS tool, one of the coolest things you can do with n8n is pull a list of your recent feed items and then have ChatGPT summarize them for quick digestion or for posting elsewhere. Very cool!

- Trigger a pull from your FreshRSS self-hosted instance

- Limit the number of posts and split them out into individual items

- LLM Chain – send your posts to ChatGPT, Gemini, or even Ollama to summarize

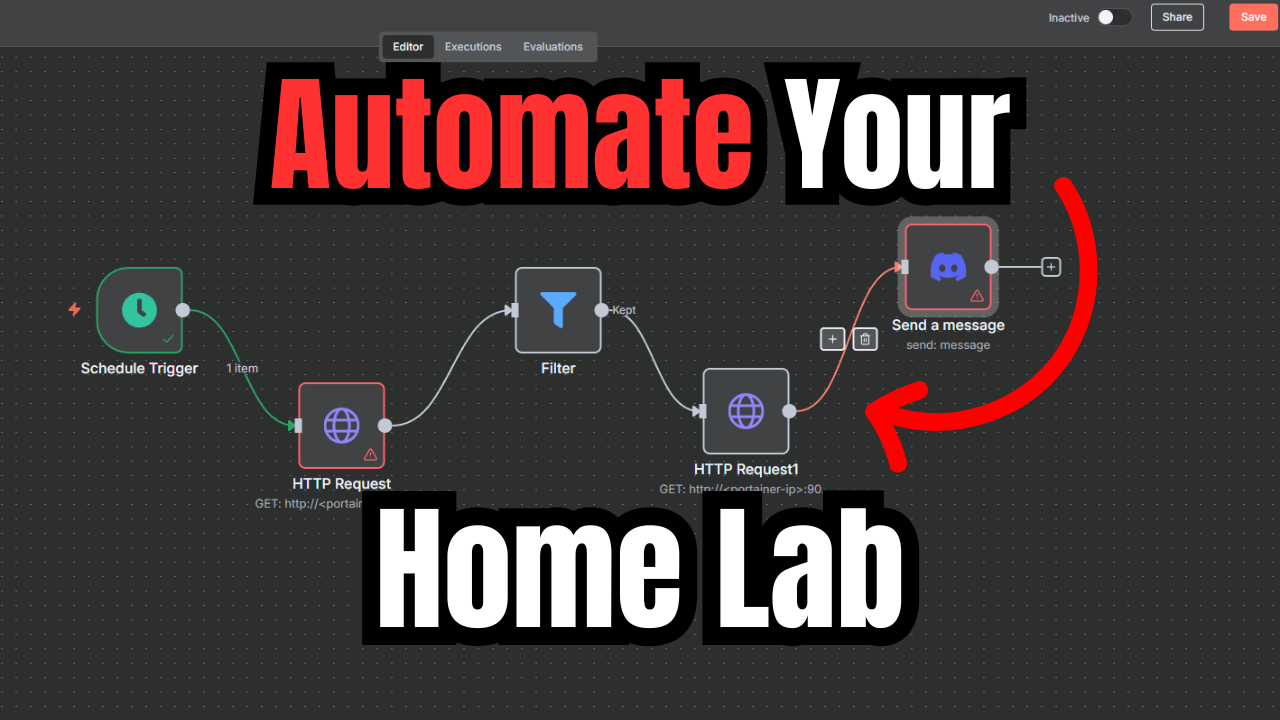

3. Polling internal home lab APIs like Portainer

Think about a solution you run in your home lab, like Portainer, that has an API you can poll or query.

- Polling – An HTTP Request node queries Portainer’s API for container statuses

- Filter – A Code (Function) node filters for containers whose State is “exited”

- Notification – A Telegram or Slack node sends a direct message listing the failed containers and timestamps

You can catch silent failures before they escalate into bigger issues.
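Before building the workflow, it can help to test the polling and filtering steps from a terminal. Here is a minimal curl and jq sketch, assuming Portainer’s Docker API proxy (the same URL format used in the sample workflow later in this post), a Portainer access token sent in the X-API-Key header, and jq installed. PORTAINER_URL, ENDPOINT_ID, PORTAINER_API_KEY, and the function name are placeholders.

```shell
# Placeholder settings: point these at your own Portainer instance.
PORTAINER_URL="${PORTAINER_URL:-http://portainer.lab.local:9000}"
ENDPOINT_ID="${ENDPOINT_ID:-1}"

# Print "name<TAB>status" for every container Docker reports as exited.
# all=true makes the Docker API include stopped containers in the list.
list_exited_containers() {
  curl -fsS -H "X-API-Key: ${PORTAINER_API_KEY:?set PORTAINER_API_KEY first}" \
    "${PORTAINER_URL}/api/endpoints/${ENDPOINT_ID}/docker/containers/json?all=true" |
    jq -r '.[] | select(.State == "exited") | "\(.Names[0] | ltrimstr("/"))\t\(.Status)"'
}
```

Docker’s Status field (for example "Exited (1) 3 hours ago") already carries the exit code and a rough timestamp, which is handy for the notification message.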

4. SSH into your Kubernetes node and have AI look at logs and other health metrics

I think a really exciting use case is combining SSH with Kubernetes: you can SSH into one of your Kubernetes nodes, run kubectl, and then have something like ChatGPT or Gemini check the output for problems with your cluster, so you can do some proactive troubleshooting.

- SSH into your Kubernetes node

- Run kubectl get pods -A

- Look for pods that are crashing or have issues

- Send this to an LLM

- Get notifications in Discord or other means if problems are found
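The command the SSH node runs can pre-filter the output so the LLM only sees the interesting lines. Here is a minimal sketch, assuming kubectl on that node is already configured with access to the cluster. It keeps pods that are not Running or Completed, plus Running pods whose containers are not all ready, and it relies on the default column order of kubectl get pods -A. The function name is hypothetical.

```shell
# List pods that look unhealthy across all namespaces, one line per pod.
# Default columns of `kubectl get pods -A`: NAMESPACE NAME READY STATUS RESTARTS AGE
unhealthy_pods() {
  kubectl get pods -A --no-headers |
    awk '{
      split($3, ready, "/")
      # Flag anything not Running/Completed, or Running with unready containers.
      if (($4 != "Running" && $4 != "Completed") || ($4 == "Running" && ready[1] != ready[2]))
        print $1 "/" $2, "status=" $4, "ready=" $3, "restarts=" $5
    }'
}
```

Empty output means nothing looked wrong, so an IF node can skip the LLM step entirely; otherwise, these lines go into the prompt along with any logs you want the model to review.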

5. Home lab inventory and documentation

You can have n8n run the string of commands or tools you would normally run manually to update your documentation and inventory in the home lab environment.

Think about having it do the following:

- API polling – Query Proxmox and Docker for VM/container details

- Transform – A Code (Function) node formats the data into Markdown

- Git Commit – A Git node commits updates to a documentation repo on GitLab

- Merge – Optionally opens a merge request for review

With this, your documentation repo always reflects the current state of your lab without manual updates.

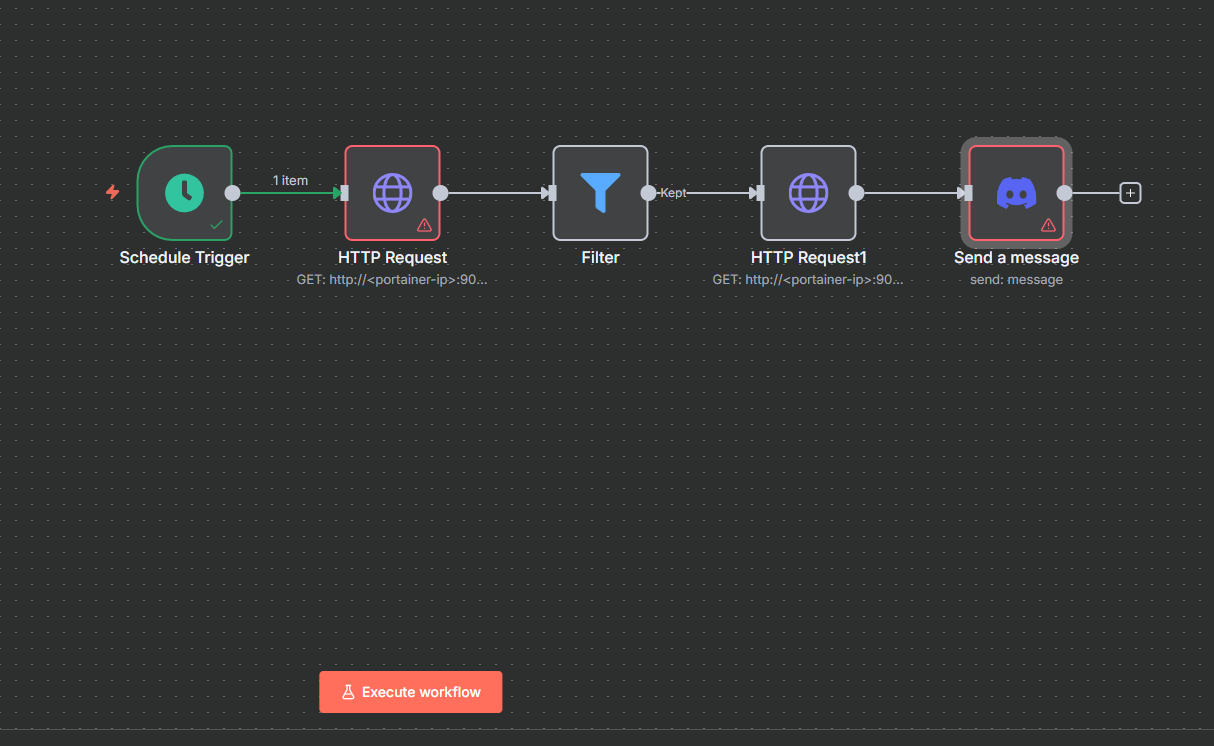
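For the Docker side of the inventory, the Transform step could be as simple as a script, run through an SSH or Execute Command node, that prints a Markdown table for the Git node to commit. Here is a minimal sketch using docker ps with a Go template format string; the function name is hypothetical, and Proxmox data would come from a separate HTTP Request node that is not shown here.

```shell
# Print a Markdown inventory of all containers on this Docker host.
# Uses State rather than Status (which includes uptime) and no timestamp,
# so the file only changes when the inventory itself changes.
docker_inventory_md() {
  printf '## Docker containers on %s\n\n' "$(uname -n)"
  echo '| Container | Image | State |'
  echo '|---|---|---|'
  docker ps --all --format '| {{.Names}} | {{.Image}} | {{.State}} |'
}
```

Keeping the output stable between runs means the Git node only has something to commit, and a merge request only opens, when a container was actually added, removed, or changed state.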

Building a Sample Workflow: Docker Container Restarts

Let’s walk through a simple example: automatically restarting a Docker container if it stops unexpectedly.

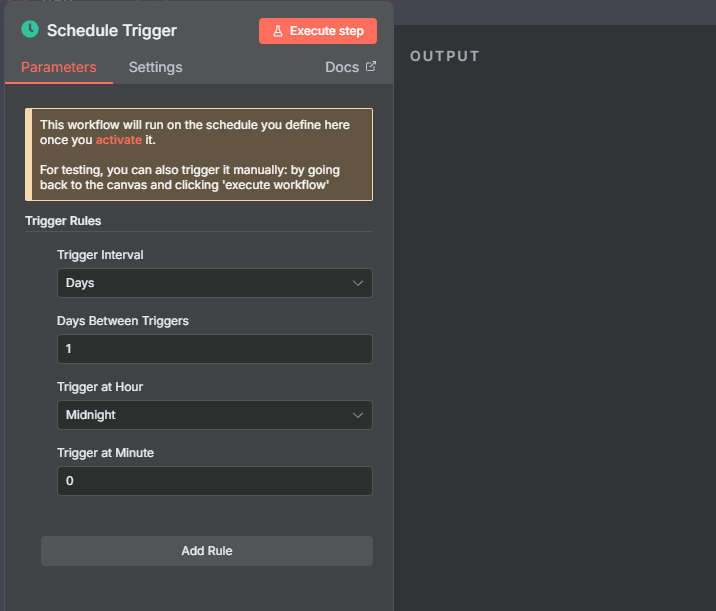

Schedule Trigger – Add a Schedule Trigger node (the Cron node in older n8n versions) set to run every 5 minutes, or at whatever interval you prefer.

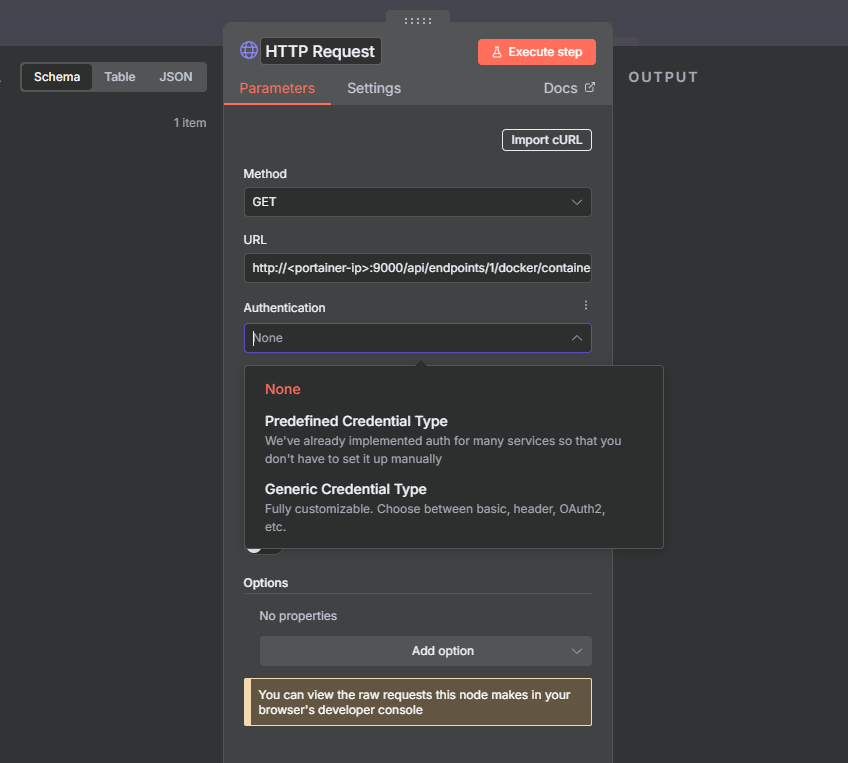

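HTTP Request to Portainer – Configure an HTTP Request node with method GET and the URL http://<portainer-ip>:9000/api/endpoints/1/docker/containers/json?all=true (all=true includes stopped containers). For authentication, send your Portainer API key in an X-API-Key header, for example with a Header Auth credential.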

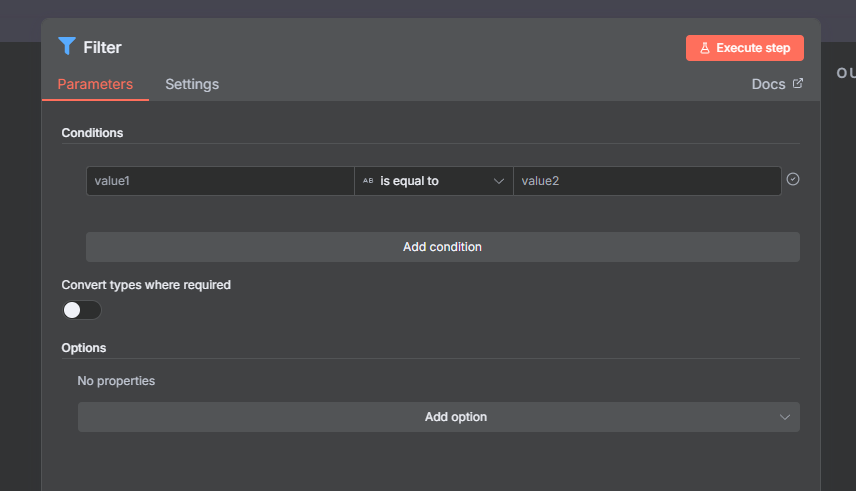

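Code Node – Add a Code node (the Function node in older n8n versions) that filters for containers with State === 'exited' and returns one item per container, with containerId and containerName fields, so the next node runs once for each stopped container.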

HTTP Request to Restart – Connect that node’s output to another HTTP Request node configured with method POST and the URL http://<portainer-ip>:9000/api/endpoints/1/docker/containers/{{ $json.containerId }}/restart, using the same API key header.

Notification – Finally, you can send something to Discord or elsewhere: “Restarted container {{ $json.containerName }} at {{ $now }}.” Below is an overview of what the n8n workflow automation might look like.
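For comparison, or for testing before you build the workflow, here is a minimal shell sketch of the same poll, filter, and restart loop. Like the workflow, it assumes Portainer’s Docker API proxy, an access token in the X-API-Key header, and jq; the variable and function names are placeholders. Docker’s restart endpoint also starts a container that is stopped, which is why it works for exited ones.

```shell
# Placeholder settings: point these at your own Portainer instance.
PORTAINER_URL="${PORTAINER_URL:-http://portainer.lab.local:9000}"
ENDPOINT_ID="${ENDPOINT_ID:-1}"

# Restart every exited container and print one notification line per restart.
restart_exited_containers() {
  local base="${PORTAINER_URL}/api/endpoints/${ENDPOINT_ID}/docker"
  # Poll and filter: list all containers, keep the exited ones as "id name" lines.
  curl -fsS -H "X-API-Key: ${PORTAINER_API_KEY}" "${base}/containers/json?all=true" |
    jq -r '.[] | select(.State == "exited") | "\(.Id) \(.Names[0] | ltrimstr("/"))"' |
    while read -r id name; do
      # Restart, then print the message a notification node would send.
      curl -fsS -X POST -H "X-API-Key: ${PORTAINER_API_KEY}" \
        "${base}/containers/${id}/restart" >/dev/null &&
        echo "Restarted container ${name} at $(date '+%Y-%m-%d %H:%M:%S')"
    done
}
```

In practice you will probably want to skip containers that are supposed to stay stopped, such as one-off jobs, for example by filtering on a label in the jq expression or in the Code node.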

Tips and tricks you might consider with n8n

Here is a quick list of things to consider when working with n8n:

- Save often – One of the things with n8n that I don’t particularly like is that you have to manually save your workflow. If you spend 20 minutes editing your workflow, and then accidentally click out of it and don’t save, it is gone. It would be nice if it had some type of auto save functionality. Just be aware of this and save often.

- Use Environment Variables – Keep secrets in n8n credentials and values like URLs in environment variables (available in expressions as $env) instead of hard-coding them in nodes

- Version Control – Export your workflows to JSON files, commit them to Git, and treat them like code

- Use templates – Look for templates on the n8n site, as you may find a starting point for the workflow you want to build

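- Backup n8n Data – With the PostgreSQL setup above, your workflows and credentials live in the database (the ./postgres-data folder), while the ./data folder mounted at /home/node/.n8n holds n8n’s local settings. Be sure to back up both, along with your .env file: without N8N_ENCRYPTION_KEY, your saved credentials cannot be decrypted.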
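The version control and backup tips can be combined into one small script run from the folder that holds docker-compose.yml. This is a minimal sketch that assumes the Compose file from this post and a ./files folder writable by UID 1000 (the user the n8n container runs as); the function name and backup layout are hypothetical. It exports each workflow as JSON into the ./files bind mount, ready to commit to Git, dumps PostgreSQL with pg_dump rather than copying ./postgres-data while the database is running, and archives ./data together with .env.

```shell
# Back up the n8n stack defined in docker-compose.yml (run from that folder).
# Usage: backup_n8n [destination-dir]
backup_n8n() {
  local dest="${1:-./backups/$(date +%Y%m%d-%H%M%S)}"
  mkdir -p "$dest" || return 1

  # 1. Export each workflow to its own JSON file under ./files/workflows on the host
  #    (./files is bind-mounted at /files). Commit that folder to Git.
  docker compose exec -T n8n mkdir -p /files/workflows || return 1
  docker compose exec -T n8n \
    n8n export:workflow --all --separate --output=/files/workflows/ || return 1

  # 2. Dump the database that holds workflows, credentials, and executions.
  #    Single quotes make the variables expand inside the postgres container.
  docker compose exec -T postgres \
    sh -c 'pg_dump -U "$POSTGRES_USER" -d "$POSTGRES_DB"' > "$dest/n8n-db.sql" || return 1

  # 3. Archive n8n's config folder and .env (which holds N8N_ENCRYPTION_KEY).
  tar -czf "$dest/n8n-config.tar.gz" data .env
}
```

The -T flag disables the pseudo-terminal for docker compose exec, so the SQL dump redirected to a file is not mangled by terminal line endings.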

Wrapping up

The possibilities with n8n are really endless. I think we are seeing a paradigm shift with AI and tools like n8n that will allow us to have agents do a lot of the manual grunt work that we have been used to doing in the past. If you are like me, there are better things you would rather be doing than looking through logs and checking out container or pod health. Let me know in the comments if you are using this already.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.

n8n is not open source. It has a community edition on Github. This is a version that does not support all functions and is tied to an n8n account.

OpenSourceGuy,

That’s a good call out. It looks like it is a “Sustainable Use License” that lets you use it, modify it, and distribute it to others as long as you don’t charge for it. It’s a good distinction to make, and a good shout. For most people, though, I think this will be what they are looking for in a lab environment.

Brandon