There are definitely tools that I keep coming back to in the home lab, and they now run most of my lab environment. I use them to build CI/CD pipelines, run AI models, and orchestrate containers. I’ve worked on a ton of projects this year across Kubernetes, Docker Swarm, self-hosted LLMs, GitLab, monitoring stacks, and more. In this post, I want to share my favorite home lab tools of 2025. These projects have genuinely changed how I operate my home lab and how I build out automation for clients.

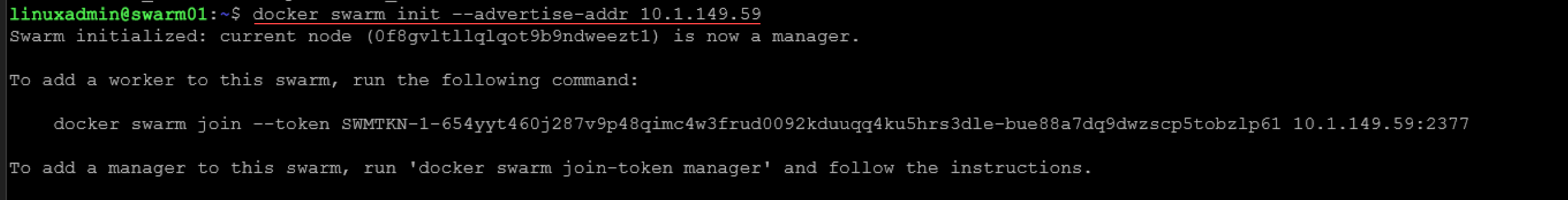

1. Docker Swarm + MicroCeph: The Perfect Lightweight HA Stack

I’ve gone deep into Docker Swarm this year, not just as a learning tool but as a solid platform for hosting production workloads. But things really came together when I added MicroCeph, Canonical’s minimal Ceph implementation, to the cluster. It gave me HA storage for containers without the headache of managing a full-blown Ceph cluster.

Why I love it:

- Seamless integration with Docker volumes

- Works great with Portainer for visual management

- Easy to replicate across three nodes with Keepalived for VIP failover
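Three nodes is the practical minimum for this kind of stack because both Swarm managers (which use Raft) and Ceph monitors only keep working while a strict majority of their members is online. A quick Python sketch of that majority arithmetic shows why two nodes buy you no fault tolerance while three survive one failure. The function names are just illustrative.

```python
# Majority-quorum arithmetic for a small HA cluster.
# Docker Swarm managers (Raft) and Ceph monitors both need a strict
# majority of members online to keep making decisions.


def quorum_size(members: int) -> int:
    """Smallest strict majority of `members` voters."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can go offline while a majority remains."""
    return members - quorum_size(members)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} node(s): quorum={quorum_size(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Running it prints one line per cluster size. Three and four nodes both tolerate exactly one failure, which is why odd manager counts are the usual recommendation.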

I wrote a full walkthrough on setting up Docker Swarm with MicroCeph and Portainer. Together, they make a real alternative to K3s or full Kubernetes stacks in lightweight labs.

2. Ollama + OpenWebUI: Local LLMs Done Right

The hype around generative AI hasn’t slowed in 2025. And thanks to Ollama and OpenWebUI, I’ve been able to run powerful local LLMs like Gemma, Phi, and even Mixtral right on my Proxmox boxes.

What stands out:

- OpenWebUI provides a clean, ChatGPT-like experience

- Ollama simplifies downloading, quantizing, and serving models

- GPU passthrough in Proxmox VMs or LXCs just works with the right drivers

I now use this setup daily for brainstorming, writing prompts, and testing ideas without paying API fees. It’s fast, private, and super extensible. I even wrote about how to host your own GPT using Docker and Proxmox with Ollama as the backend.
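If you want to script against the same backend instead of going through OpenWebUI, Ollama exposes a REST API on port 11434 by default. Below is a minimal Python sketch using only the standard library. It assumes Ollama is running on the local host and that you have already pulled the model you name; the `gemma3` tag in the usage line is only an example, and the helper names are my own.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default port on this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt and return the model's complete text reply."""
    with urllib.request.urlopen(build_request(model, prompt, url), timeout=timeout) as resp:
        body = json.load(resp)
    # With streaming disabled, the whole answer arrives in the "response" field.
    return body["response"]
```

Usage would look like `print(generate("gemma3", "Give me three ideas for a home lab project."))`. Setting `"stream": False` makes Ollama return a single JSON object instead of a stream of partial chunks, which keeps the client code short.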

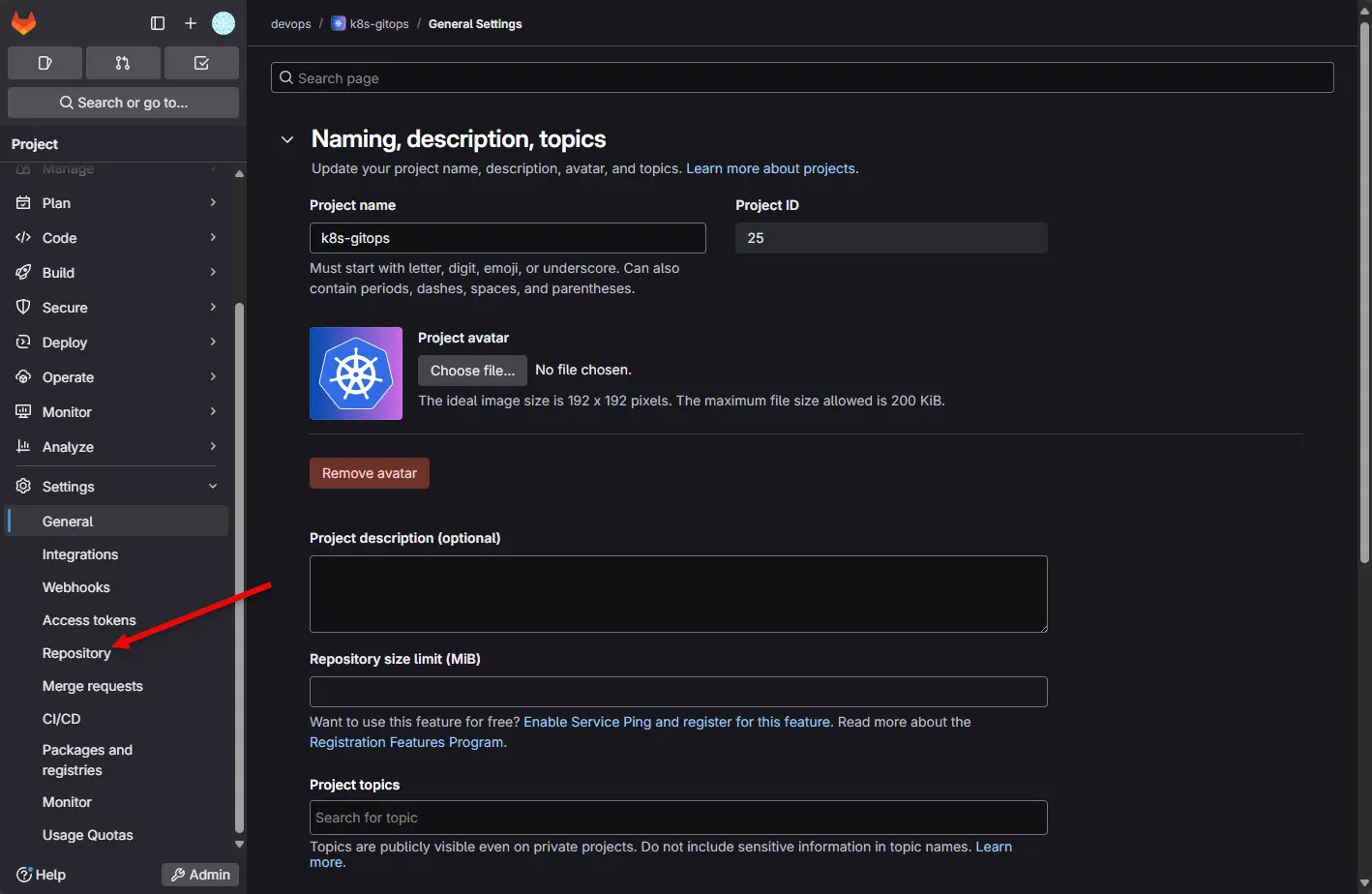

3. GitLab + GitLab Runner on CephFS

I’ve had a love-hate relationship with GitLab in the past, mostly due to how resource-intensive it can be. But after setting it up on Docker Swarm with CephFS-backed volumes, it’s finally clicked into place and is definitely one of my favorite home lab tools of 2025.

What I like now:

- CephFS gives shared storage for GitLab, runner logs, and builds

- Docker-based deployment makes it easier to update and maintain

- I finally solved the permission issues and 500 errors plaguing my pipelines

This setup now runs all my automated container builds and CI workflows. Plus, I’ve started documenting it in an upcoming post titled “Optimizing GitLab Performance with Docker Swarm and CephFS.”
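As a rough illustration of the layout, here is a Python sketch that emits a minimal single-replica GitLab CE stack definition with its config, logs, and data directories bind-mounted from CephFS. The container paths follow GitLab’s Docker documentation; the `/mnt/cephfs/gitlab` mount point, the hostname, and the host port mapping are hypothetical placeholders, and this is not my exact production stack.

```python
import json

# Hypothetical CephFS mount point; assumes CephFS is mounted at the
# same path on every Swarm node so the task can land on any of them.
CEPHFS_ROOT = "/mnt/cephfs/gitlab"


def gitlab_stack(cephfs_root: str = CEPHFS_ROOT, hostname: str = "gitlab.lab.local") -> dict:
    """Return a minimal Swarm stack definition for GitLab CE on CephFS bind mounts."""
    return {
        "version": "3.8",
        "services": {
            "gitlab": {
                "image": "gitlab/gitlab-ce:latest",
                "hostname": hostname,  # hypothetical lab hostname
                # Host port 2222 for Git over SSH so it does not clash with the node's own sshd.
                "ports": ["80:80", "443:443", "2222:22"],
                "volumes": [
                    f"{cephfs_root}/config:/etc/gitlab",
                    f"{cephfs_root}/logs:/var/log/gitlab",
                    f"{cephfs_root}/data:/var/opt/gitlab",
                ],
                # One replica: Omnibus GitLab should not share its data dir with a second copy.
                "deploy": {"replicas": 1, "restart_policy": {"condition": "on-failure"}},
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(gitlab_stack(), indent=2))
```

You could save the output to a file and deploy it with `docker stack deploy -c gitlab-stack.json gitlab`. YAML parsers generally accept JSON, so this should work as-is, though converting it to YAML is the more conventional choice.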

You can find the rest of my GitLab articles by searching the site for “gitlab.”

4. Netdata: Real-Time Monitoring That Just Works

I’ve tried Prometheus + Grafana stacks plenty of times, and while powerful, they can be overkill for smaller environments. Enter Netdata, which I now run both in containers and directly on hosts. It’s become my go-to for real-time visibility into my nodes, services, and even Ceph status.

Why it’s a win:

- Instant visualizations, no dashboard building needed

- Netdata Cloud for multi-node views, with a free tier and a Homelab plan ($90 a year)

- Built-in support for exporting metrics to Prometheus if needed
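On the Prometheus point, the Netdata agent serves its metrics in Prometheus text format at `/api/v1/allmetrics?format=prometheus` on port 19999, which is what you would point a Prometheus scrape job at. The Python sketch below fetches that page and does a deliberately simplified parse into (name, labels, value) tuples. It skips comments and ignores timestamps, and it is not a complete implementation of the exposition format; the function names are hypothetical.

```python
import re
import urllib.request

# Matches key="value" pairs inside a {...} label block (escapes left as-is).
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def fetch_netdata_metrics(host: str = "localhost", port: int = 19999, timeout: float = 10.0) -> str:
    """Download the agent's metrics in Prometheus text format."""
    url = f"http://{host}:{port}/api/v1/allmetrics?format=prometheus"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def parse_prometheus_text(text: str) -> list[tuple[str, dict[str, str], float]]:
    """Simplified parser: returns (metric name, labels, value) per sample line."""
    samples = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # skip blanks, HELP and TYPE lines
            continue
        if "{" in line:
            name, rest = line.split("{", 1)
            label_part, rest = rest.rsplit("}", 1)
            labels = dict(LABEL_RE.findall(label_part))
        else:
            name, _, rest = line.partition(" ")
            labels = {}
        value = float(rest.split()[0])  # first field is the value; a timestamp may follow
        samples.append((name.strip(), labels, value))
    return samples
```

A call such as `parse_prometheus_text(fetch_netdata_metrics("pve1"))` (with `pve1` standing in for one of your hosts) gives you plain Python data you can filter by chart or dimension label.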

The Docker-based version works great for quick testing, but I also deployed the full agent using the Netdata kickstart script for deeper monitoring. It even picks up Kubernetes metrics if you’re running MicroK8s or K3s.
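For quick checks without any dashboard, the agent’s REST API can also return a chart’s recent values as JSON. This sketch assumes a reachable agent on the default port and that the `format=json` response carries a `labels` list (with the time column first) and a `data` list of rows. `system.cpu` is a standard chart on Linux hosts; the function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def data_url(host: str = "localhost", chart: str = "system.cpu", seconds: int = 60, port: int = 19999) -> str:
    """URL for the last `seconds` of a chart from the Netdata agent's v1 data API."""
    query = urllib.parse.urlencode({"chart": chart, "after": -seconds, "format": "json"})
    return f"http://{host}:{port}/api/v1/data?{query}"


def dimension_averages(payload: dict) -> dict:
    """Average each dimension over the returned rows, skipping null samples."""
    labels = payload["labels"][1:]  # the first label is the timestamp column
    rows = payload["data"]
    averages = {}
    for i, label in enumerate(labels):
        values = [row[i + 1] for row in rows if row[i + 1] is not None]
        averages[label] = sum(values) / len(values) if values else None
    return averages


def fetch_averages(host: str = "localhost", chart: str = "system.cpu", seconds: int = 60) -> dict:
    """Fetch a chart from the agent and summarize it per dimension."""
    with urllib.request.urlopen(data_url(host, chart, seconds), timeout=10) as resp:
        return dimension_averages(json.load(resp))
```

For example, `fetch_averages("pve1")` (hypothetical host name) would return the average of each CPU dimension, such as user and system, over the last minute.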

5. My AI Toolbox: Ollama, OpenWebUI, kubectl-ai, and Windsurf

2025 has been a breakout year for practical AI tools in the home lab. I have seen real benefits, not just flashy demos: in my experience, these tools genuinely improve automation, monitoring, and productivity. I’ve been hands-on with several LLM-based tools that now form the foundation of my AI workflow stack:

- Ollama + OpenWebUI – for running local LLMs like Gemma and Mixtral in a private, responsive chat environment.

- kubectl-ai – a powerful command-line tool from Google that ties an AI model into your normal kubectl workflow, so you can manage your cluster with natural-language requests. Check out my article here: Meet kubectl-ai: Google Just Delivered the Best Tool for Kubernetes Management.

- Windsurf – an LLM-powered coding assistant that works right from a VS Code-style interface. Instead of copying and pasting code between your browser and your editor, it helps with that process directly, even editing files for you.

Why I keep using them:

- They’re mostly self-hostable or API-compatible for local use

- They actually solve real operational problems (vs. just novelty)

- They’re modular and easy to combine into workflows or scripts
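As an example of combining them, the sketch below turns a handful of metric averages (the kind you could pull from Netdata) into a prompt and sends it to a local Ollama model. It assumes Ollama’s default endpoint on localhost; the model tag, prompt wording, and function names are placeholders, not a workflow I’m claiming to run exactly as written.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_health_prompt(host: str, averages: dict) -> str:
    """Turn {metric name: average value} into a short review prompt for an LLM."""
    lines = [f"- {name}: {value:.1f}" for name, value in sorted(averages.items())]
    return (
        f"You are helping run a home lab. Here are average metric values "
        f"for {host} over the last minute:\n"
        + "\n".join(lines)
        + "\nIn two sentences, say whether anything looks unusual."
    )


def ask_ollama(prompt: str, model: str = "gemma3", url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """POST the prompt to Ollama (non-streaming) and return the text reply."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(url, data=payload, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["response"]
```

A run might look like `ask_ollama(build_health_prompt("pve1", {"user": 3.0, "system": 1.0}))`, with `pve1` and the numbers standing in for your own host and data. Keeping prompt building separate from the HTTP call makes it easy to swap in a different metrics source or model.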

Wrapping up

Hopefully my favorite home lab tools of 2025, which form the core of what I use for testing and experimenting with different technologies (especially containerized ones), will give you ideas for what to try next. They let me learn new technologies and work through ideas for projects I may want to introduce into the home lab.

Got a favorite tool you’ve discovered in 2025? Hit me up in the comments and let’s talk shop.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.
