As with any discipline, there are many different paths that lead to the same goal. However, certain skills are foundational to whatever you are trying to learn. With DevOps, many different skills could be considered foundational to the DevOps mindset, and some may disagree with me here. Still, I think the foundational skill that anyone starting their DevOps journey should begin with is containers, and specifically, Docker. Let’s see why containers are a great first step and how to get hands-on with them fast.

Why containers are the logical first step to DevOps

Containers are purpose-built for the DevOps mindset. DevOps is about deploying software not only quickly and repeatably but also reliably across environments, and containers do this much better than virtual machines.

Containers have many advantages and capabilities.

- Everything needed to run an app – A container image bundles everything required to run an application, including its runtime, libraries, and other prerequisites.

- Repeatability – You can use a simple Dockerfile to rebuild a container image with the same software every time

- Portability – As long as the underlying platform is compatible (the same CPU architecture and OS kernel type, such as Linux on x86_64), the same container image can run across multiple environments, whether that is a laptop, a Docker host, a Kubernetes cluster, or a cloud environment

- Immutability – Once the image is built, it doesn’t change. If you need to change something, you rebuild the container image. This makes changes and configuration much easier to track and reproduce.

These characteristics are core to how DevOps workflows operate and succeed. Learning Docker and container technologies will help you take what I think is the pivotal first step into DevOps practices and workflows.

Also, spinning up your first Docker host on a Linux operating system will help you get familiar with Bash and the Linux command line while you learn Docker.

“Dependency hell” and portability

If you have ever tried to install a very involved application on a virtual machine and run into “dependency hell”, you understand the pain that can come from running apps in full-blown VMs. And if every dependency on the production server doesn’t exactly match the development machine, the app may work in development but fail on the production host.

Also, have you ever upgraded an app on a virtual machine, only to have a previously installed dependency cause problems because the new version of the app needs something different?

A container helps you get around dependency hell because all of the app components and prerequisites are bundled together in the container image. To upgrade, you simply pull the updated image and re-create the container. To promote an app, you move the same image from a development machine to production, and it runs the same way on the production server as it did in the development environment.
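To make the upgrade flow concrete, here is a minimal Python sketch that prints (and, only if you opt in, runs) the usual Docker CLI sequence: pull the new image, stop and remove the old container, then start a new one from the updated image. The helper names `upgrade_commands` and `upgrade`, the container name `web`, the `nginx:latest` image, and the `8080:80` port mapping are illustrative assumptions; a real container will usually need its own volumes, environment variables, and other `docker run` options.

```python
import subprocess


def upgrade_commands(name: str, image: str, port_map: str) -> list[list[str]]:
    """Build the Docker CLI commands that upgrade a container to a newly pulled image."""
    return [
        ["docker", "pull", image],  # fetch the upgraded image from the registry
        ["docker", "stop", name],   # stop the running container (assumes it exists)
        ["docker", "rm", name],     # remove it; data not stored in a volume is discarded
        # re-create the container from the freshly pulled image
        ["docker", "run", "-d", "--name", name, "-p", port_map, image],
    ]


def upgrade(name: str, image: str, port_map: str, dry_run: bool = True) -> None:
    """Print each command; only execute them when dry_run is False."""
    for cmd in upgrade_commands(name, image, port_map):
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError and stops at the first failing step
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical example: a container named "web" running the public nginx image
    upgrade("web", "nginx:latest", "8080:80")
```

With `dry_run=True` (the default) the script only prints the commands, which is a safe way to review them before running anything against a real host.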

Learn these core Docker concepts

Here are several core Docker concepts that are great to learn first and will put you well on your way to this important first step:

- Image – A read-only template that defines your app and environment. Built from a Dockerfile.

- Container – A running instance of a container image. You can create, start, stop, and delete containers

- Dockerfile – A simple text file that contains the instructions, components, and environment variables needed to build the container image.

- Volumes – Volumes let you mount external storage into your containers so that data persists beyond the life of an individual container

- Networks – Container virtual networks allow communication between services hosted in containers

- Docker Hub – This is like the GitHub of container images where you can store and pull down container images

An example of building your first container from scratch

Let’s walk through a real-world example: creating a basic Python web app and containerizing it.

Step 1: Create a simple Python app

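```python
# app.py
from flask import Flask

app = Flask(__name__)

@app.route('/')
def hello():
    return "Hello from Docker!"

if __name__ == "__main__":
    # Listen on all interfaces so the app is reachable through the container's published port
    app.run(host="0.0.0.0", port=5000)
```

Step 2: Write a Dockerfile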

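```dockerfile
# Use an official Python base image
FROM python:3.10-slim

# Set the working directory
WORKDIR /app

# Install dependencies first so this layer stays cached when only the code changes
RUN pip install --no-cache-dir flask

# Copy source code
COPY . .

# Document the port the app listens on (publishing it still requires -p at run time)
EXPOSE 5000

# Run the application
CMD ["python", "app.py"]
```

Step 3: Build and run the container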

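```bash
# Build an image named my-python-app from the Dockerfile in the current directory
docker build -t my-python-app .

# Run it, publishing host port 5000 to container port 5000
docker run -p 5000:5000 my-python-app
```

Visit http://localhost:5000 in your browser. You should see “Hello from Docker!”, which means you have deployed your first containerized app!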
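If you would rather check from a script than a browser, a small smoke test like the following sketch can confirm the container is answering. It uses only the Python standard library; the function name `smoke_test` and the 5-second timeout are assumptions for illustration, while the URL and expected text match the example app above.

```python
import urllib.request


def smoke_test(url: str = "http://localhost:5000/",
               expected: str = "Hello from Docker!",
               timeout: float = 5.0) -> bool:
    """Return True if the app at `url` answers HTTP 200 with the expected text."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8")
            return resp.status == 200 and expected in body
    except OSError:
        # URLError, HTTPError, refused connections, and timeouts all subclass OSError
        return False


if __name__ == "__main__":
    print("OK" if smoke_test() else "App not reachable")
```

A check like this is easy to drop into a script or CI job later, which is where the same idea tends to show up as you move further into DevOps.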

Tips for learning Docker faster

Here are several things I have found that help you learn Docker much faster. They helped me get up to speed quickly:

- Use Docker Compose early: Docker Compose is a great tool to start with early. It gets you used to combining multiple Docker services into a single cohesive unit to build applications that rely on different components. I have also found that it helps with some of the concepts behind Kubernetes manifest files.

- Bookmark common commands: Create a cheatsheet of commands like `docker build`, `docker run`, `docker ps`, `docker exec`, `docker logs`, and `docker compose up` until you are familiar with them and remember them with “muscle memory.”

- Watch logs in real time: Get familiar with the `docker logs` command. Running `docker logs -f <container_id>` follows the container’s output live, so you can see errors and other activity inside the container and troubleshoot issues much more easily.

- Clean up regularly: Use `docker system prune -a` to remove stopped containers and unused images. One thing I found with my first Docker host was that free disk space kept shrinking, even when I didn’t have many containers running. The `docker system prune` command frees up stale container images and other old artifacts that quietly accumulate on the Docker host.

- Use VS Code with the Docker extension: It gives you an interactive view of containers, images, and logs, and lets you manage Docker right from your editor.
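To catch the disk space creep described above before it becomes a problem, a minimal sketch like this reports how full the filesystem holding Docker’s data is. It assumes a Linux host using the default data directory `/var/lib/docker` (Docker Desktop and custom `data-root` settings store data elsewhere); the `docker_disk_report` name and the 80% warning threshold are arbitrary choices for illustration.

```python
import shutil


def docker_disk_report(path: str = "/var/lib/docker",
                       warn_percent: float = 80.0) -> tuple[float, bool]:
    """Return (percent used, should_warn) for the filesystem that contains `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    percent_used = usage.used / usage.total * 100
    return percent_used, percent_used >= warn_percent


if __name__ == "__main__":
    try:
        pct, warn = docker_disk_report()
    except OSError:
        print("Could not read the Docker data directory; is Docker installed here?")
    else:
        print(f"{pct:.1f}% of the Docker filesystem is used")
        if warn:
            print("Consider cleaning up with: docker system prune -a")
```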

One of the best ways I have found – Projects!

Personally, I have found that one of the best ways to learn anything is project-based learning. An actual project creates focus on both the end result and the path to get there. Projects can be anything. However, when it comes to Docker, there are some really great projects to begin with, like the following suggestions.

- Migrate an app from a VM over to a container – As a simple suggestion, if you are running Pi-hole in a VM for DNS on your home network, why not migrate it to Docker?

- Stand up a reverse proxy – If you already have one or two containers, stand up your first reverse proxy to learn how it works, along with SSL/TLS certificate termination

- Create an “app stack” with Docker Compose – This will teach you how to put apps together in a Docker Compose file.

Also, check out my full blog post just on this subject here: Best 5 Home Server Projects to start learning Docker in 2025.

Common mistakes and troubleshooting with Docker

When you first start working with Docker, a few common mistakes will come up that need troubleshooting. Note the following ones that can cause issues:

- Forgetting to map ports – If you don’t use `-p`, your app might be running, but inaccessible.

- Confusing images with containers – Remember, images are blueprints. Containers are running instances.

- Not cleaning up – Old containers and dangling images accumulate fast, so prune periodically.

- Image bloat – Use slim base images and multi-stage builds to avoid bloated image sizes.

- Volume mishaps – Not mounting volumes for persistent data will cause data loss when the container is removed or re-created, for example after pulling an upgraded image.

Understanding and avoiding these pitfalls early will save you a lot of frustration down the road.
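For the port-mapping mistake in particular, a quick TCP check from the host can tell you whether anything is accepting connections on the published port. This is a minimal standard-library sketch; the `port_is_open` name and the 2-second timeout are assumptions. Keep in mind it only confirms that something is listening on the host port, not that the app behind it is healthy.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused or timed out: nothing reachable on that port
        return False


if __name__ == "__main__":
    # 5000 is the host port published with -p 5000:5000 in the earlier example
    print(port_is_open("localhost", 5000))
```

If this prints `False` while `docker ps` shows the container running, a missing or mistyped `-p` mapping is a likely culprit.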

What is the logical next step after learning Docker?

Once you’re comfortable building and running containers, you’re ready for the next layer of DevOps progression: orchestration, automation, and GitOps workflows. Note the following:

- Container orchestration: Learn Kubernetes or Docker Swarm to manage multiple containers at scale with self-healing capabilities

- CI/CD pipelines: Use tools like GitHub Actions, GitLab CI, or Jenkins to automatically build, test, and deploy Docker containers on every commit

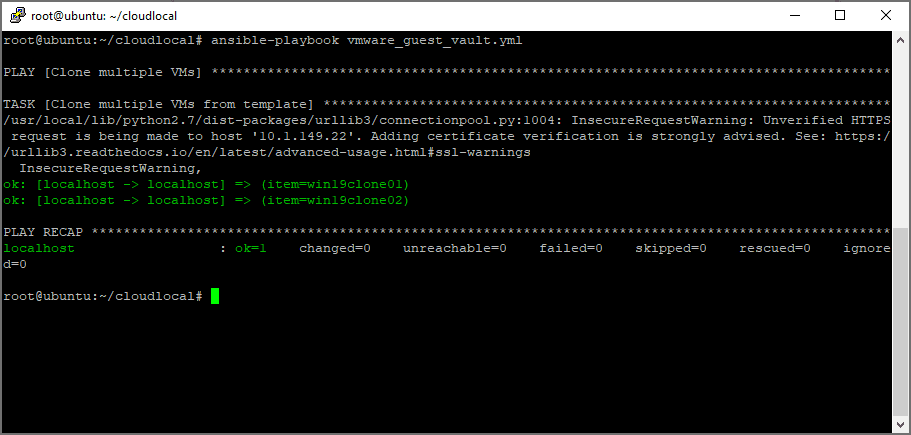

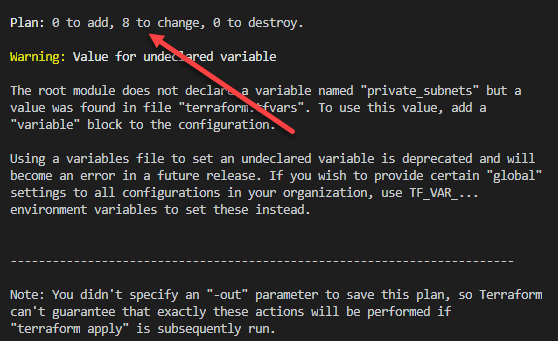

- Infrastructure as Code: Use Docker with tools like Terraform and Ansible to automate infrastructure provisioning

- GitOps workflows: Use Git as the source of truth for declarative infrastructure and application delivery

Mastering containers with Docker isn’t just a step in DevOps; it’s the launchpad. It gives you the confidence to move into automation, orchestration, and continuous delivery with clarity and control.

Wrapping up

DevOps is a great field to get into and a natural progression from traditional architecture and operations roles built on virtualized environments. Since many organizations are migrating from traditional VMs to containerized environments, and many have adopted infrastructure as code, taking your first DevOps steps with Docker makes a lot of sense as a career path. CI/CD pipelines built on containers and cloud-native technologies rely on containerized infrastructure to run builds and push them to staging and production. I think learning Docker is the foundational step toward getting into DevOps from a traditional infrastructure role. Let me know your thoughts.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.