AWS Load Balancer Controller installation and configuration

Let’s look at the AWS Load Balancer Controller, an open-source project that lets you manage AWS Elastic Load Balancers from Kubernetes using Ingress and Service resources.

Table of contents

- What is an AWS Load Balancer?

- What is an AWS Load Balancer Controller?

- Kubernetes Ingress and Application Load Balancer

- TargetGroupBinding

- Ingress Creation Process

- AWS Load Balancer Controller on EKS Cluster

- Installing the AWS Load Balancer Controller

- Deploying on EKS

- Traffic Routing

- Ingress Group – Multiple Ingress Resources Together

- Deploy Applications

- Wrapping up

What is an AWS Load Balancer?

It’s a managed service that distributes incoming application traffic across multiple targets, such as Amazon Elastic Compute Cloud (EC2) instances. Because it spreads traffic across multiple resources, it helps you build applications that are highly available (HA) and fault tolerant.

Elastic Load Balancing offers several load balancer types, including:

- Application Load Balancer (ALB)

- Network Load Balancer (NLB)

- Classic Load Balancer

What is an AWS Load Balancer Controller?

It’s an open-source project that makes it possible to manage AWS Elastic Load Balancers from Kubernetes. The controller watches your Kubernetes resources and automatically provisions and configures AWS Application Load Balancers (ALBs) and Network Load Balancers (NLBs) that route traffic to your Kubernetes services.

Kubernetes Ingress and Application Load Balancer

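A Kubernetes Ingress lets you define how external HTTP and HTTPS traffic should be routed to services within a Kubernetes (K8s) cluster. The Ingress itself is only a set of routing rules; an Ingress controller does the heavy lifting of putting those rules into effect. With the AWS Load Balancer Controller, that means provisioning an ALB that implements the rules.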
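In current Kubernetes versions, an Ingress is matched to its controller through an IngressClass. A minimal sketch of an IngressClass for this controller is shown below; by default the controller’s Helm chart creates one named alb for you, so treat this as illustrative rather than a required step. An Ingress can then opt in with spec.ingressClassName: alb, which supersedes the older kubernetes.io/ingress.class: alb annotation that the examples in this article use.

```yaml
# IngressClass that hands matching Ingress resources
# to the AWS Load Balancer Controller.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: alb
spec:
  # Controller identifier used by the AWS Load Balancer Controller
  controller: ingress.k8s.aws/alb
```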

TargetGroupBinding

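TargetGroupBinding is a custom resource that binds a Kubernetes Service to an existing AWS target group. The controller keeps the target group’s registered targets (pod IPs or worker node instances) in sync with the Service, registering and deregistering them as pods come and go. The load balancer itself, along with its listeners and rules, is created and managed outside the controller, for example through the AWS Management Console, AWS CloudFormation, or Terraform.

This lets you manage your Kubernetes services and your load balancers independently, giving you more control over how traffic is routed and balanced.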
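A minimal TargetGroupBinding might look like the following sketch. The resource name my-tgb, the Service my-service, and the target group ARN (with the placeholder account ID 111122223333) are hypothetical. The target group must already exist, the TargetGroupBinding lives in the same namespace as the Service it references, and targetType should match the target type the target group was created with.

```yaml
apiVersion: elbv2.k8s.aws/v1beta1
kind: TargetGroupBinding
metadata:
  name: my-tgb                # hypothetical name
spec:
  serviceRef:
    name: my-service          # hypothetical Service in the same namespace
    port: 80                  # Service port whose endpoints become targets
  # Existing target group created outside the controller (placeholder ARN)
  targetGroupARN: arn:aws:elasticloadbalancing:region-code:111122223333:targetgroup/my-tg/0123456789abcdef
  # "ip" registers pod IPs; "instance" registers worker nodes
  targetType: ip
```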

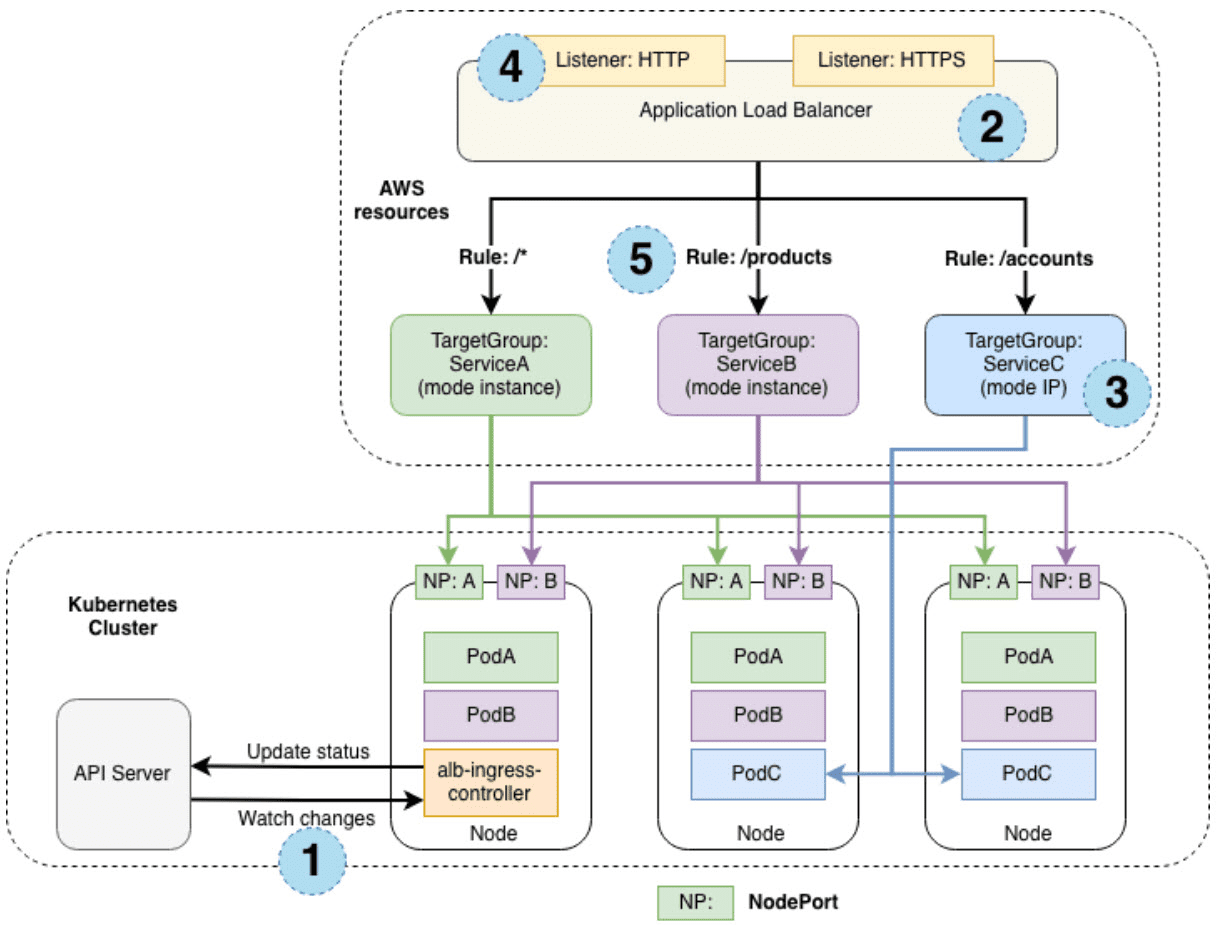

Ingress Creation Process

This section walks through each step (circle) in the diagram above, showing how a single Ingress resource is created.

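- The controller watches the Kubernetes API server for Ingress events. When it finds an Ingress resource that it should handle, it begins creating AWS resources.

- An Application Load Balancer (ALB, also known as ELBv2) is created in AWS for the new Ingress resource. It can be internet-facing or internal, depending on what you need.

- Target groups are created in AWS for each unique Kubernetes service described in the Ingress resource.

- Listeners are created for every port specified in the Ingress resource annotations (port 80 or 443 by default), and SSL/TLS certificates can be attached to them.

- Rules are created for each path specified in the Ingress resource, so that traffic to a given path is routed to the correct Kubernetes service.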
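To see how these steps map to configuration, here is a hedged sketch of an Ingress whose annotations drive the ALB scheme, target type, listeners, and certificate. The name my-public-ingress and the certificate ARN (placeholder Region and account ID) are illustrative, and app1-service stands in for any backend Service. Without the scheme annotation the controller creates an internal ALB, and without target-type it registers worker node instances, which requires a NodePort Service.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-public-ingress     # hypothetical name
  annotations:
    kubernetes.io/ingress.class: alb
    # ALB scheme: internet-facing or internal (internal is the default)
    alb.ingress.kubernetes.io/scheme: internet-facing
    # Target groups register pod IPs instead of worker node instances
    alb.ingress.kubernetes.io/target-type: ip
    # One listener is created per port listed here
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
    # ACM certificate attached to the HTTPS listener (placeholder ARN)
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:region-code:111122223333:certificate/certificate-id
spec:
  rules:
    - http:
        paths:
          # Each path becomes a listener rule forwarding to its Service's target group
          - path: /app1
            pathType: Prefix
            backend:
              service:
                name: app1-service
                port:
                  number: 80
```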

AWS Load Balancer Controller on EKS Cluster

To install and configure the AWS Load Balancer Controller on an Amazon EKS cluster, you’ll need to follow these steps:

- Create an EKS cluster or use an existing one.

- Install and configure the AWS CLI, kubectl, and eksctl (and Helm, if you plan to install the controller with its Helm chart).

- Set up the necessary IAM roles and policies for the controller.

- Deploy the AWS Load Balancer Controller using a Helm chart or YAML manifests.

Installing the AWS Load Balancer Controller

The AWS Load Balancer Controller manages AWS Elastic Load Balancers for a Kubernetes cluster, provisioning the following resources:

- An AWS Application Load Balancer (ALB) when you create a Kubernetes Ingress.

- An AWS Network Load Balancer (NLB) when you create a Kubernetes Service of type LoadBalancer that is handed to the controller, for example with the service.beta.kubernetes.io/aws-load-balancer-type: external annotation.
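A minimal, hypothetical Service that asks the controller for an NLB might look like the following. The name my-nlb-service and the app: my-app selector are placeholders. The aws-load-balancer-type: external annotation hands the Service to the controller instead of the legacy in-tree cloud provider, nlb-target-type: ip registers pod IPs directly, and the scheme annotation makes the NLB internet-facing (the controller defaults to internal).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nlb-service        # hypothetical name
  annotations:
    # Let the AWS Load Balancer Controller provision the NLB
    service.beta.kubernetes.io/aws-load-balancer-type: external
    # Register pod IPs as NLB targets
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    # Expose the NLB to the internet (internal is the default)
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
spec:
  type: LoadBalancer
  selector:
    app: my-app               # hypothetical pod label
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```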

Deploying on EKS

Follow these steps:

Create an IAM policy. First, download the IAM policy document for the AWS Load Balancer Controller, which allows the controller to make calls to AWS APIs on your behalf.

AWS GovCloud (US-East) or AWS GovCloud (US-West) Regions:

```shell
curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.7/docs/install/iam_policy_us-gov.json
```

All other AWS Regions:

```shell
curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.7/docs/install/iam_policy.json
```

Then create an IAM policy from the downloaded document:

```shell
# In GovCloud Regions, use file://iam_policy_us-gov.json instead
aws iam create-policy \
  --policy-name AWSLoadBalancerControllerIAMPolicy \
  --policy-document file://iam_policy.json
```

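Create an IAM role and a Kubernetes service account named aws-load-balancer-controller in the kube-system namespace, and associate the two so that the controller can assume the role. This relies on IAM roles for service accounts (IRSA), so your cluster needs an IAM OpenID Connect (OIDC) provider.

You can use eksctl, or the AWS CLI together with kubectl, to create the IAM role and the Kubernetes service account.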
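With eksctl, one option is to declare the service account in a ClusterConfig file. This is a sketch under assumptions: the file name cluster.yaml, the cluster name my-cluster, the Region placeholder region-code, and the account ID 111122223333 are placeholders, and the policy ARN points at the AWSLoadBalancerControllerIAMPolicy created earlier.

```yaml
# cluster.yaml (hypothetical file name)
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: my-cluster            # placeholder: your cluster name
  region: region-code         # placeholder: your cluster's Region
iam:
  # IRSA requires an IAM OIDC provider for the cluster
  withOIDC: true
  serviceAccounts:
    - metadata:
        name: aws-load-balancer-controller
        namespace: kube-system
      # Policy created earlier with aws iam create-policy (placeholder account ID)
      attachPolicyARNs:
        - arn:aws:iam::111122223333:policy/AWSLoadBalancerControllerIAMPolicy
```

For an existing cluster, you could first associate the OIDC provider with eksctl utils associate-iam-oidc-provider --region=region-code --cluster=my-cluster --approve, and then create the role and service account with eksctl create iamserviceaccount --config-file=cluster.yaml --approve.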

Optionally, configure the AWS Security Token Service (STS) endpoint type used by your Kubernetes service account.
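If you take the AWS CLI and kubectl route instead, you create the IAM role yourself (with a trust policy for the cluster’s OIDC provider) and then apply a service account manifest such as this sketch. It assumes a role named AmazonEKSLoadBalancerControllerRole in the placeholder account 111122223333; the optional sts-regional-endpoints annotation is one way to handle the STS endpoint type.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: aws-load-balancer-controller
  namespace: kube-system
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/name: aws-load-balancer-controller
  annotations:
    # IAM role the controller assumes through IRSA (placeholder ARN)
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/AmazonEKSLoadBalancerControllerRole
    # Optional: use the Regional STS endpoint instead of the global one
    eks.amazonaws.com/sts-regional-endpoints: "true"
```

You would then create it with kubectl apply -f followed by the file name you saved it under.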

If you installed the AWS ALB Ingress Controller or version 0.1.x of the AWS Load Balancer Controller with Helm, uninstall it first.

If you installed the incubator/aws-alb-ingress-controller Helm chart, uninstall it:

```shell
helm delete aws-alb-ingress-controller -n kube-system
```

If you have version 0.1.x of the eks-charts/aws-load-balancer-controller chart installed, uninstall it. Upgrading from 0.1.x to version 1.0.0 doesn’t work because of an incompatibility with the webhook API version.

```shell
helm delete aws-load-balancer-controller -n kube-system
```

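Install the AWS Load Balancer Controller using Helm v3 or later, or by applying a Kubernetes manifest. The steps below use Helm.

Add the eks-charts repository:

```shell
helm repo add eks https://aws.github.io/eks-charts
```

Update your local repo to make sure you have the most recent charts:

```shell
helm repo update
```

If your nodes don’t have access to the Amazon ECR Public image repository, pull the following image, push it to a repository that your nodes can access, and point the chart at that copy with the image.repository value:

```
public.ecr.aws/eks/aws-load-balancer-controller:v2.4.7
```

Install the AWS Load Balancer Controller, replacing my-cluster with the name of your cluster. The command reuses the aws-load-balancer-controller service account created earlier instead of letting the chart create one:

```shell
helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
  -n kube-system \
  --set clusterName=my-cluster \
  --set serviceAccount.create=false \
  --set serviceAccount.name=aws-load-balancer-controller
```

If you’re deploying to Fargate, add the following flags to the helm install command, replacing region-code and vpc-xxxxxxxx with your cluster’s Region and VPC ID:

```shell
--set region=region-code \
--set vpcId=vpc-xxxxxxxx
```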
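As an alternative to --set flags, the same settings could live in a Helm values file passed with helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system -f values.yaml. The keys below (clusterName, serviceAccount.create, serviceAccount.name, region, vpcId, image.repository, image.tag) are values exposed by the eks/aws-load-balancer-controller chart; the file name and every value shown are placeholders to replace with your own.

```yaml
# values.yaml (hypothetical file name) for the eks/aws-load-balancer-controller chart
clusterName: my-cluster              # placeholder: your cluster name
serviceAccount:
  create: false                      # reuse the IRSA service account created earlier
  name: aws-load-balancer-controller
# Fargate only: uncomment and fill in your Region and VPC ID
# region: region-code
# vpcId: vpc-xxxxxxxx
# Nodes without Amazon ECR Public access only: uncomment and point at
# the registry you copied the image to (placeholder repository)
# image:
#   repository: 111122223333.dkr.ecr.region-code.amazonaws.com/amazon/aws-load-balancer-controller
#   tag: v2.4.7
```

Keeping the settings in a file makes them easier to review and reuse for later helm upgrade runs.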

Verify that the controller is installed:

```shell
kubectl get deployment -n kube-system aws-load-balancer-controller
```

Example output:

```
NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
aws-load-balancer-controller   2/2     2            2           84s
```

Traffic Routing

Here’s a sample Ingress resource that demonstrates path-based routing:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    kubernetes.io/ingress.class: alb
spec:
  rules:
    - http:
        paths:
          - path: /app1
            pathType: Prefix
            backend:
              service:
                name: app1-service
                port:
                  number: 80
          - path: /app2
            pathType: Prefix
            backend:
              service:
                name: app2-service
                port:
                  number: 80
```

This Ingress resource routes traffic to the app1-service Kubernetes service when the request path starts with /app1 and to the app2-service when the request path starts with /app2.

Ingress Group – Multiple Ingress Resources Together

Sometimes, you may need to group multiple Ingress resources together to share a single ALB. The AWS Load Balancer Controller supports this functionality through the concept of an Ingress Group.
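Each member of a group is an ordinary Ingress; the controller merges the rules of every Ingress that carries the same group name into one ALB. As a hedged illustration, the two hypothetical Ingresses below (team-a-ingress and team-b-ingress, in namespaces team-a and team-b, backed by team-a-service and team-b-service) share an ALB through the group name shared-alb. They also set alb.ingress.kubernetes.io/group.order, which controls the order in which each Ingress’s rules are evaluated: lower values go first, and Ingresses without an explicit order default to 0.

```yaml
# Two hypothetical Ingresses that share one ALB via the same group name
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: team-a-ingress
  namespace: team-a
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/group.name: shared-alb
    # Evaluated before team-b-ingress because 10 < 20
    alb.ingress.kubernetes.io/group.order: '10'
spec:
  rules:
    - http:
        paths:
          - path: /team-a
            pathType: Prefix
            backend:
              service:
                name: team-a-service
                port:
                  number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: team-b-ingress
  namespace: team-b
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/group.name: shared-alb
    alb.ingress.kubernetes.io/group.order: '20'
spec:
  rules:
    - http:
        paths:
          - path: /team-b
            pathType: Prefix
            backend:
              service:
                name: team-b-service
                port:
                  number: 80
```

Because any Ingress that uses the same group name joins the group, only let trusted users set this annotation.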

To create an Ingress Group, add the alb.ingress.kubernetes.io/group.name annotation to your Ingress resources:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/group.name: my-ingress-group
```

Deploy Applications

Here’s an example of a Deployment and Service for a sample web application:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-web-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: sample-web-app
  template:
    metadata:
      labels:
        app: sample-web-app
    spec:
      containers:
        - name: sample-web-app-container
          image: sample-web-app:latest
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: sample-web-app-service
spec:
  selector:
    app: sample-web-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: NodePort
```
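To put the sample application behind an ALB, an Ingress along the lines of this sketch could be appended to sample-web-app.yaml. The name sample-web-app-ingress is hypothetical. Because sample-web-app-service is a NodePort Service, the instance target type works here, and the scheme annotation makes the ALB reachable from the internet.

```yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-web-app-ingress   # hypothetical name
  annotations:
    kubernetes.io/ingress.class: alb
    # Expose the ALB to the internet (internal is the default)
    alb.ingress.kubernetes.io/scheme: internet-facing
    # Send traffic to the Service's NodePort on each worker node
    alb.ingress.kubernetes.io/target-type: instance
spec:
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: sample-web-app-service
                port:
                  number: 80
```

After the resources are applied, kubectl get ingress sample-web-app-ingress should eventually show the ALB’s DNS name in the ADDRESS column.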

Use the kubectl apply command to create the resources in your EKS cluster:

```shell
kubectl apply -f sample-web-app.yaml
```

Wrapping up

AWS Elastic Load Balancing, managed through the AWS Load Balancer Controller, is a powerful cloud-based solution for balancing traffic in your AWS environment. It provides advanced routing, high availability (HA), and scalability, but you have to configure it properly. Check the official documentation and align the configuration with your environment and requirements.