VMware ESXi 7 Update 1 Boot Disk is not Found PSOD

Since upgrading my Supermicro home lab to ESXi 7.0 GA and then ESXi 7 Update 1, I have seen a couple of issues that have plagued my lab environment. One is a failing vSAN daemon liveness check, and the other is a Purple Screen of Death (PSOD) that occurs from time to time after I reboot a host, especially after applying updates. I wanted to give everyone a heads up in case you have seen this in your lab environment or even on a production host. VMware has a KB article that covers the vSAN liveness check failure, and I had a hunch the two issues are related. Recently I ran into the PSOD on a host again and was able to test my theory. Let’s take a look at the VMware ESXi 7 Update 1 Boot Disk is not Found PSOD and see how to resolve it.

Description of the issue

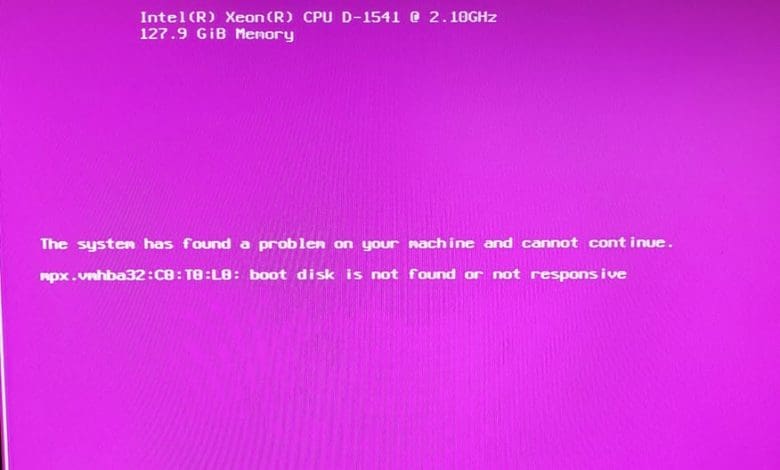

In my home lab, I started with clean ESXi 7.0 installs on all hosts. I first noticed the purple screen of death after applying updates to my ESXi hosts. What does the exact error look like?

The error occurred at the VMware boot splash screen while components were being loaded.

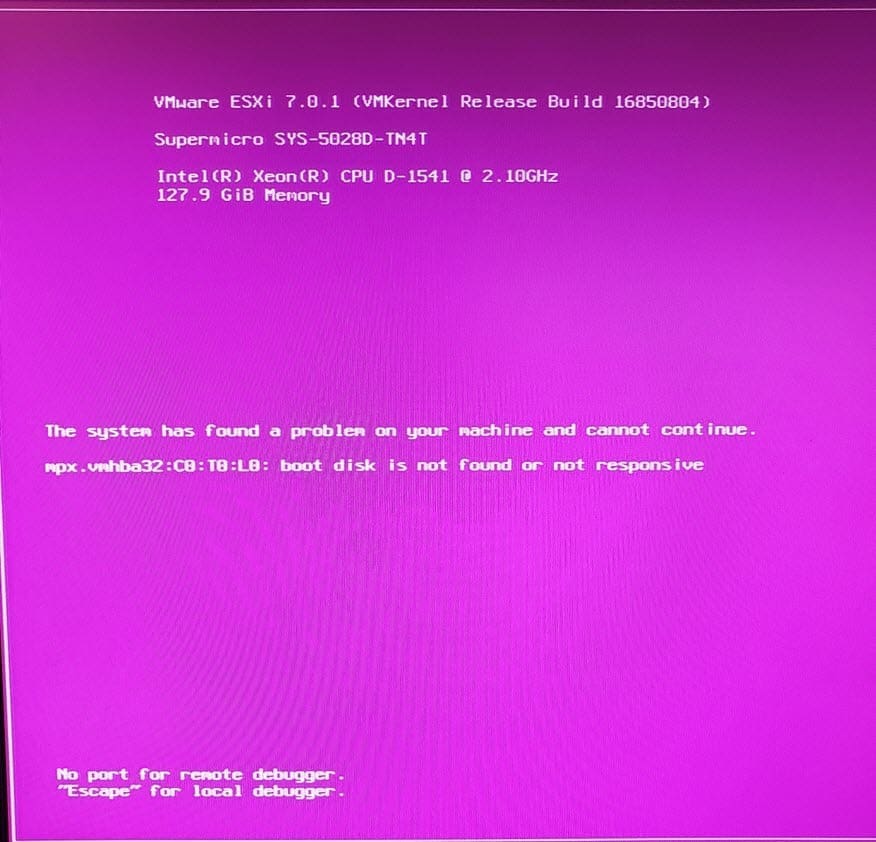

After a few moments, the ESXi host would PSOD with an error referencing the boot device: boot disk is not found or not responsive.

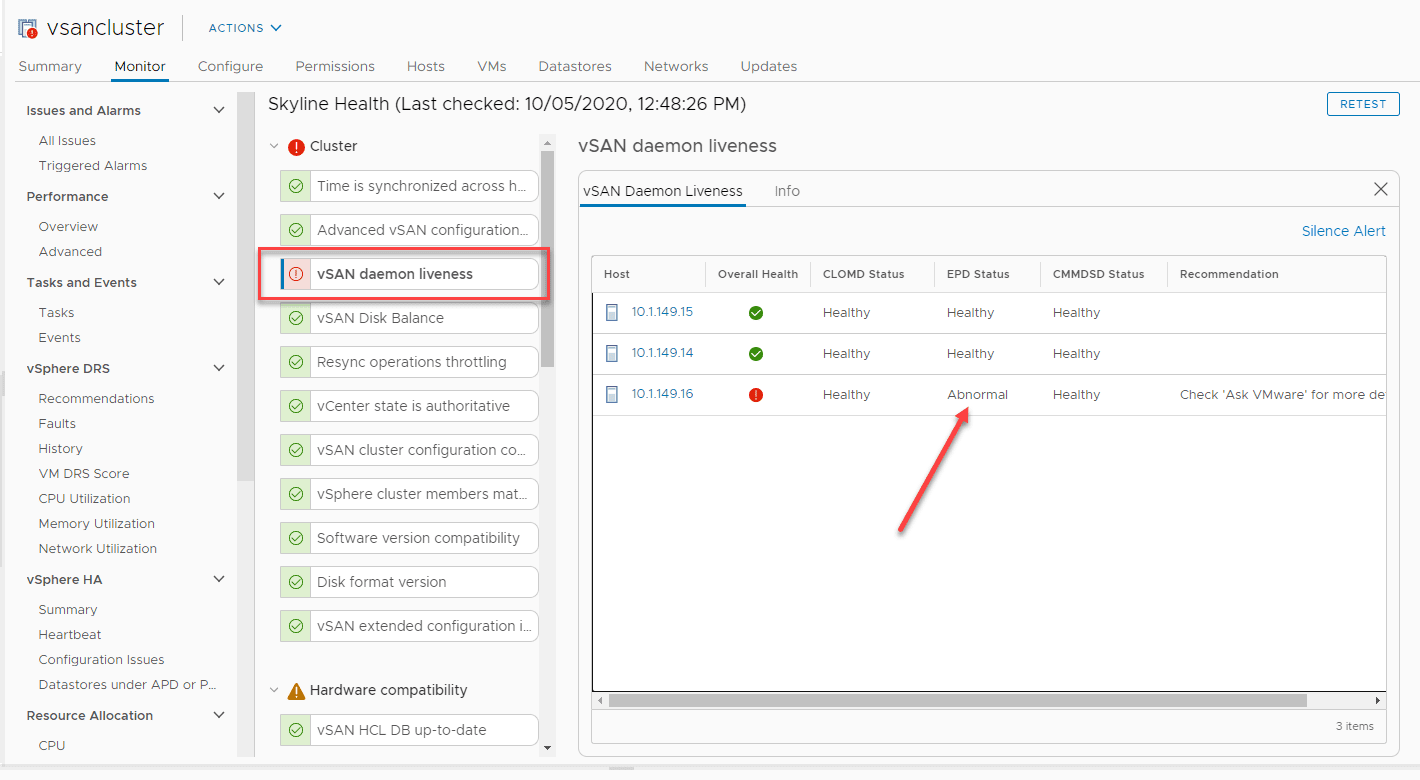

I had also seen a couple of odd things with vSAN when the host booted, which I initially didn’t connect with the PSOD. I wrote about this error in a separate post.

In the vSAN health status check, I would see the EPD status as abnormal. As it turns out, VMware recently released a KB article covering this issue:

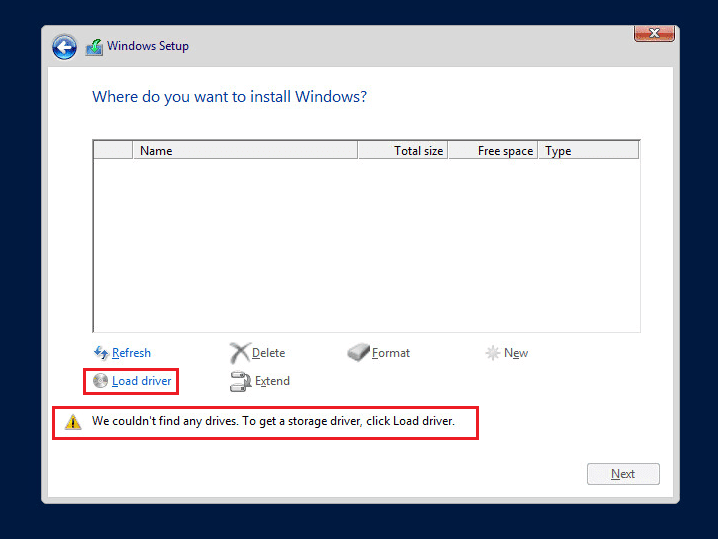

What is the cause of the issue described there? During startup, the storage-path-claim service claims the storage device that ESXi is installed on. This results in the bootbank/altbootbank becoming unavailable, and ESXi then falls back to a ramdisk. Since I had seen the exact vSAN symptom referenced in the KB article, it seemed reasonable that the same problem was also causing the PSOD I was experiencing.

Lab Hardware

I have written quite a bit about my lab hardware in other posts, if you want to know the environment where I am seeing this.


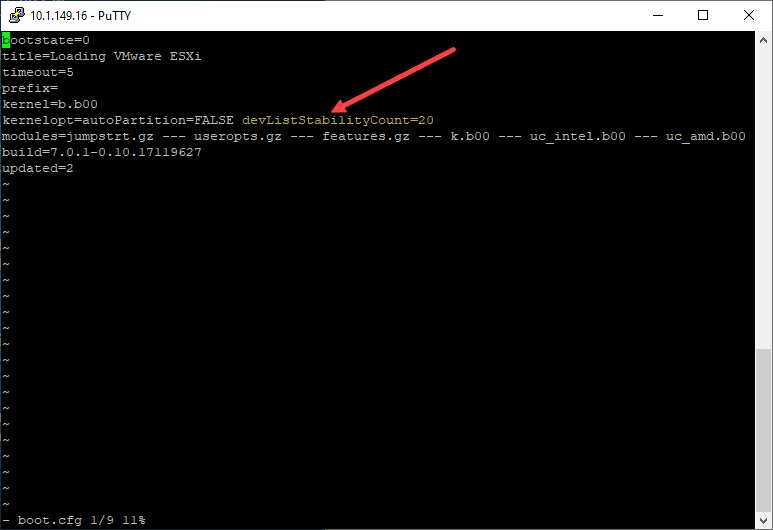

To test my theory, I simply followed the KB article, which describes how to add a delay to the storage-path-claim service. The delay allows the bootbank and altbootbank to be claimed properly. VMware recommends tuning this value based on what you see in your logs, but I simply started with the 30 seconds given in the KB.

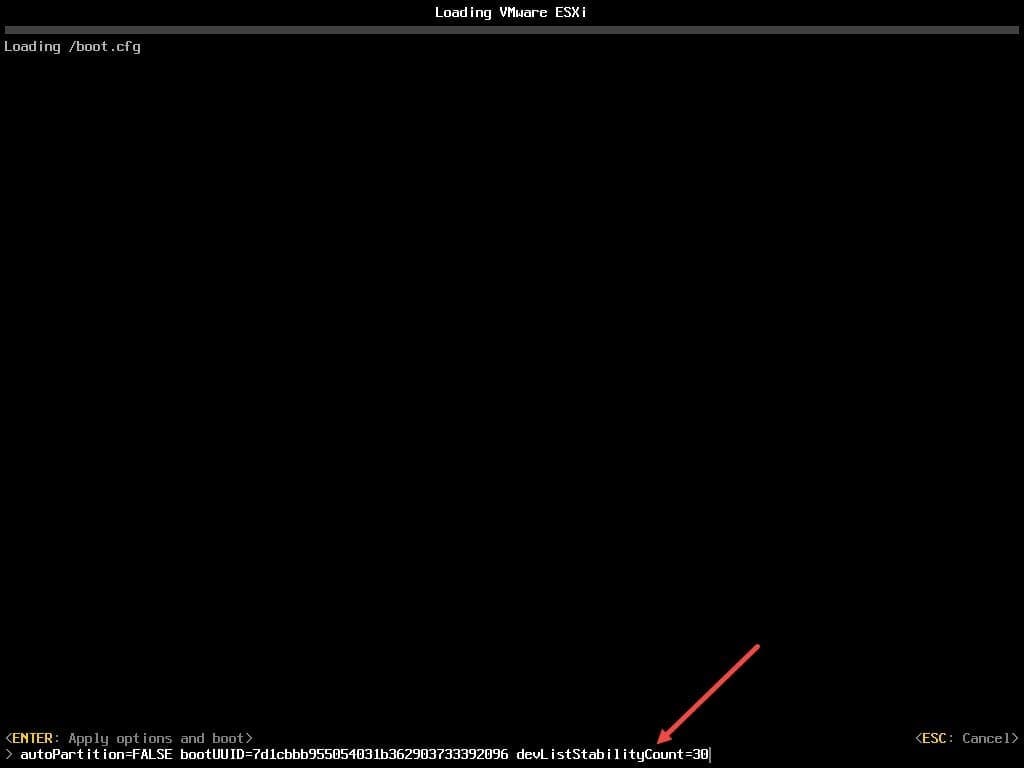

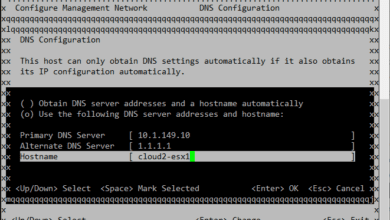

To get to this screen, press SHIFT+O while ESXi is booting to edit the boot options line, then add the following:

- devListStabilityCount=30

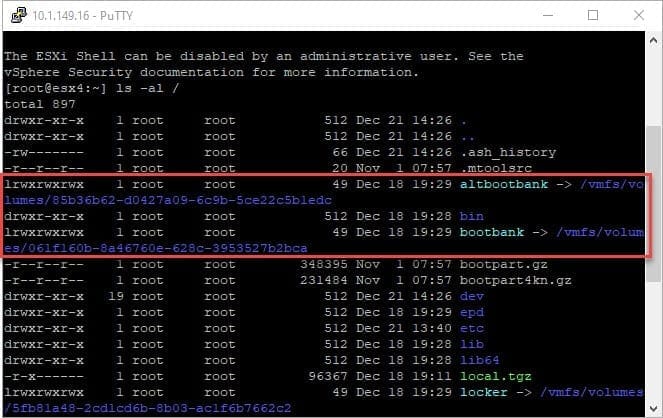

Sure enough, after adding the delay, an ESXi host that had been PSOD’ing booted properly! You can then confirm that the bootbank and altbootbank are claimed correctly. In my case, since the PSOD no longer appeared, it was pretty safe to assume they were. For posterity, though, I checked, and both displayed as claimed correctly.

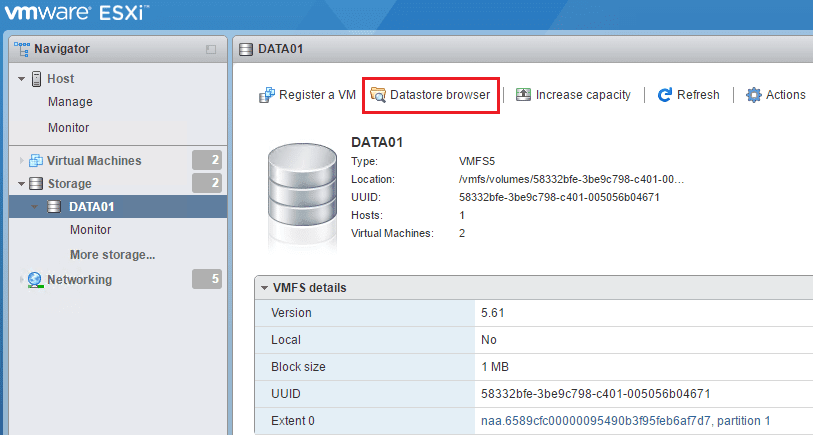
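One quick way to run this check from the host shell is to look at where the /bootbank and /altbootbank links point. The Python sketch below is my own illustration, not the procedure from the KB, and the helper names (bank_status, check_bootbanks) are hypothetical. It assumes that on a healthy host both links resolve to a path under /vmfs/volumes/, and that a host which fell back to ramdisk shows them resolving to /tmp. ESXi 7 ships with a Python 3 interpreter, so it can be run directly on the host.

```python
import os

# Hypothetical helpers (not VMware tooling). Assumption: a claimed boot bank
# resolves under /vmfs/volumes/, while a ramdisk fallback resolves to /tmp.
BANKS = ("/bootbank", "/altbootbank")


def bank_status(target: str) -> str:
    """Classify the resolved target of a boot bank link."""
    if target.startswith("/vmfs/volumes/"):
        return "claimed"
    if target == "/tmp" or target.startswith("/tmp/"):
        return "ramdisk"
    return "unknown"


def check_bootbanks(resolve=os.path.realpath) -> dict:
    """Return {bank: (resolved_target, status)} for /bootbank and /altbootbank.

    The resolver is injectable so the logic can be exercised off-host.
    """
    report = {}
    for bank in BANKS:
        target = resolve(bank)
        report[bank] = (target, bank_status(target))
    return report


if __name__ == "__main__":
    for bank, (target, status) in check_bootbanks().items():
        print(f"{bank} -> {target}: {status}")
```

Passing the resolver in as a parameter keeps the classification logic separate from the filesystem, so it can be tested with a plain dictionary instead of a live host.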

The problem with the SHIFT+O approach is that the setting does not persist across reboots. To make it persistent, edit the boot.cfg file in /bootbank and add the same option to the kernelopt line. First back up the file, then open it in vi:

- cd /bootbank
- cp boot.cfg boot.cfg.bak
- vi boot.cfg

I was able to back the value down to 20 instead of 30, and it still worked. You can arrive at a more precise value by examining the logs as detailed in the KB.
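If you would rather not hand-edit the file in vi, the change can also be scripted. Below is a minimal Python sketch, assuming boot.cfg contains a single kernelopt= line whose options are whitespace-separated. The names set_stability_count and apply_to_file are hypothetical helpers, not VMware tooling. Any existing devListStabilityCount value is replaced so the option appears only once.

```python
import shutil

# Hypothetical helpers (not VMware tooling) for adding devListStabilityCount
# to boot.cfg. Assumes one kernelopt= line with whitespace-separated options.
OPTION = "devListStabilityCount"


def set_stability_count(boot_cfg: str, seconds: int) -> str:
    """Return boot.cfg text with devListStabilityCount=<seconds> on kernelopt."""
    lines = boot_cfg.splitlines()
    for i, line in enumerate(lines):
        if line.startswith("kernelopt="):
            opts = line[len("kernelopt="):].split()
            # Drop any existing value so the option appears exactly once.
            opts = [o for o in opts if not o.startswith(OPTION + "=")]
            opts.append(f"{OPTION}={seconds}")
            lines[i] = "kernelopt=" + " ".join(opts)
            break
    else:
        raise ValueError("no kernelopt= line found in boot.cfg")
    return "\n".join(lines) + "\n"


def apply_to_file(path: str = "/bootbank/boot.cfg", seconds: int = 20) -> None:
    """Back up boot.cfg (same idea as the cp step), then rewrite it in place."""
    shutil.copyfile(path, path + ".bak")
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(set_stability_count(text, seconds))
```

Calling apply_to_file("/bootbank/boot.cfg", 20) on the host mirrors the manual cp and vi steps; the new value only takes effect on the next boot.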

After making the change to your boot.cfg file, you can reboot without the PSOD showing up. Hopefully, if you have experienced the VMware ESXi 7 Update 1 Boot Disk is not Found PSOD, this article will help you quickly get around the issue. I am not sure how many others have seen this issue aside from myself. However, the KB details quite a few related symptoms, such as:

- Configuration changes not persisting across host reboot operations

- The ESXi host may roll back to a previous configuration

- In vSAN environments, the health status may report warnings of EPD status as abnormal

Several different symptoms can result from this storage-path-claim service issue. As a note, the issue still appears to be present as of VMware vSphere ESXi 7 Update 1b.

How do I resolve this error?

Thabo,

Thank you for the comment! Were you able to use the steps in the post?

Brandon