You don’t need cloud credits or racks of GPUs to experiment with powerful AI tools. Thanks to lean local models and awesome open-source tools, you can build your own AI stack in your home lab over a weekend: host your own ChatGPT alternative, automate your RSS feeds, or start building your own AI assistant. Let’s take a look at 5 projects that are weekend-friendly, fun, and future-proof for 2025.

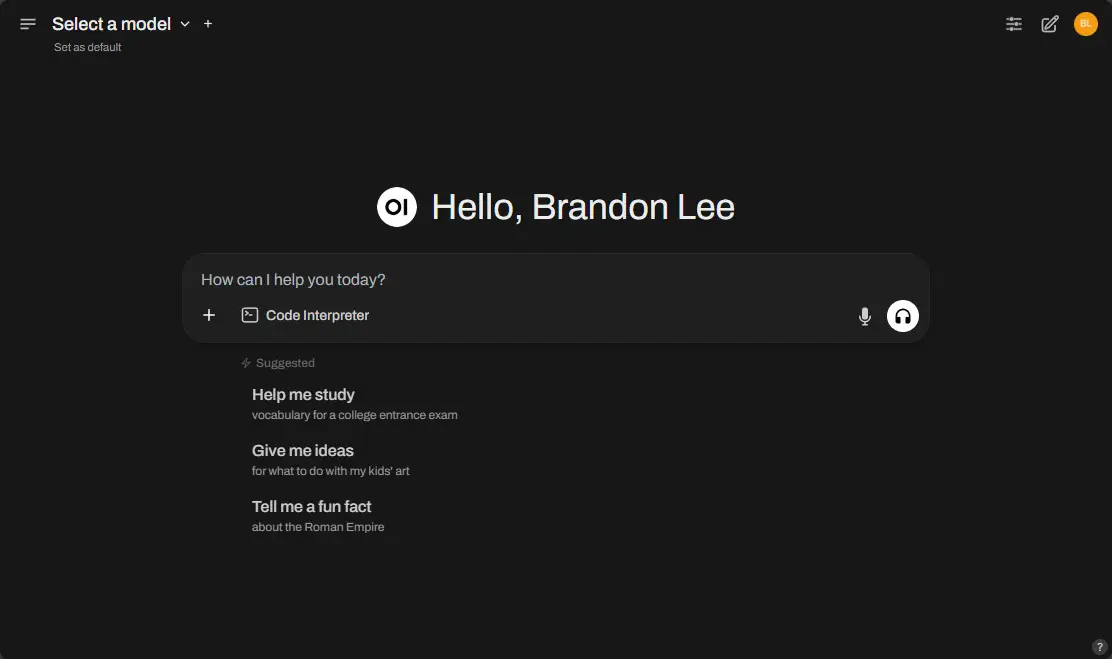

1. Host Your Own ChatGPT with Ollama + OpenWebUI

What it is: A self-hosted, ChatGPT-style chatbot that runs entirely in your home lab, with no cloud subscription or “credits” required.

Why it’s awesome:

- You control your data.

- It works with lightweight models like Phi-3, Gemma 2B/7B, or even Llama 3.

- OpenWebUI provides a full web-based front end that looks and feels like ChatGPT, and in some ways I think it is even better.

How to deploy (in under 30 minutes):

- Use Docker Compose to spin up `ollama` and `open-webui`.

- Download a quantized model (`ollama pull phi3`).

- Access the interface via `http://localhost:3000`.
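Once both containers are running, OpenWebUI talks to Ollama over a local HTTP API, and your own scripts can use that same API. Here is a minimal Python sketch using only the standard library. It assumes Ollama is listening on its default port (11434) on the same machine and that you have already pulled `phi3`; the function names are just illustrative.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default port 11434 on this host.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(prompt: str, model: str = "phi3") -> dict:
    """Build a non-streaming request body for Ollama's /api/generate endpoint."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask_ollama(prompt: str, model: str = "phi3", url: str = OLLAMA_URL) -> str:
    """Send one prompt to Ollama and return the generated text."""
    data = json.dumps(build_generate_payload(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, Ollama returns a single JSON object whose
    # "response" field holds the full completion.
    return body["response"]


if __name__ == "__main__":
    print(ask_ollama("In one sentence, what is a home lab?"))
```

Turning streaming off keeps the script simple at the cost of waiting for the whole answer; OpenWebUI streams tokens so the chat feels responsive.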

You can take a look at my tutorial on running Ollama with GPU passthrough in LXC containers here:

Weekend bonus:

- Try multi-model switching, or connect OpenWebUI to the OpenAI API so ChatGPT models are available as a fallback alongside your local ones.

Resource tip: Even without a GPU, models like Phi-3 run well on a decent CPU (Ryzen 7+ or Core i7). If you do have an NVIDIA GPU, Ollama will take advantage of it automatically. Check out my YouTube video on this one:

2. AI-Powered RSS Summarizer (FreshRSS + Ollama + Python)

What it is: Let AI automatically summarize new articles from your favorite RSS feeds using your local LLM. I have this one in progress myself, so a post on it is coming soon.

Why it’s awesome:

- Combines automation, real-time content, and LLM smarts.

- Completely private and customizable.

- Gives you daily summaries without needing to open 30 tabs.

Stack:

- FreshRSS for feed syncing.

- A Python script that polls unread articles, runs summaries via Ollama or ChatGPT, and marks them read.

- Optional: output summaries to Markdown or post to Substack Notes.
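The summarizing half of that Python script could look something like the sketch below, which leaves out the FreshRSS polling. It assumes article bodies arrive as HTML (which is how feed readers typically store them), that Ollama is on its default port, and that `phi3` is pulled. The three-bullet prompt and the 6,000-character cap are arbitrary choices to keep small models within their context window, and the function names are hypothetical.

```python
import json
import urllib.request
from html.parser import HTMLParser

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default Ollama port


class _TextExtractor(HTMLParser):
    """Collect the visible text of an HTML fragment, skipping script/style."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace left behind by removed tags.
    return " ".join(" ".join(parser.parts).split())


def build_summary_prompt(title: str, html: str, max_chars: int = 6000) -> str:
    # Truncate long articles so the prompt fits a small model's context window.
    body = html_to_text(html)[:max_chars]
    return (
        "Summarize the following article in three bullet points.\n"
        f"Title: {title}\n\n{body}"
    )


def summarize(title: str, html: str, model: str = "phi3") -> str:
    payload = {"model": model, "prompt": build_summary_prompt(title, html), "stream": False}
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["response"]
```

Stripping the HTML first matters more than it looks: markup can eat a large share of a small model's context without adding anything to the summary.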

Weekend bonus:

- Add push notifications via ntfy, or send email using `smtplib`.
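Both notification routes only take a few lines of standard-library Python. This sketch assumes the public ntfy.sh server and a hypothetical topic name (a self-hosted ntfy instance works the same way), and the SMTP host, port, and credentials are placeholders you would fill in.

```python
import smtplib
import urllib.request
from email.message import EmailMessage

# Assumptions: public ntfy.sh server and a hypothetical topic name.
NTFY_URL = "https://ntfy.sh/homelab-rss-digest"


def build_ntfy_request(message: str, title: str = "RSS digest") -> urllib.request.Request:
    """ntfy publishes whatever you POST to a topic URL; the Title header is optional."""
    return urllib.request.Request(
        NTFY_URL,
        data=message.encode("utf-8"),
        headers={"Title": title},
        method="POST",
    )


def send_ntfy(message: str) -> None:
    with urllib.request.urlopen(build_ntfy_request(message), timeout=30):
        pass


def build_email(body: str, sender: str, recipient: str) -> EmailMessage:
    msg = EmailMessage()
    msg["Subject"] = "Daily RSS summaries"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(body)
    return msg


def send_email(msg: EmailMessage, host: str, port: int, user: str, password: str) -> None:
    # Placeholder SMTP settings; STARTTLS on the submission port (commonly 587).
    with smtplib.SMTP(host, port) as smtp:
        smtp.starttls()
        smtp.login(user, password)
        smtp.send_message(msg)
```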

Geek tip: Use the Fever API instead of the older Google Reader API for better support in modern FreshRSS forks.
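For reference, here is a rough sketch of talking to FreshRSS through the Fever API with the standard library. It assumes API access is enabled, that you have set an API password in your FreshRSS profile, and that the endpoint lives at `/api/fever.php` on a hypothetical host name. Fever authenticates with an MD5 hash of `username:password`, and the query names below follow the Fever API as I understand it; double-check them against your server before relying on this.

```python
import hashlib
import json
import urllib.parse
import urllib.request

# Assumption: hypothetical FreshRSS host with the Fever API enabled.
FEVER_URL = "https://freshrss.example.lan/api/fever.php"


def fever_api_key(username: str, api_password: str) -> str:
    # Fever authenticates with the MD5 hex digest of "username:password".
    return hashlib.md5(f"{username}:{api_password}".encode("utf-8")).hexdigest()


def parse_unread_ids(response: dict) -> list[int]:
    # unread_item_ids comes back as a comma-separated string, possibly empty.
    raw = str(response.get("unread_item_ids", ""))
    return [int(x) for x in raw.split(",") if x.strip()]


def fever_call(api_key: str, query: str) -> dict:
    url = f"{FEVER_URL}?api&{query}"
    data = urllib.parse.urlencode({"api_key": api_key}).encode("ascii")
    with urllib.request.urlopen(url, data=data, timeout=30) as resp:
        return json.loads(resp.read())


def fetch_unread(api_key: str) -> list[dict]:
    ids = parse_unread_ids(fever_call(api_key, "unread_item_ids"))
    if not ids:
        return []
    # The Fever spec limits with_ids to 50 ids per request.
    with_ids = ",".join(str(i) for i in ids[:50])
    return fever_call(api_key, f"items&with_ids={with_ids}").get("items", [])


def mark_read(api_key: str, item_id: int) -> None:
    fever_call(api_key, f"mark=item&as=read&id={item_id}")
```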

3. Create a Private AI File Assistant (GPT for Your Notes)

What it is: Your LLM acts as a personal assistant that can search, summarize, and answer questions about your documents.

Why it’s awesome:

- Great use case for local AI—ask questions about your notes, logs, or Markdown files.

- Easy to extend with your own knowledge base or lab documentation.

Weekend setup:

- Use llama-index or privateGPT.

- Point it to a folder of `.md`, `.pdf`, or `.txt` files.

- Run locally with Ollama using models like Phi-3 or Gemma.
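To see what tools like llama-index or privateGPT are doing for you, here is a deliberately naive stand-in. Instead of embeddings and a vector store, it splits `.md` and `.txt` files into fixed-size chunks and ranks them by keyword overlap with the question, then builds a prompt you could send to Ollama. PDF parsing is left out, and the chunk size and function names are hypothetical.

```python
import re
from pathlib import Path

# Naive keyword-overlap retrieval; real tools use embeddings instead.


def load_chunks(folder: str, chunk_chars: int = 800) -> list[tuple[str, str]]:
    """Return (filename, chunk_text) pairs for every .md and .txt file under folder."""
    chunks = []
    for path in sorted(Path(folder).rglob("*")):
        if path.suffix.lower() not in {".md", ".txt"} or not path.is_file():
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for start in range(0, len(text), chunk_chars):
            chunks.append((path.name, text[start:start + chunk_chars]))
    return chunks


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(question: str, chunks: list[tuple[str, str]], k: int = 3) -> list[tuple[str, str]]:
    """Rank chunks by how many distinct question words they contain."""
    q = _words(question)
    scored = [(len(q & _words(body)), name, body) for name, body in chunks]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(name, body) for _, name, body in scored[:k]]


def build_rag_prompt(question: str, chunks: list[tuple[str, str]]) -> str:
    context = "\n\n".join(f"[{name}]\n{body}" for name, body in top_chunks(question, chunks))
    return (
        "Answer the question using only the notes below. "
        "If the notes do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```

Keyword overlap misses synonyms ("backup" vs. "snapshot"), which is exactly the gap embedding-based retrieval closes.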

Bonus project:

- Deploy a lightweight web UI for chatting with your indexed documents.

Hardware tip: Use an SSD-backed LXC container in Proxmox for great performance with low RAM usage.

4. Real-Time AI-Enhanced Logging with Dozzle and Ollama

What it is: Monitor your Docker containers in real time and let AI highlight or summarize interesting log entries. This one is on my own list of projects to try.

Why it’s awesome:

- Useful for DevOps or home lab debugging, since it keeps an eye on things so you don’t have to.

- A great intro to AI-in-the-loop observability.

Stack:

- Dozzle for real-time Docker logs.

- A Python or Go sidecar that reads logs and uses Ollama to summarize anomalies (errors, timeouts, spikes).

- Optional: send insights to a Discord or Slack webhook.
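A Python version of that sidecar might look like the sketch below. One assumption to call out: Dozzle is a log viewer, so this sketch reads logs directly with `docker logs --since` rather than going through Dozzle. It pre-filters lines with a keyword regex (so the LLM only sees likely problems), builds a prompt for Ollama, and formats a webhook body. The keyword list, limits, and function names are hypothetical.

```python
import json
import re
import subprocess
import urllib.request

# Keywords that usually mark lines worth a closer look; tune for your stack.
ANOMALY = re.compile(
    r"\b(error|exception|fatal|panic|timeout|timed out|refused|oom)\b", re.IGNORECASE
)


def recent_logs(container: str, since: str = "5m") -> list[str]:
    """Read recent log lines with the Docker CLI."""
    out = subprocess.run(
        ["docker", "logs", "--since", since, container],
        capture_output=True, text=True, check=True,
    )
    # docker logs replays the container's stderr on stderr, so keep both streams.
    return (out.stdout + out.stderr).splitlines()


def find_anomalies(lines: list[str], limit: int = 50) -> list[str]:
    hits = [line for line in lines if ANOMALY.search(line)]
    return hits[-limit:]  # keep the most recent hits so the prompt stays small


def build_log_prompt(container: str, hits: list[str]) -> str:
    return (
        f"These log lines from the Docker container '{container}' matched error "
        "keywords. Summarize the likely problem in two or three sentences.\n\n"
        + "\n".join(hits)
    )


def webhook_payload(text: str, kind: str = "discord") -> dict:
    # Discord webhooks take "content" (max 2000 chars); Slack incoming webhooks take "text".
    return {"content": text[:2000]} if kind == "discord" else {"text": text}


def post_webhook(url: str, payload: dict) -> None:
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        # Some endpoints reject urllib's default User-Agent, so set one explicitly.
        headers={"Content-Type": "application/json", "User-Agent": "homelab-log-sidecar"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30):
        pass
```

The prompt from `build_log_prompt` can go to any Ollama client, such as a plain POST to `/api/generate`. Filtering before the LLM keeps a small local model fast and stops it from "summarizing" thousands of healthy lines.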

Weekend bonus:

- Add alert thresholds or generate weekly health reports using LLM-generated summaries.
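For the alert-threshold idea, a sliding time window is one simple approach: count anomalies seen in the last few minutes and only alert (or only call the LLM) once the count crosses a limit. This is a minimal sketch with a hypothetical class name; the default of 10 anomalies in 5 minutes is an arbitrary starting point.

```python
import time
from collections import deque
from typing import Optional


class ErrorRateAlert:
    """Fire when more than `threshold` anomalies land within `window_s` seconds."""

    def __init__(self, threshold: int = 10, window_s: float = 300.0) -> None:
        self.threshold = threshold
        self.window_s = window_s
        self._events = deque()  # timestamps of recent anomalies, oldest first

    def record(self, ts: Optional[float] = None) -> bool:
        """Record one anomaly; return True if the window is now over the threshold."""
        now = time.time() if ts is None else ts
        self._events.append(now)
        # Drop events that have slid out of the window.
        while self._events and self._events[0] <= now - self.window_s:
            self._events.popleft()
        return len(self._events) > self.threshold
```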

Pro tip: This is a great project for practicing simple pipelines (Docker logs → parser → AI → actionable output) while keeping an eye on your environment.

5. Build a Local Voice Assistant with Whisper + Ollama + Piper

What it is: Instead of relying on cloud services, speak commands or questions out loud, transcribe them with Whisper, and get spoken answers from your local LLM and TTS engine.

Why it’s awesome:

- A completely local, privacy-respecting smart assistant.

- No cloud APIs, subscriptions, or tracking.

- You can customize the voice and language model.

Weekend components:

- Whisper for transcription.

- Ollama for the response (chat-style).

- Piper TTS for turning replies into speech.
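Wiring the three components together is mostly plumbing. The sketch below assumes the `openai-whisper` Python package is installed, that the `piper` CLI is on your PATH with a downloaded voice model (it reads text on stdin and writes a WAV file), and that you supply an `ask_llm` function that calls Ollama. Each stage is passed in as a function so you can swap engines or test the flow without a microphone. Audio capture and playback are left out, and all names here are illustrative.

```python
import subprocess
from functools import lru_cache
from typing import Callable


@lru_cache(maxsize=None)
def _whisper_model(name: str):
    import whisper  # imported lazily so the module loads without Whisper installed

    return whisper.load_model(name)


def transcribe_with_whisper(wav_path: str, model_name: str = "base") -> str:
    return _whisper_model(model_name).transcribe(wav_path)["text"].strip()


def piper_command(voice_model: str, out_wav: str) -> list[str]:
    # Piper reads text on stdin and writes the synthesized speech to a WAV file.
    return ["piper", "--model", voice_model, "--output_file", out_wav]


def speak_with_piper(text: str, voice_model: str, out_wav: str = "reply.wav") -> str:
    subprocess.run(piper_command(voice_model, out_wav), input=text, text=True, check=True)
    return out_wav


def voice_turn(
    wav_in: str,
    transcribe: Callable[[str], str],
    ask_llm: Callable[[str], str],
    speak: Callable[[str], str],
) -> str:
    """One request/response turn: speech -> text -> LLM reply -> speech file."""
    question = transcribe(wav_in)
    reply = ask_llm(question)
    return speak(reply)
```

Caching the Whisper model matters on modest hardware: loading it is far slower than transcribing a short clip.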

Bonus idea:

- Add wake word detection (e.g., via Porcupine) for a full Jarvis-like setup.

Fun fact: Piper’s voice models are lightweight and fast enough that you can even run them on a Raspberry Pi 5.

Wrapping up

As I have mentioned many times before, I am a strong advocate of project-based learning. I think it is the best way to learn new skills and technologies, because it lets us learn while accomplishing something worthwhile in the home lab. In many cases, all it takes is a weekend’s worth of time, on and off. You don’t have to block off an entire weekend, either: you can work in short learning sessions as you have time, spread across multiple weekends.

AI technologies are becoming more and more exciting, and they have tremendous potential to take mundane troubleshooting off your plate so you can focus on more projects, which is what we all want to do, right? Let me know in the comments if you are starting an AI-driven home lab learning project soon or have one underway now.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.