There is no question that the world of Kubernetes is evolving fast. The AI revolution has also brought some tremendously helpful tools for working with Kubernetes clusters. Most IDE coding tools now have AI chat built in, so you can bounce ideas off an AI engine for troubleshooting or get general help with syntax. However, Google just dropped kubectl-ai, a game-changing tool that I think represents a paradigm shift in how we manage Kubernetes clusters. Let me show you why.

What Is kubectl-ai?

First of all, what is kubectl-ai? It is basically a plugin for kubectl, written by Google. As most people familiar with Kubernetes know, kubectl is the de facto standard for working with Kubernetes clusters from the command line. If you are like me, you have likely already been using generative AI to help with Kubernetes troubleshooting, configuration, writing manifests, and so on.

However, bouncing between a browser window and your terminal, copying code out of a chat and pasting it into the command line on your Kubernetes node, is tedious and error-prone. The kubectl-ai plugin brings AI models directly into your kubectl workflow, so you can use them natively from the command line. This is a game changer.

Instead of typing out long, complex CLI commands or struggling to write valid YAML, you can ask questions or make requests in plain English, like the following:

- “Scale my frontend deployment to 5 replicas”

- “Show me all pods that failed in the last hour”

- “Generate a HorizontalPodAutoscaler YAML for the web-api deployment”

When you use natural language queries like these, kubectl-ai translates them into the kubectl commands needed to carry them out.
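To make the HPA request above concrete, here is roughly the kind of manifest you might expect back. This is a minimal sketch, not kubectl-ai's actual output: it assumes web-api is a Deployment in your current namespace, and the 2 to 5 replica range, the 70% CPU target, and the web-api-hpa.yaml file name are all hypothetical choices. The snippet just writes the manifest to a file so you can review it before applying anything.

```shell
# Write a minimal autoscaling/v2 HorizontalPodAutoscaler for the web-api Deployment.
# Replica bounds and the CPU target below are hypothetical example values.
cat > web-api-hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-api
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-api
  minReplicas: 2
  maxReplicas: 5
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
EOF
echo "Wrote web-api-hpa.yaml"
```

After reviewing it, you would apply it with kubectl apply -f web-api-hpa.yaml. For a CPU Utilization target to work, the cluster needs a metrics API provider such as metrics-server, and the web-api containers need CPU requests set.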

By default, it queries Gemini, but it can also query OpenAI, Grok, and even your own local LLMs! Very cool! We will see how to change the LLM provider below.

Lowering the barrier to entry with Kubernetes even further

Kubernetes is the de facto standard for container orchestration. However, it is infamously challenging to learn. To manage Kubernetes, you need a deep understanding of concepts and tools like:

- Command-line syntax (kubectl, kustomize, helm)

- YAML formatting and indentation

- Kubernetes resource definitions and APIs

- Troubleshooting using logs and events

With kubectl-ai, Google is reducing the operational overhead by letting users focus on intent instead of syntax.

This is a great opportunity for the following types of engineers who are looking to operationalize Kubernetes:

- Junior engineers who are still learning Kubernetes

- Platform teams who want to reduce repetitive tasks

- DevOps teams who need faster diagnostics and command generation

- Developers who prefer to interact with Kubernetes in a more human-friendly way

How kubectl-ai works

Here’s a simplified look at how it works in general:

- You install the `kubectl-ai` plugin on your local machine

- You provide your Gemini API key via an environment variable

- When you use the `kubectl ai` command, your prompt is sent to the Gemini model

- The model interprets your request and returns either:

  - A CLI command (like `kubectl get pods --namespace=web`)

  - A YAML manifest

  - An explanation or help text

- The response is printed to your terminal, and you can choose to copy, run, or refine it.

The plugin acts as an intelligent assistant, not just a code generator—it’s able to reference your current Kubernetes context and namespace to tailor its suggestions.

Installing kubectl-ai

You can find the official documentation on the official GitHub site for the tool: GoogleCloudPlatform/kubectl-ai: AI powered Kubernetes Assistant.

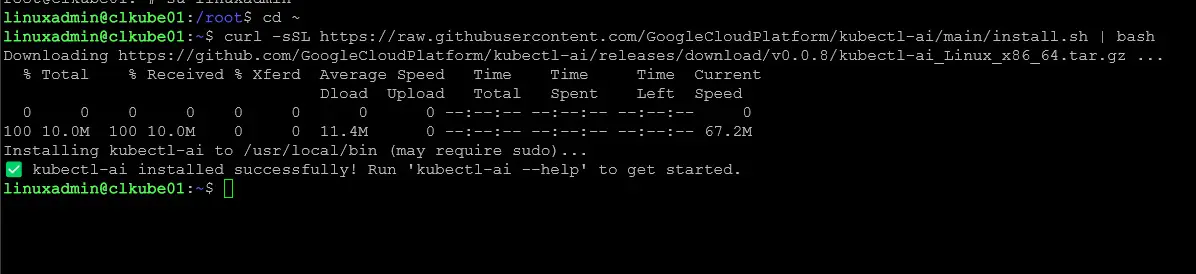

1. Install the Plugin

```shell
curl -sSL https://raw.githubusercontent.com/GoogleCloudPlatform/kubectl-ai/main/install.sh | bash
```

You can also manually install the tool from the releases page: Releases · GoogleCloudPlatform/kubectl-ai
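Once the install finishes, it is worth confirming that the binary actually landed on your PATH. kubectl discovers plugins by looking for executables named kubectl-<name> on your PATH, which is also what lets you call the tool as kubectl ai. Here is a minimal sketch of a hypothetical check_kubectl_ai helper (the name is my own, not part of the tool):

```shell
# Hypothetical helper: report where kubectl-ai is installed, or fail if it is missing.
# kubectl treats any executable named kubectl-<name> on PATH as a plugin.
check_kubectl_ai() {
  local bin_path
  # Assign separately from `local` so the exit status of `command -v` is preserved.
  if bin_path=$(command -v kubectl-ai); then
    printf 'kubectl-ai found at %s\n' "$bin_path"
  else
    printf 'kubectl-ai not found on PATH; check where the installer put it\n' >&2
    return 1
  fi
}
```

Run check_kubectl_ai right after installing. kubectl plugin list should also show kubectl-ai among the plugins it finds.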

2. Set your API key for your cloud service (Gemini, OpenAI) or point to a local LLM (Ollama)

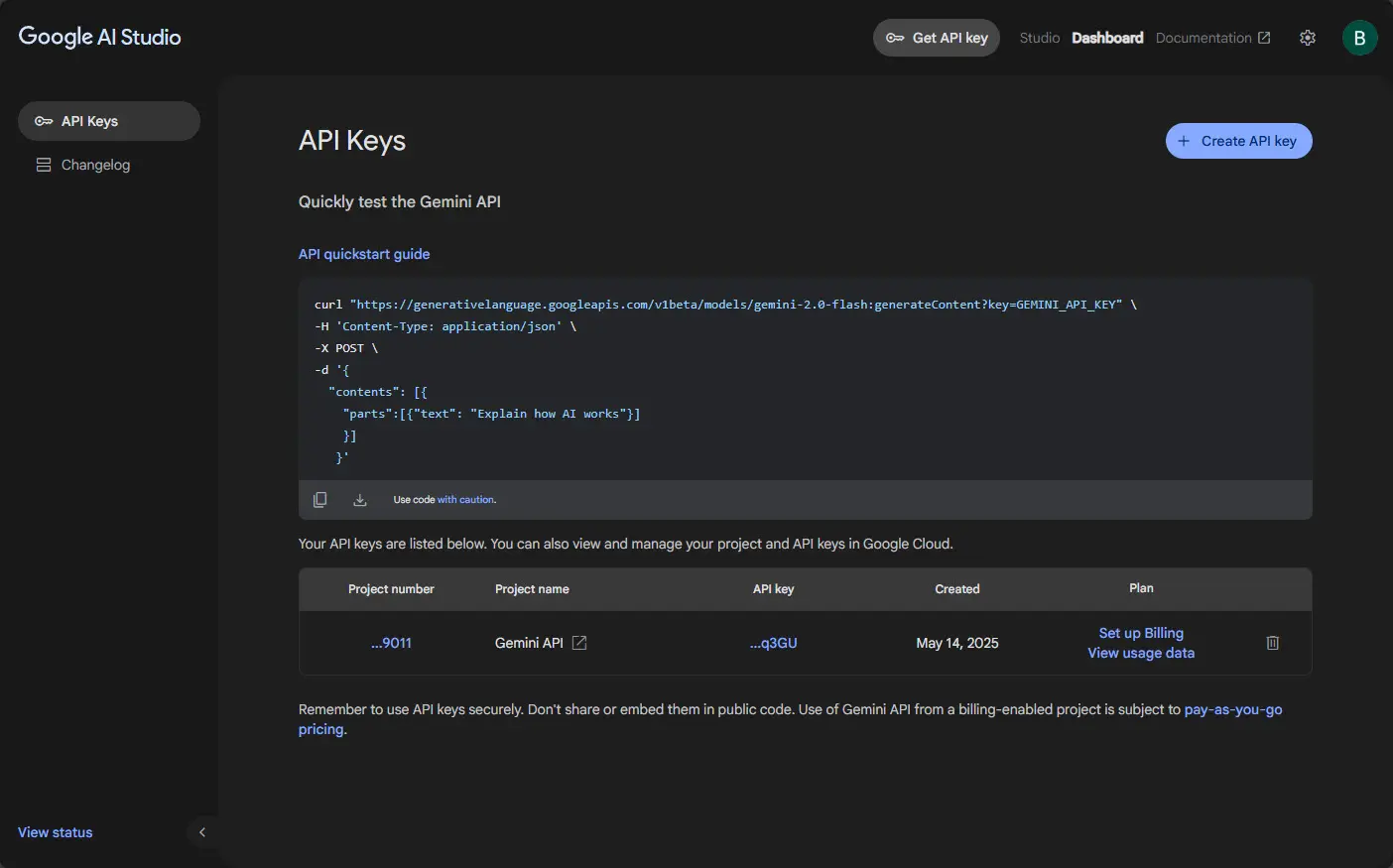

The default model is Google Gemini, which makes sense since this is a Google-produced tool. However, it is great that they also let you use the other big models and services out there. With any of the big cloud AI services, you will first need an API key.

You can get your Google API key here: Chat | Google AI Studio. Below, you can click the + Create API key button in the upper right-hand corner.

Export your API key using:

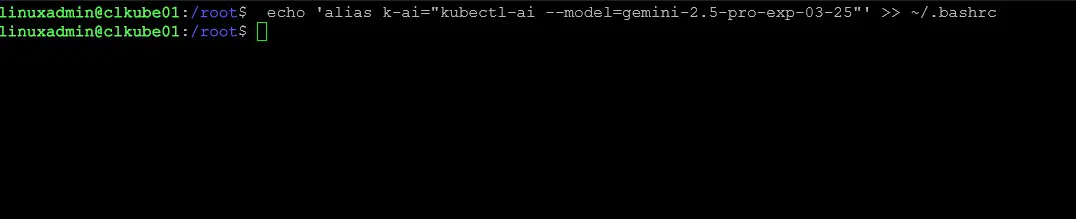

export GEMINI_API_KEY=your_api_key_hereI also have found that you can combine all the needed variables and create an alias for your command. Here I am using k-ai.

```shell
echo 'export GEMINI_API_KEY=your_api_key_here' >> ~/.bashrc && echo 'alias k-ai="kubectl-ai --model=gemini-2.5-flash-preview-04-17"' >> ~/.bashrc && source ~/.bashrc
```

This is the command for setting an alias pointed to OpenAI:

```shell
echo 'export OPENAI_API_KEY=your_openai_api_key_here' >> ~/.bashrc && echo 'alias k-ai="kubectl-ai --llm-provider=openai --model=gpt-4.1"' >> ~/.bashrc && source ~/.bashrc
```

If you want to use a local LLM, you can use the following (replace the address with your own Ollama host):

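```shell
echo 'export OLLAMA_HOST=http://192.168.1.3:11434/' >> ~/.bashrc && echo 'alias k-ai="kubectl-ai --llm-provider ollama --model llama3 --enable-tool-use-shim"' >> ~/.bashrc && source ~/.bashrc
```

Each of these commands defines the same k-ai alias, so run only the one for the provider you want. If you add more than one, the last alias in ~/.bashrc wins.

Running kubectl-ai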

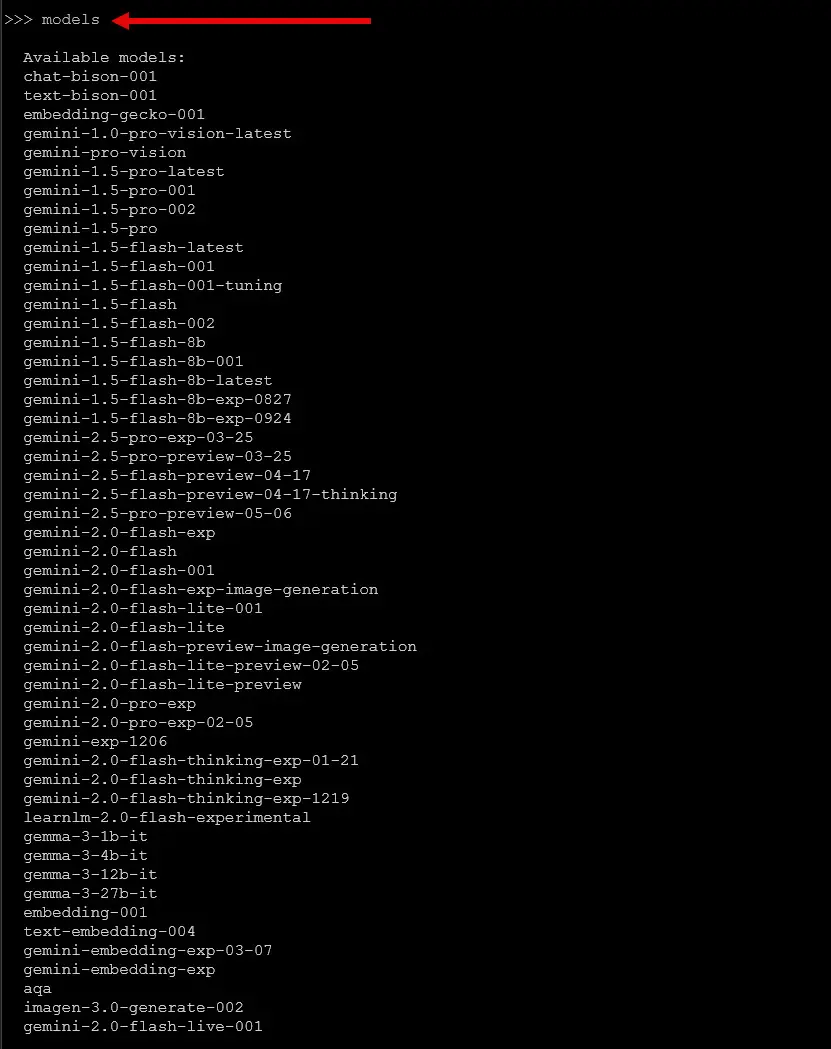

One easy way to check that kubectl-ai is working is to start an interactive kubectl-ai session and enter the models command:

```text
models
```

This is the output when it is pointed at Gemini:

Now you can test it out. If you created an alias like I did, you can use k-ai in place of kubectl ai in the examples below.

```shell
kubectl ai "Show me the logs for the nginx pod in the hello namespace"
```

The output might be something like:

```shell
kubectl logs -n hello $(kubectl get pods -n hello -l app=nginx -o jsonpath='{.items[0].metadata.name}')
```

You’re now using AI to translate plain English into exact Kubernetes commands.
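If kubectl-ai can't talk to your model, a common culprit is that the variable from the setup step is not set in your current shell (for example, because ~/.bashrc has not been re-sourced). Here is a minimal sketch of a hypothetical check_llm_env helper. It only knows about the three providers configured above and the variable names used in those commands.

```shell
# Hypothetical helper: confirm the variable a provider needs is set in this shell.
# Variable names match the exports used in the setup commands above.
check_llm_env() {
  local provider var value
  provider=${1:-gemini}
  case "$provider" in
    gemini) var=GEMINI_API_KEY ;;
    openai) var=OPENAI_API_KEY ;;
    ollama) var=OLLAMA_HOST ;;
    *) printf 'Unknown provider: %s\n' "$provider" >&2; return 2 ;;
  esac
  # Indirect lookup that works in any POSIX-style shell; $var is always one of
  # the fixed names above, so eval never sees arbitrary input.
  eval "value=\${$var:-}"
  if [ -n "$value" ]; then
    printf '%s is set\n' "$var"
  else
    printf '%s is not set; export it or run: source ~/.bashrc\n' "$var" >&2
    return 1
  fi
}
```

Call it as check_llm_env openai, check_llm_env ollama, or just check_llm_env, which defaults to gemini.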

Real-World Use Cases

Here are some scenarios where kubectl-ai can be particularly powerful:

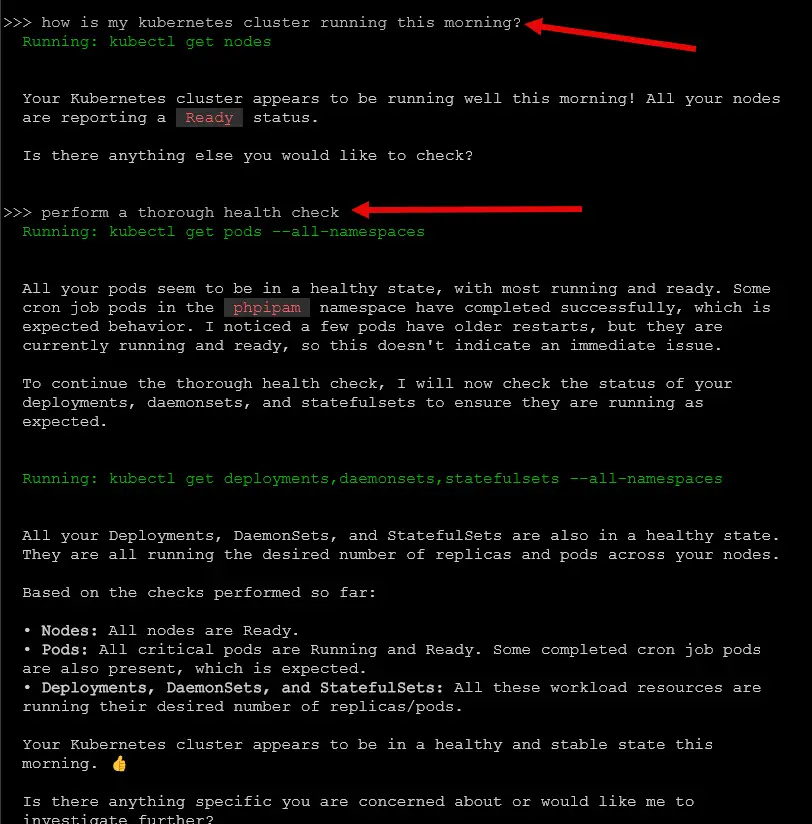

1. Perform health checks

One thing I have really liked doing so far with kubectl-ai is using it to perform health checks, looking at the cluster and its resources as a whole to spot anything that needs attention. Below, you can see the prompts I used.

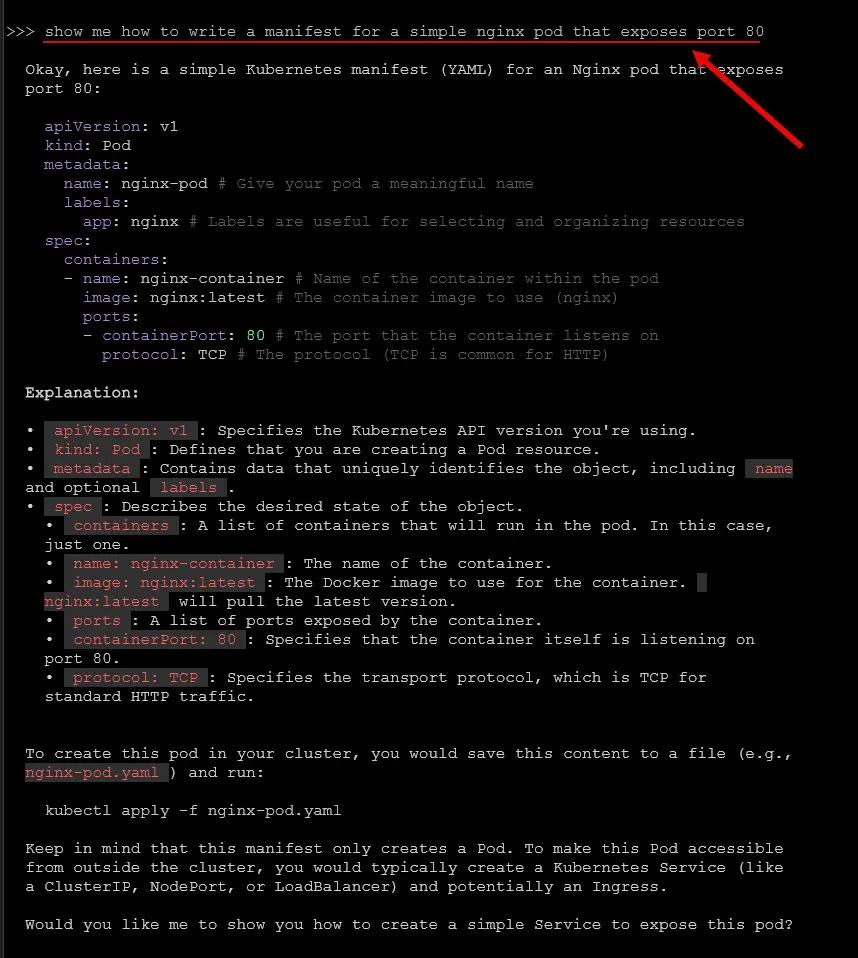
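If you want to cross-check a health summary from kubectl-ai against kubectl's own output, a plain pipeline works too. This minimal sketch uses a hypothetical nodes_needing_attention function and assumes the default kubectl get nodes column layout (NAME, STATUS, ROLES, AGE, VERSION). It flags any node whose STATUS is not exactly Ready, which includes cordoned nodes shown as Ready,SchedulingDisabled.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin and
# print the name and status of every node whose STATUS column is not exactly "Ready".
nodes_needing_attention() {
  awk '$2 != "Ready" { print $1, $2 }'
}
```

Usage: kubectl get nodes --no-headers | nodes_needing_attention. No output means every node reports Ready.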

2. Writing YAML Manifests

Remembering the syntax for a CronJob, Ingress, or HPA can be tedious. kubectl-ai can generate boilerplate manifests that you can tweak yourself or ask it to refine as needed.

```shell
kubectl ai "show me how to write a manifest for a simple nginx pod that exposes port 80"
```
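For comparison, a minimal answer to that prompt looks something like the Pod below. Treat it as a sketch rather than what kubectl-ai will return verbatim: the nginx:stable image tag and the nginx-pod.yaml file name are my own assumptions, and the model may also suggest a Service.

```shell
# Write a minimal single-container Pod manifest that declares port 80.
# The image tag and file name are hypothetical choices for this example.
cat > nginx-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:stable
      ports:
        - containerPort: 80
EOF
echo "Wrote nginx-pod.yaml"
```

Review the file, then apply it with kubectl apply -f nginx-pod.yaml. Keep in mind that containerPort is informational; to reach the pod from your workstation without a Service, you can use kubectl port-forward pod/nginx 8080:80.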

3. Troubleshooting and Diagnostics

Let’s say you need to find any pods that are stuck in a crash loop or that have crashed recently. Here is an example of one of those prompts:

```shell
kubectl ai "List all pods that are stuck in a CrashLoopBackOff in any namespace"
```

Google has designed the tool to be aware of Kubernetes terminology and patterns, which helps it suggest the right commands for tracking down issues.
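It also helps to know a plain kubectl equivalent, so you can check what the tool comes back with. Here is a minimal sketch of a hypothetical crashloop_pods filter, assuming the default kubectl get pods -A column layout (NAMESPACE, NAME, READY, STATUS, ...). A crash-looping pod can briefly show Error or another status between restarts, so this only catches pods currently reporting CrashLoopBackOff.

```shell
# Hypothetical helper: read `kubectl get pods -A --no-headers` output on stdin and
# print namespace/name for every pod whose STATUS column is CrashLoopBackOff.
crashloop_pods() {
  awk '$4 == "CrashLoopBackOff" { print $1 "/" $2 }'
}
```

Usage: kubectl get pods -A --no-headers | crashloop_pods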

4. Simplifying Repetitive Tasks

If you want to scale a deployment or delete a specific service, this is easy work for kubectl-ai.

```shell
kubectl ai "Scale the payment-service deployment to 6 replicas"
```

Or:

```shell
kubectl ai "Delete the NodePort service called test-service in staging namespace"
```

What about the security of kubectl-ai?

Is it safe to use kubectl-ai in production environments? This is a good question. Here are a few things to note about the tool.

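- No cluster access by the AI model: The model itself never connects to your Kubernetes cluster. Any kubectl commands run locally on your machine, using your own credentials.

- Context awareness is local: Your kubeconfig is not sent to Google. It’s used locally to shape the responses.

- Prompts leave your machine: As with any generative AI tool, your prompts are sent to the model provider you configured (Gemini by default), along with any command output the tool passes back to the model as context. Given this, you don’t want to include any sensitive information in your prompts. With a local LLM through Ollama, this traffic stays on your own network.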

For stricter or more controlled environments, kubectl-ai is probably still best used in dev/test environments instead of using it in production.

Still things to be aware of

As we all know by now, generative AI is a powerful tool that can do many things. Keep in mind though that kubectl-ai is still subject to the same limitations that all generative AI tools have at this point:

- Hallucinations: Like any LLM, Gemini and the other models you can plug in may occasionally give you wrong or even risky commands. Always verify the output before executing any AI suggestion.

- Prompt clarity: Vague or ambiguous prompts can lead to unclear or outright bad results.
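A simple habit guards against both problems: never paste an AI-suggested command straight into your shell. Here is a minimal sketch of a hypothetical confirm_run wrapper that shows the exact command and only runs it after you type y. It passes the arguments through as-is instead of using eval, so nothing gets re-parsed.

```shell
# Hypothetical helper: print a command, ask for confirmation, and run it only on "y".
confirm_run() {
  local answer
  # Prompts go to stderr so they do not mix with the command's own output.
  printf 'About to run: %s\n' "$*" >&2
  printf 'Proceed? [y/N] ' >&2
  read -r answer || answer=""
  case "$answer" in
    y|Y|yes|YES)
      # Run the arguments exactly as given: no eval, no second round of parsing.
      "$@"
      ;;
    *)
      printf 'Skipped.\n' >&2
      return 1
      ;;
  esac
}
```

For example: confirm_run kubectl scale deployment/payment-service --replicas=6. Any $(...) substitution in the arguments is expanded by your shell before the prompt appears. For extra safety, mutating commands such as kubectl apply and kubectl delete also accept --dry-run=server, so you can see what the API server would do first.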

How kubectl-ai fits into the DevOps future

I think kubectl-ai is an example of how AI assistance is being integrated natively into the tools we use to manage our infrastructure. I expect we will see tighter and better AI assistance built directly into the software and infrastructure we are managing.

We’re seeing this across:

- GitHub Copilot for code

- Amazon Q for AWS resources

- Microsoft Copilot for Azure CLI and services

- OpenAI DevDay tools for shell commands

Google’s kubectl-ai is already proving itself to be a powerful tool for Kubernetes environments. In the future, I think we can expect tighter integrations and other features like identity awareness, and possibly auto-executed commands with confirmation.

Do try this in your home lab!

I do think this is an extremely valuable and important tool for learning, especially in the home lab. This tool lowers the bar for learning Kubernetes even further. One of the exciting things we are seeing is how AI tools are getting closer and closer to the source of what we need help with, even getting integrated natively.

Now it means we don’t have to copy and paste commands from other tools to try in our Kubernetes clusters at home. We can simply use AI natively at the command line and more quickly and efficiently get results.

Wrapping up

I am really excited about kubectl-ai and what it means for home lab environments as well as production Kubernetes environments. It makes Kubernetes easier to operationalize and troubleshoot, without the syntax and other nitty-gritty details that can stand in the way of getting to the root of a problem or figuring out how to do something in the cluster. It is also super cool that the tool is free and open source, and that Google designed it to work with basically any LLM instead of locking it to Gemini. Let me know your thoughts. Have you used this yet?

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.