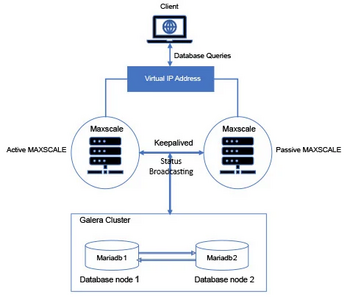

I am trying to set up a MariaDB HA environment in my Kubernetes (K8s) cluster. So far I have 2 Galera clusters and 2 MaxScale instances running. The diagram shows what I am trying to build.

I'm struggling to set up a VIP using kube-vip for the MaxScale load balancers.

I saw your YouTube video about Easy k3s Kubernetes Tools with K3sup and Kube-VIP, but it left me with many questions. Do I need K3s? Can I not use my existing K8s cluster? From their docs it sounds like I can install it as a static pod, but I am unsure whether the video deploys it as a static pod or a DaemonSet (and which one I need for the setup above).

I also wanted to note that, looking back at my environment, I have MetalLB using ARP. Could I create the virtual IP with MetalLB instead? Or should I really consider kube-vip for my HA?

P.S. Please excuse my lack of DevOps experience (I'm quite new to all this).

I'm struggling to understand why we would use kube-vip over MetalLB. MetalLB seems like a similar solution.

Also, MetalLB is really meant for handing out IP addresses like DHCP (not technically but it behaves like this) for your Kubernetes services that are self-hosted. However, this IP address should follow the service no matter which host it lives on.

Are you suggesting I should not use MetalLB? I don't intend to, but without a successful kube-vip setup I'm beginning to struggle with how the components fit together. I'm using MaxScale (in favor of the usual HAProxy, as it provides optimizations for MariaDB that we use).

Also, with Kube-Vip, only one host "owns" that virtual IP address at any one time. So you would be funneling your traffic to the one IP address of the master node.

Agreed. That is how I interpreted it: one host, with one backup, holding the virtual IP for automatic failover.
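To make the "one owner at a time" idea concrete, here is a toy Python model of it. This is only a sketch and not how kube-vip is implemented: the `VipGroup` class, the node names, and the `192.0.2.10` address are all made up for illustration, and list order stands in for real leader election.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class VipGroup:
    """Toy model: at most one healthy node holds the VIP at any time."""
    vip: str                      # hypothetical virtual IP
    nodes: List[str]              # candidate hosts, in priority order
    failed: Set[str] = field(default_factory=set)

    def owner(self) -> Optional[str]:
        # The first node that has not failed "owns" the VIP.
        # Real leader election does not work by list order; this is a stand-in.
        for node in self.nodes:
            if node not in self.failed:
                return node
        return None  # nobody left to answer for the VIP

    def fail(self, node: str) -> None:
        # Mark a node as down; ownership moves to the next candidate.
        self.failed.add(node)


group = VipGroup(vip="192.0.2.10", nodes=["node-a", "node-b"])
print(group.owner())   # node-a
group.fail("node-a")
print(group.owner())   # node-b
```

The point is only that clients keep talking to the same address while the host answering for it changes.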

Kube-Vip should work with vanilla Kubernetes. Also, Kube-Vip functionality has been extended to include not only control plane load balancing, but also load balancing for any services of type LoadBalancer.
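As a rough illustration of what a service of type LoadBalancer looks like for this use case, the Python sketch below builds a Service manifest as a plain dictionary and prints it as JSON (which `kubectl apply -f` accepts as well as YAML). The service name, the `app` label, the port `3306`, and the address `192.0.2.10` are assumptions for the example, not values from this thread. Whether the requested address in `spec.loadBalancerIP` is honored depends on the load balancer implementation and its version.

```python
import json


def maxscale_service(name: str, vip: str, port: int) -> dict:
    """Build a Service of type LoadBalancer for a MaxScale listener (sketch)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            # Requested external address; hypothetical value in this example.
            "loadBalancerIP": vip,
            # Assumes the MaxScale pods carry the label app=<name>.
            "selector": {"app": name},
            "ports": [
                {"name": "mariadb", "protocol": "TCP",
                 "port": port, "targetPort": port}
            ],
        },
    }


manifest = maxscale_service("maxscale", "192.0.2.10", 3306)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied to the cluster once the names and address are replaced with real ones.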

Can you clarify whether I have this correct? The control plane is a cluster of nodes separate from the Docker nodes in my K8s cluster. When I type kubectl get nodes I see the Docker nodes in my setup. These Docker nodes run containers, whereas control plane nodes are a separate entity for the VIP and load balancing. One control plane cluster = 3 control plane nodes = 1 VIP? In the future, if we expand services, do I need to deploy another control plane node to enable a second VIP?

What type of issues did you run into?

I don't know how to deploy Kube-Vip to my K8s cluster. I know we have 6 Docker K8s VM nodes deployed. So it sounds like I need to deploy 3 Ubuntu nodes and run k3sup on each, as suggested in your video?

For ease, I have compiled my notes on the environment I am designing; they are documented and public on my GitHub: https://github.com/advra/mariadb-high-availibility . Feel free to provide feedback. The design borrows ideas from older articles I've come across, with High Availability in an Active/Active configuration in mind.

@brandonlee

You can have a separate control plane cluster from the workload cluster. However, this isn't necessarily a requirement. You CAN run workloads on control plane nodes. This is typically what most do with small clusters. However, for larger production deployments, you will see the control plane nodes designated for only that role.

Sorry, still figuring this out. So can I designate 3 of the top 4 Docker nodes as the kube-vip control plane?

    $ k get nodes
    NAME                   STATUS   ROLES    AGE    VERSION
    sb1ldocker01.xyznet    Ready    <none>   599d   v1.21.8-mirantis-1
    sb1ldocker02.xyz.net   Ready    <none>   599d   v1.21.8-mirantis-1
    sb1ldocker03.xyz.net   Ready    <none>   599d   v1.21.8-mirantis-1
    sb1ldocker04.xyz.net   Ready    <none>   314d   v1.21.8-mirantis-1
    sb1ldtr01.xyz.net      Ready    <none>   587d   v1.21.8-mirantis-1
    sb1ldtr02.xyz.net      Ready    <none>   587d   v1.21.8-mirantis-1
    sb1ldtr03.xyz.net      Ready    <none>   587d   v1.21.8-mirantis-1
    sb1lucp01.xyz.net      Ready    master   700d   v1.21.8-mirantis-1
    sb1lucp02.xyz.net      Ready    master   700d   v1.21.8-mirantis-1
    sb1lucp03.xyz.net      Ready    master   700d   v1.21.8-mirantis-1
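The ROLES column in the listing above already shows which nodes are control plane nodes and which are not. As a small sketch, the Python below groups the nodes by that column. Treating `<none>` as "worker" is my own labeling for readability, and `nodes_by_role` is a hypothetical helper, not part of any tool.

```python
from collections import defaultdict
from typing import Dict, List

# Node listing copied from the output above.
KUBECTL_OUTPUT = """\
NAME STATUS ROLES AGE VERSION
sb1ldocker01.xyznet Ready <none> 599d v1.21.8-mirantis-1
sb1ldocker02.xyz.net Ready <none> 599d v1.21.8-mirantis-1
sb1ldocker03.xyz.net Ready <none> 599d v1.21.8-mirantis-1
sb1ldocker04.xyz.net Ready <none> 314d v1.21.8-mirantis-1
sb1ldtr01.xyz.net Ready <none> 587d v1.21.8-mirantis-1
sb1ldtr02.xyz.net Ready <none> 587d v1.21.8-mirantis-1
sb1ldtr03.xyz.net Ready <none> 587d v1.21.8-mirantis-1
sb1lucp01.xyz.net Ready master 700d v1.21.8-mirantis-1
sb1lucp02.xyz.net Ready master 700d v1.21.8-mirantis-1
sb1lucp03.xyz.net Ready master 700d v1.21.8-mirantis-1
"""


def nodes_by_role(output: str) -> Dict[str, List[str]]:
    """Group node names by the ROLES column of a `kubectl get nodes` listing."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for line in output.strip().splitlines()[1:]:  # skip the header row
        name, _status, roles, _age, _version = line.split()
        # Assumption: nodes with no role label are treated as workers.
        role = "worker" if roles == "<none>" else roles
        groups[role].append(name)
    return dict(groups)


for role, names in nodes_by_role(KUBECTL_OUTPUT).items():
    print(role, len(names), names)
```

On this listing that gives 7 nodes without a role and 3 `master` nodes (the `sb1lucp0x` hosts), so the cluster already has a three-node control plane.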

Coming back to this: I wasn't able to work on it until yesterday. The biggest confusion before was the deployment, but I now understand the kube-vip configuration modes. You can deploy kube-vip as a single-pod deployment (static pod) or as a multi-pod load balancer (DaemonSet). You can also target either master or worker nodes, for a total of 4 base configurations.

For HA we'd want the DaemonSet. Since MaxScale is on the service layer, we only need the DaemonSet on the worker nodes. I have now successfully deployed a VIP in my environment that points to MaxScale, through which I can access the multi-node Galera cluster.

Great!

Now I do have a question about MaxScale. I currently have one instance deployed with a custom Helm chart I created that uses a StatefulSet. Is a StatefulSet appropriate, or should I use a single Deployment? To me, the StatefulSet made the most sense in a load balancer configuration, as all instances would have the same configuration and all point to the same VIP. This makes expanding or shrinking the number of MaxScale instances straightforward. But I have doubts about how the VIP manages connections (or perhaps that's something kube-vip does in the background by routing traffic to the correct MaxScale instance).