AI coding assistants are all the rage these days, from vibe coding to helping level the playing field for those who aren’t developers. For those of us in the home lab, they are a great way to level up with automation, Kubernetes, CI/CD, and other tasks that might otherwise be challenging. GitHub Copilot provides AI-driven code completion directly integrated with many IDEs. However, it relies on cloud-hosted models, which raises concerns for many about privacy, latency, and dependence on external resources. Fortunately, there are several really good self-hosted alternatives. Let’s look at the best self-hosted GitHub Copilot AI coding alternatives.

1. Tabby

One of the first we want to take a look at is Tabby. It is a powerful, highly capable AI coding assistant that you can self-host, and it has a Community edition that is completely free. Self-hosting Tabby brings privacy and customizability to your AI coding assistant and removes the reliance on cloud resources.

The official site is here: Tabby – Opensource, self-hosted AI coding assistant.

Key Features of Tabby:

- Local Model Hosting: Tabby runs entirely locally. It uses open-source language models such as StarCoder, Code Llama, and Qwen, or custom-trained LLMs that you might want to use.

- IDE Integration: It integrates with editors and IDEs such as VS Code, Vim, IntelliJ IDEA, and PyCharm, where it provides real-time suggestions, completions, and inline documentation.

- Privacy: All data processing stays on your own hardware and network, so you keep complete control over your source code and its privacy.
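Tabby's IDE extensions talk to the server over HTTP, so you can exercise the same path from a terminal. The sketch below assumes a server like the one started with the `docker run` command in this section, listening on `localhost:8080`, and uses Tabby's `/v1/health` and `/v1/completions` endpoints with a request body made of `language` plus `segments.prefix` and `segments.suffix` (the code before and after the cursor). The `TABBY_URL` and `TABBY_TOKEN` variables and the `json_escape` and `tabby_payload` helpers are hypothetical names for illustration. Recent Tabby versions may require an auth token from the web UI, so check the API documentation for your version.

```shell
#!/usr/bin/env bash
# Sketch: request a code completion from a self-hosted Tabby server.
# Assumptions: the server listens on localhost:8080, and TABBY_TOKEN
# (hypothetical variable) is only needed if your Tabby version enforces auth.
TABBY_URL="${TABBY_URL:-http://localhost:8080}"

# Escape a string so it can sit inside a JSON string literal.
json_escape() {
  local s=$1
  s=${s//'\'/'\\'}       # backslashes first, so later escapes are not doubled
  s=${s//'"'/'\"'}       # double quotes
  s=${s//$'\n'/'\n'}     # newlines
  s=${s//$'\t'/'\t'}     # tabs
  printf '%s' "$s"
}

# Build a completion request: language plus the code before/after the cursor.
tabby_payload() {
  printf '{"language":"%s","segments":{"prefix":"%s","suffix":"%s"}}' \
    "$1" "$(json_escape "$2")" "$(json_escape "$3")"
}

# Optional bearer token header.
auth_header=()
if [ -n "${TABBY_TOKEN:-}" ]; then
  auth_header=(-H "Authorization: Bearer ${TABBY_TOKEN}")
fi

prefix=$'def fib(n):\n    '
if curl -fsS --connect-timeout 5 "${auth_header[@]}" "${TABBY_URL}/v1/health" >/dev/null 2>&1; then
  curl -sS "${auth_header[@]}" -H 'Content-Type: application/json' \
    -d "$(tabby_payload python "$prefix" '')" \
    "${TABBY_URL}/v1/completions"
  echo
else
  echo "Tabby server not reachable at ${TABBY_URL}" >&2
fi
```

The escaping order matters: backslashes are doubled before quotes are escaped, otherwise the backslashes added for quotes would be doubled too.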

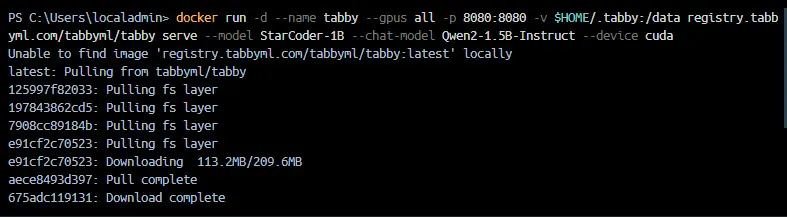

If you are already familiar with self-hosting and Docker deployments, the Tabby install is straightforward.

The setup in the screenshot above uses this command:

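```shell
# Run Tabby in the background with GPU acceleration, storing data in ~/.tabby.
# StarCoder-1B handles code completion; Qwen2-1.5B-Instruct handles chat.
docker run -d \
  --name tabby \
  --gpus all \
  -p 8080:8080 \
  -v $HOME/.tabby:/data \
  registry.tabbyml.com/tabbyml/tabby \
  serve \
  --model StarCoder-1B \
  --chat-model Qwen2-1.5B-Instruct \
  --device cuda
```

2. FauxPilot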

FauxPilot is a self-hosted GitHub Copilot alternative. It provides features similar to Copilot while keeping your code and data private. Developers can use it with capable AI models without relying on cloud APIs.

Visit the official GitHub site here: fauxpilot/fauxpilot: FauxPilot – an open-source alternative to GitHub Copilot server.

FauxPilot server setup

A setup script lets you choose which model to use. It downloads the model from Hugging Face (moyix's repositories) in GPT-J format and then converts it for use with FasterTransformer. Take a look at the official documentation on that here: How to set-up a FauxPilot server.

FauxPilot client setup

You can connect a client through the OpenAI API, the Copilot plugin, or the REST API. Take a look at the official documentation from FauxPilot here: How to set-up a client.
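As a sketch of the REST route, assuming a server started with the repository's `./setup.sh` and `./launch.sh` scripts on the default port 5000, the request below mirrors the curl example in the FauxPilot README: an OpenAI-style completion posted to `/v1/engines/codegen/completions`. The `FAUXPILOT_URL` variable and the `fauxpilot_payload` helper are hypothetical; adjust the port and engine name if your setup differs.

```shell
#!/usr/bin/env bash
# Sketch: OpenAI-style completion request against a local FauxPilot server.
# Assumptions: the server listens on localhost:5000 and serves the "codegen"
# engine, as in the FauxPilot README examples.
FAUXPILOT_URL="${FAUXPILOT_URL:-http://localhost:5000}"

# Build the JSON body, escaping backslashes, quotes, and newlines in the prompt.
fauxpilot_payload() {
  local prompt=$1 max_tokens=${2:-100}
  prompt=${prompt//'\'/'\\'}
  prompt=${prompt//'"'/'\"'}
  prompt=${prompt//$'\n'/'\n'}
  # The stop sequence is a blank line ("\n\n"), which usually ends a block.
  printf '{"prompt":"%s","max_tokens":%d,"temperature":0.1,"stop":["\\n\\n"]}' \
    "$prompt" "$max_tokens"
}

curl -sS --connect-timeout 5 -H 'Content-Type: application/json' \
  -d "$(fauxpilot_payload 'def hello_world():' 64)" \
  "${FAUXPILOT_URL}/v1/engines/codegen/completions" \
  || echo "FauxPilot server not reachable at ${FAUXPILOT_URL}" >&2
```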

Key Features of FauxPilot:

- Works with Copilot API: FauxPilot works with IDEs and plugins that are already compatible with Copilot, which makes integration easy.

- Models to choose from: Users can choose from several local language models, such as the Salesforce CodeGen family that the setup script fetches in GPT-J format.

- Community: The open-source community around the project contributes updates, improvements, and troubleshooting help for users.

FauxPilot works well for developers who want an open-source coding assistant with full control over their data privacy and model choice.

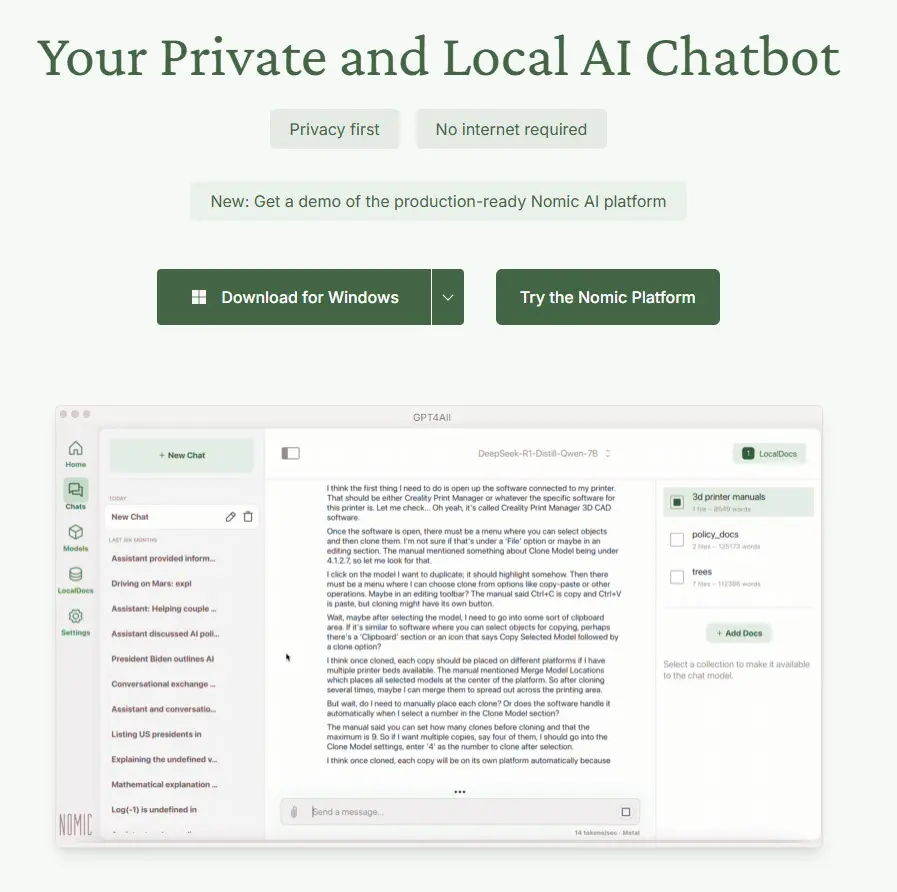

3. GPT4All

GPT4All is another really good solution that lets you run a suite of open-source language models for local, private chat. It was initially focused on chat and generative AI, but it also works well for code generation and assistance in a developer's workflow.

View the official site here: GPT4All – The Leading Private AI Chatbot for Local Language Models. It is free to download for Windows, macOS, and Linux.

Key Features of GPT4All:

- Extensive Model Support: Offers a variety of open-source models optimized for different tasks, including coding-specific models like Code Llama.

- Offline Operation: Completely self-contained and offline-capable, GPT4All ensures your code stays secure, never leaving your local environment.

- Ease of Setup: With straightforward installation procedures and Docker-based deployments, GPT4All is highly accessible for various skill levels.

GPT4All shines in environments where internet access is limited or controlled, making it perfect for home lab enthusiasts or secure professional setups.
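GPT4All can also expose an optional local API server that speaks an OpenAI-style protocol, which lets scripts and editor tools reach your local models. The sketch below assumes you have enabled the Local API Server in GPT4All's settings, that it listens on its default port 4891, and that `GPT4ALL_MODEL` is set to the exact name of a model you have downloaded. `YOUR-MODEL-NAME`, `GPT4ALL_URL`, and the `chat_payload` helper are hypothetical placeholders; verify the port and endpoints against the GPT4All documentation for your version.

```shell
#!/usr/bin/env bash
# Sketch: chat request to GPT4All's optional local API server.
# Assumptions: "Enable Local API Server" is on, the default port 4891 is used,
# and GPT4ALL_MODEL names a downloaded model (the default is a placeholder).
GPT4ALL_URL="${GPT4ALL_URL:-http://localhost:4891}"
GPT4ALL_MODEL="${GPT4ALL_MODEL:-YOUR-MODEL-NAME}"

# Build an OpenAI-style chat body with a single user message.
chat_payload() {
  local model=$1 content=$2 max_tokens=${3:-256}
  content=${content//'\'/'\\'}
  content=${content//'"'/'\"'}
  content=${content//$'\n'/'\n'}
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"max_tokens":%d,"temperature":0.2}' \
    "$model" "$content" "$max_tokens"
}

# List available models first; this also confirms the server is up.
if curl -fsS --connect-timeout 5 "${GPT4ALL_URL}/v1/models"; then
  echo
  curl -sS -H 'Content-Type: application/json' \
    -d "$(chat_payload "$GPT4ALL_MODEL" 'Write a bash function that checks whether a TCP port is open.')" \
    "${GPT4ALL_URL}/v1/chat/completions"
  echo
else
  echo "GPT4All API server not reachable at ${GPT4ALL_URL}" >&2
fi
```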

4. Continue

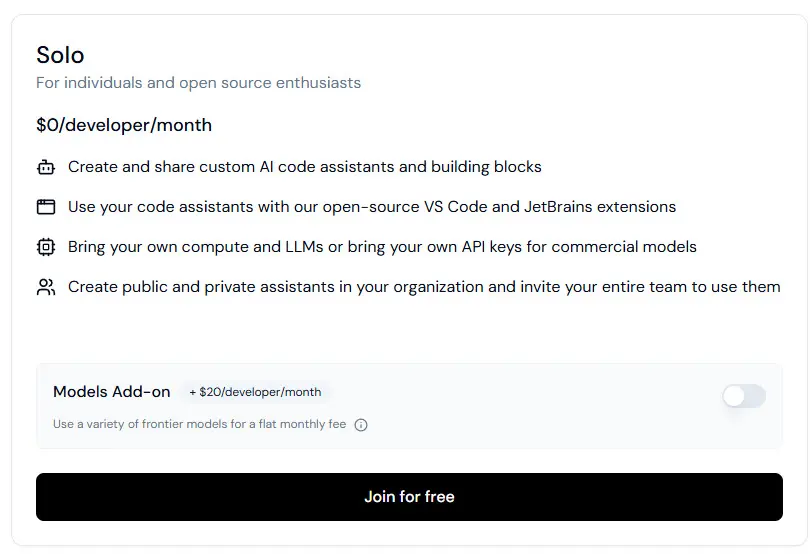

Continue AI is a solution built for developers. It allows them to create and share custom AI code assistants with open-source VS Code and JetBrains extensions. You have access to a hub of models, rules, prompts, docs, and other resources when using Continue.

Take a look at the official site here: Continue. For homelabbers and self-hosters, they have a “Solo” offering that is free.

Key Features of Continue:

- Chat: Understand and iterate on code in the sidebar.

- Autocomplete: Receive inline code suggestions as you type.

- Edit: Modify code without leaving your current file.

- Agent: Make more substantial changes to your codebase.

- Local AI Models: It supports local language models such as Code Llama and other open LLMs.

Continue is a solid choice for developers heavily invested in VS Code or JetBrains IDEs who want a powerful, private coding assistant without cloud-based dependencies.

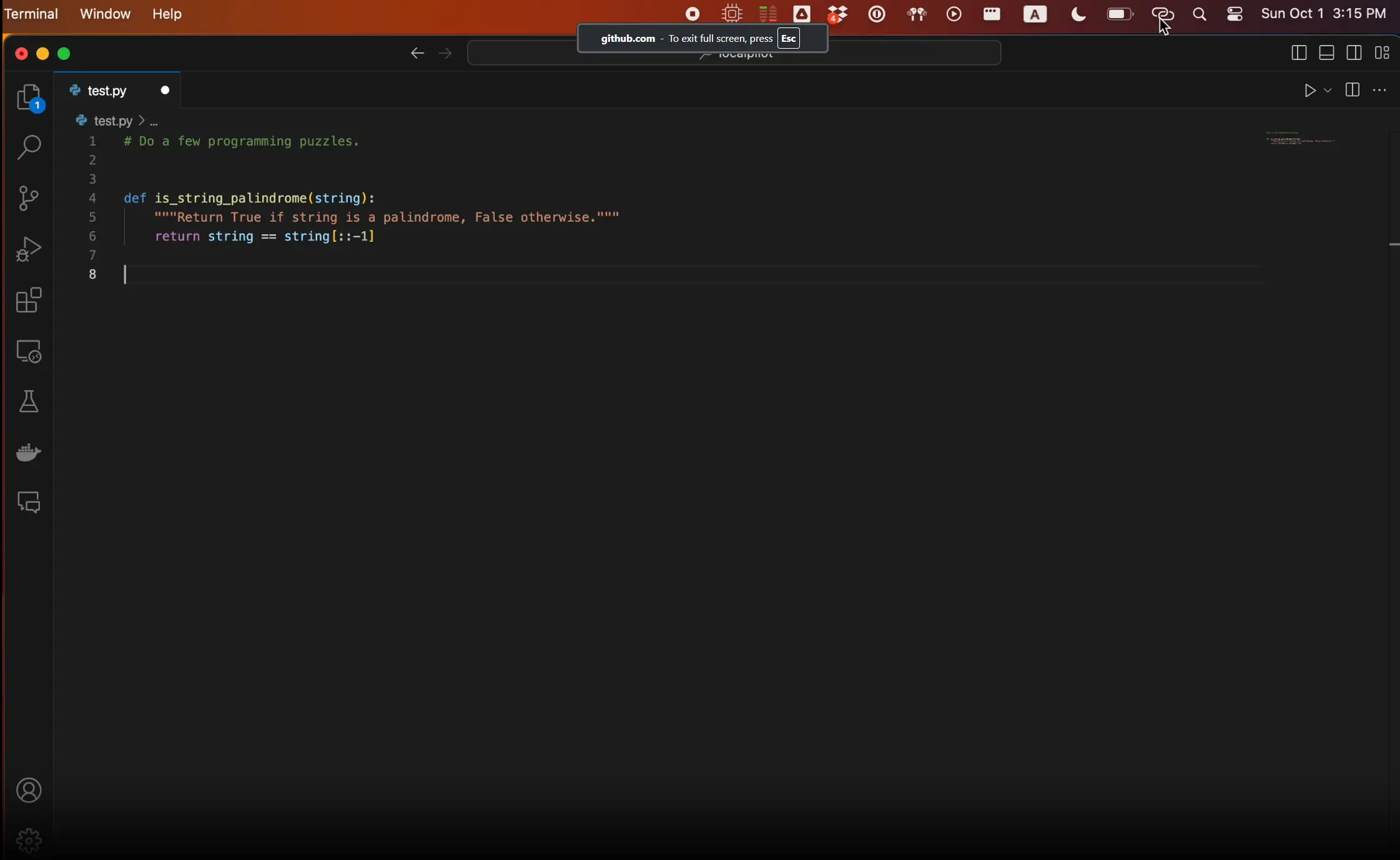

5. LocalPilot

Another very cool implementation for Mac environments is LocalPilot by Daniel Gross. It is designed to be a lightweight, privacy-conscious, local alternative to GitHub Copilot.

Visit the official GitHub repo here: danielgross/localpilot.

Key Features of LocalPilot:

- Privacy: It prioritizes fully local deployment and operation for privacy and data security.

- Open Source: It is fully open-source and community-driven, which means transparent development and community involvement. However, the last commit appears to be about two years old, so unfortunately the project may have gone stale.

- Mac only: At this time, it appears to support Macs only.

This is definitely one to check out and play around with. It looks like a very cool way to run a Copilot-style assistant locally, although the project's staleness is a concern.

What is the best solution?

Best is always a subjective answer, depending on what you are trying to achieve and what your use case may be.

- Privacy and Security: All of these solutions (Tabby, FauxPilot, GPT4All, Continue, and LocalPilot) let you host locally and keep complete control of your data.

- Integration: Tabby and Continue arguably provide the broadest IDE support, while FauxPilot is directly compatible with existing Copilot integrations.

- Resource availability: Consider your available hardware resources. GPT4All and LocalPilot provide efficient deployment even on modest hardware.

- Community and support: Check how active each project is. An active community means continued improvements, troubleshooting help, and updates, whereas LocalPilot, for example, has not seen a commit in about two years.

Potential downsides

I am super excited about local LLMs and AI hosting, but there are definitely downsides to self-hosting your coding assistants. The performance of local AI models depends directly on your hardware, especially GPUs. What I have found is that many local models, running on the consumer GPUs most of us have in our home labs, don’t perform as well as cloud offerings. This may seem counterintuitive, but most self-hosted environments are limited by GPU resources, especially VRAM.
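To make the VRAM point concrete, a common rule of thumb is that model weights alone need roughly parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule and, if `nvidia-smi` is available, prints how much VRAM your NVIDIA GPU has. Treat the result as a lower bound: the context (KV) cache and runtime overhead need extra headroom that this estimate ignores. The `weights_mb` function and the sample model sizes are illustrative assumptions, not measured figures.

```shell
#!/usr/bin/env bash
# Rough lower bound on VRAM needed just to hold model weights:
#   bytes ~= parameters * bits_per_weight / 8
# KV cache, context length, and runtime overhead add more on top.

# weights_mb PARAMS_IN_MILLIONS BITS_PER_WEIGHT -> approximate MB (10^6 bytes)
weights_mb() {
  echo $(( $1 * $2 / 8 ))
}

# Illustrative sizes: 16-bit (unquantized) vs 4-bit quantized weights.
while read -r params bits; do
  printf '%5dM params @ %2d-bit: ~%6d MB\n' "$params" "$bits" "$(weights_mb "$params" "$bits")"
done <<'EOF'
1000 16
1500 16
7000 16
7000 4
EOF

# Compare against what your NVIDIA GPU actually has, if nvidia-smi exists.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi --query-gpu=name,memory.total --format=csv,noheader
else
  echo "nvidia-smi not found; check your GPU's VRAM with your vendor's tools" >&2
fi
```

By this rule of thumb, a 7B-parameter model at 16-bit needs about 14,000 MB for weights alone, which is why 4-bit quantized models (about 3,500 MB by the same arithmetic) are popular on consumer GPUs.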

In short, cloud performance is going to be hard to beat for most. The trade-off is that self-hosting gives you total control over your data and the privacy of your code.

Wrapping up

Self-hosted GitHub Copilot alternatives like Tabby, FauxPilot, GPT4All, Continue, and LocalPilot are really good options for coding assistance without relying on cloud services, giving developers complete privacy and control over their data.

Each alternative discussed here offers advantages for different workflows and requirements. As with any solution, the best approach is to get these into your own lab and self-hosted environment and determine which works best for you. I like to get hands-on with any solution that I recommend or use in my environment. Let me know in the comments if you are self-hosting your own coding assistants, and maybe even one of the ones on this list.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.