If you have been following the news from Docker, you may have seen that they have introduced something called Docker Model Runner. I think this is a pretty big deal if you care about running AI locally in either a home lab or a corporate environment. Docker has been making a lot of strides along these lines and is building some really cool tools into Docker Desktop that can be used for AI purposes.

At a high level, Docker Model Runner (DMR) gives you a way to pull, run, and distribute large language models (LLMs) using the same Docker tools and workflows that you probably already know. Instead of learning an entirely new ecosystem or setting up complex Python environments, you can treat models like OCI artifacts and run them much like containers.

For home lab environments, I think this helps to lower the barrier to experimenting with AI in several ways. You can pull a model from Docker Hub or Hugging Face and be consuming an OpenAI-compatible API in just a couple of minutes. You can do this with a simple command, which is what popularized Docker in the first place. It also comes with hardware acceleration support, whether you are on Apple Silicon, have an NVIDIA GPU on Windows, or are running on ARM and Qualcomm devices.
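To make the "OpenAI-compatible API" part concrete, here is a minimal sketch of what a client call could look like using only the Python standard library. The base URL and model name are assumptions on my part, not something covered above: it assumes host-side TCP access to the runner is enabled on port 12434 and that a model called `ai/smollm2` has already been pulled (for example with `docker model pull`). Adjust both to match your own setup.

```python
import json
import urllib.request

# Assumptions (not from the article): Docker Model Runner is reachable from the
# host at this base URL, and a model with this name has already been pulled.
BASE_URL = "http://localhost:12434/engines/v1"
MODEL = "ai/smollm2"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=120):
    """Send the prompt and return the text of the first choice."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the runner up and the model pulled, calling `ask("Explain OCI artifacts in one sentence.")` should return the model's reply as a string. Because the request shape follows the OpenAI chat completions format, the same code should work against other OpenAI-compatible servers by changing only `BASE_URL` and `MODEL`.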

It means older gaming rigs or modern mini PCs with decent GPUs can quickly become useful for AI workloads. And let's face it, most of us have machines like these sitting around the home lab waiting for a role to fill. Since everything runs inside Docker, you are not adding bloat to your operating system with dependencies, and you can spin up and tear down models without breaking anything. On top of that, Docker Hub already has a curated catalog of models packaged as OCI artifacts, so you don't have to sort through repositories trying to figure out which ones are maintained and compatible.

I think this will be of value in the enterprise as well, for a couple of reasons. The first is security and compliance. DMR runs inside the existing Docker infrastructure, so you don't need a totally new tool; you just use Docker. It respects guardrails like Registry Access Management and fits into the enterprise policies that most organizations already have in place anyway. Treating models as OCI artifacts means you can store, scan, and distribute them through the same registries you already use.

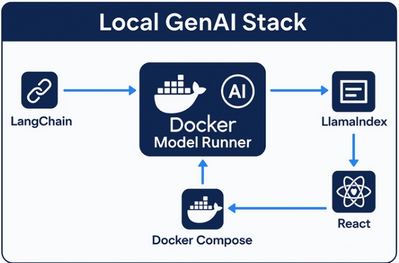

DMR also integrates with tools developers are already using, like Docker Compose, Testcontainers, and CI/CD pipelines. This allows models to be wired into existing workflows without coming up with something totally new. Debugging and observability are also baked in, with tracing and inspect features that make it easier to understand token usage and framework behavior. For enterprises tracking costs and performance, that visibility will be very helpful. Looking ahead, Docker has made it clear that support for additional inference engines like vLLM and MLX is coming, so teams are not locked into llama.cpp as the only option.
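As a rough illustration of what tracking token usage can look like on the client side, here is a small sketch that totals the `usage` block OpenAI-style responses normally carry. To be clear, this is not DMR's built-in tracing or inspect feature; it is just a hypothetical helper (`UsageTotals` is a name I made up) and it assumes the server actually returns `prompt_tokens` and `completion_tokens` in each response.

```python
from dataclasses import dataclass


@dataclass
class UsageTotals:
    """Running token totals across several chat-completion responses."""

    requests: int = 0
    prompt_tokens: int = 0
    completion_tokens: int = 0

    @property
    def total_tokens(self):
        return self.prompt_tokens + self.completion_tokens

    def add(self, response):
        """Fold one parsed JSON response (a dict) into the totals."""
        # Assumption: usage follows the OpenAI response shape. A missing
        # usage block is counted as zero tokens rather than raising.
        usage = response.get("usage") or {}
        self.requests += 1
        self.prompt_tokens += int(usage.get("prompt_tokens", 0))
        self.completion_tokens += int(usage.get("completion_tokens", 0))
        return self
```

You would call `totals.add(body)` on each parsed response and then read `totals.total_tokens` at the end of a test run or CI job to get a rough picture of how many tokens a workflow consumes.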

What is the big picture here? It is impossible to know the direction of AI and local AI models. However, we can see that Docker can be very helpful in solving many of the problems that AI models have today, things like packaging, distribution, and compatibility. This is what Docker is purpose-built for. A standardized approach using OCI formats could make local AI far more accessible because it fits into the workflows developers already know. For home labbers, it means low-friction experimentation, while for enterprises, I think it will mean a consistent way to let teams start building with AI.

I am curious whether anyone has already tested the Beta or GA release of Docker Model Runner. If you have used it, how does performance compare to running llama.cpp or Ollama directly?