I have totally shifted my home lab over to using mini PCs in the past couple of years. Full-blown enterprise servers, while cool, are simply not needed to self-host or do very cool things as they might have been a few years back. Modern mini PCs have very powerful specs with some even having 32 threads of processing power. You may have noticed though as well most mini PCs these days are touting NPUs or Neural Processing Units. Lots of marketing is around lots of “TOPs” of AI performance. But if you are building a home lab with tools like Ollama, LM Studio, or other AI and virtualization software, you may be wondering: do these NPUs actually matter? The short answer for now is not really, but let’s dig more into this very marketed spec for mini PCs.

So what is an NPU anyway?

I know I started hearing about an NPU just a couple of years ago mainly in the context of mini PCs and manufacturers marketing mini PCs as “AI powerhouses”, etc. So, what is this mysterious little processor? Well an NPU is a dedicated processor designed to accelerate machine learning and artificial intelligence processes.

So, you can think of it as a processor that is similar to a GPU but instead of being built to render graphics, it is tuned for AI operations. Think of things like:

- matrix multiplication

- inference

- low precision workloads (INT8)

Also, different chip manufacturers have their own branding for their NPUs. These are becoming standard now in modern processors. The pitch for these processors (NPUs) is simple. They can handle AI tasks more efficiently than just having a CPU or GPU while using less power.

In devices like laptops, NPUs can indeed help as they can handle things like real-time transcription, AI upscaling in video calls or local Copilot functions in something like Windows 11 without draining your battery. This is one of the reasons why vendors push them as the next big thing.

Do NPUs help with Ollama and LM Studio?

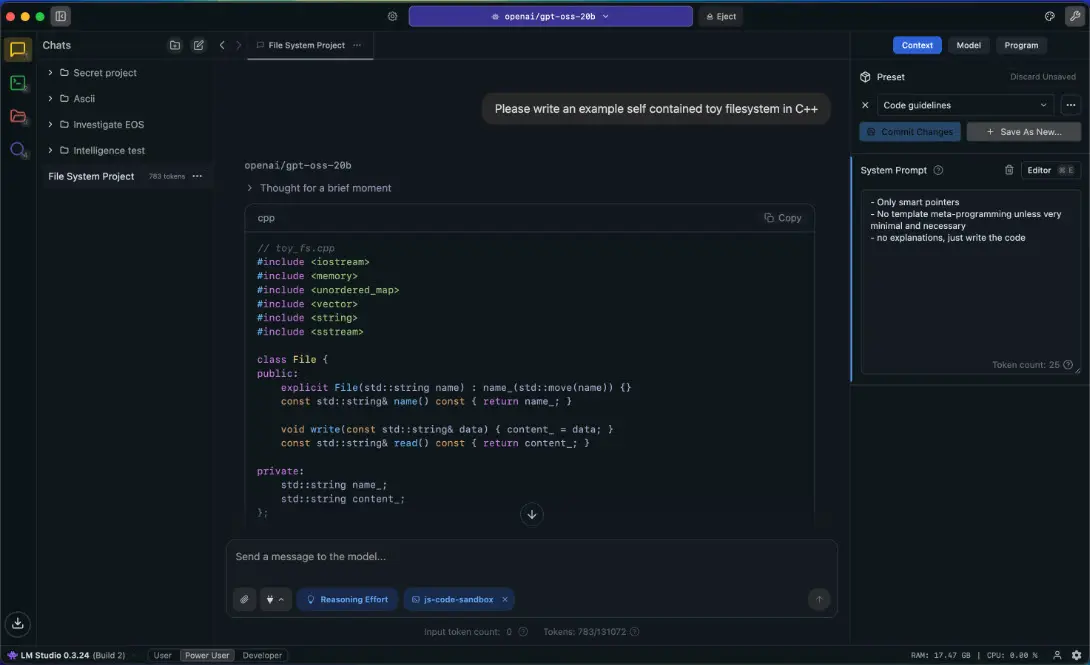

Most are not just wanting to run office AI assistants or webcam filters. Most are wanting to run things like Ollama with 13B or 70B models or use LM Studio to experiment with local inference with vibe coding for an example. These types of workloads are heavily reliant on CPU and GPU support, not NPU acceleration.

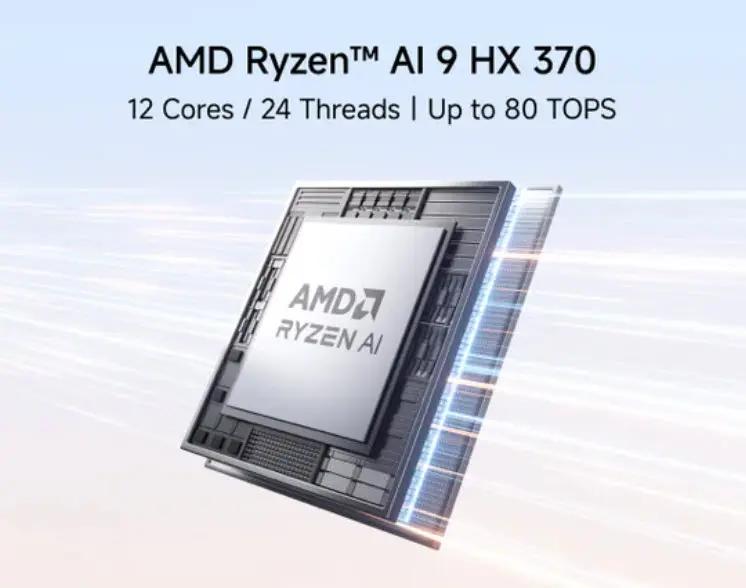

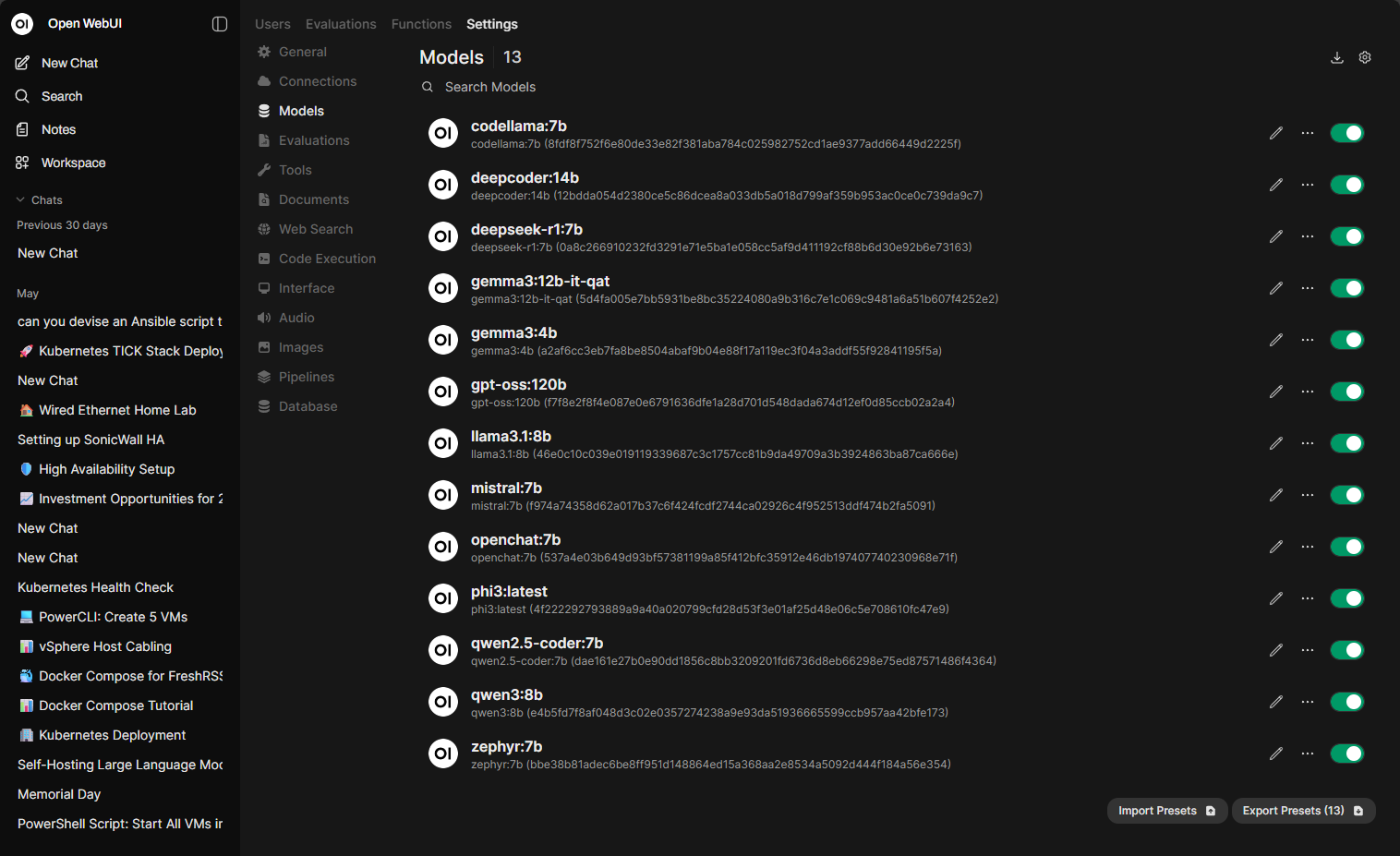

This is the question many home labbers have. If you buy a mini PC with an Intel Core Ultra CPU or an AMD Ryzen AI chip, will the NPU inside speed up your models in Ollama or LM Studio? Today, the answer is no at least if you are not on Apple Silicon. Ollama does use the Apple Neural Engine, and they have recently released an AMD AI chip version of LM Studio that is for Ryzen chips that is in “technology preview” status, see here: LM Studio on Ryzen AI. In general though, support is just not there. You can read the FOSS article on TPUs and NPUs with Ollama here: What is Ollama? Everything Important You Should Know.

Like many hardware devices, until the software (frameworks like PyTorch, TensorFlow, or ONNX Runtime) comes to support it, it is basically just hardware that can be used in marketing and not much else. As a result, you will probably be paying more for a chip with an NPU but see no difference in the performance of the AI stacks you actually use in a home lab running Ollama.

When will NPUs actually matter for home labs?

So will NPUs always be a worthless piece of hardware for home labs? No, I don’t think so. At some point, the major frameworks will work on ways to take advantage of them. Microsoft is currently pushing NPU support in Windows for AI-powered features.

ONNX Runtime is experimenting with NPU acceleration. Also, once PyTorch or TensorFlow begin to integrate NPU backends, this is when we will see third-party open-source projects like Ollama and then also LM Studio adopt them.

However, keep in mind, even when these do become utilized, they will likely only be powerful enough for lightweight inference or running some models with lower precision. Like most trends, the key is with adoption. The hardware is already here, but without the software software, it is just wasted silicon. For now at the present time, the home lab is just in a waiting period.

Should you pay more for an NPU-equipped mini PC?

Well, in some cases I say the answer may be yes. Especially if you are running Windows on a mini PC as your daily driver and then using it for light home lab tasks running in a type 2 hypervisor, it may come in handy and you will likely see benefits with things like Copilot.

However, in general, if you are looking for mini PC horsepower to run your home lab and self-hosted AI workloads, then NPUs aren’t worth much. The deciding factor in that case will still be CPU core count and GPU capability. These two hardware specs will make a direct impact on the performance of your self-hosted AI workloads running in Ollama or LM Studio which are the most common tools used for self-hosted AI.

One thing to note however is the fact that NPUs just come standard with many of the newest CPUs. CPUs like the Intel Core Ultra or AMD Ryzen 7000 AI series. So, in that case, you know you will be buying the NPU as part of the price of the CPU overall. That is ok. But, just don’t let that be the single reason you are paying extra for one of those specific processors.

What are better ways to boost AI performance in your home lab?

GPUs, GPUs, and more GPUs. Good or bad, the king of AI performance is a fast GPU. It is still the best way to accelerate your AI experience. Even an older NVIDIA RTX card will be much faster over CPU inference alone.

So, if you want to start experimenting with local AI, investing in a GPU with a good amount of VRAM is a better choise than relying on NPUs or buying NPUs just because they are marketed to speed up AI. Right now, probably the best consumer-grade card you can get is the 32GB DDR7-powered NVIDIA GeForce RTX 5090 card.

- https://geni.us/xvNnWm4 (affiliate link)

Learn how to self-host your own Ollama installation in Proxmox

I have done a couple of posts that can help with learning how to self-host your own Ollama installation in the home lab running in Proxmox. You can do this either with a dedicated virtual machine or LXC container with passthrough of your GPU enabled.

Take a look at these posts here:

- Run Ollama with NVIDIA GPU in Proxmox VMs and LXC containers

- How to Enable GPU Passthrough to LXC Containers in Proxmox

I also did a video that walks you through the process here:

Wrapping it

The bottom line is NPUs look good on paper and they are a great marketing spec that can be used to sell mini PCs. My honest hot take on this is that they are really not worth it, at least not yet. They can be mildly beneficial for very light AI tasks like Copilot in Windows. However, for most home labs that will be running Ollama or LM Studio, NPUs aren’t going to make much difference compared to a modern or even dated GPU like an older RTX series card. GPUs are still king in the home and production for AI workloads. What about you? Are you running self-hosted AI workloads that are benefiting from a GPU? Let me know what you are running in the comments.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.