Proxmox recently released the Proxmox VE 9 Beta, and it is shaping up to be a great release for what has arguably become one of the most popular hypervisors in the home lab, and one that is edging into the enterprise. The beta brings a wide range of new features and component updates across the ever-popular KVM-based virtualization platform. Let’s dig into the major new features in Proxmox VE 9 Beta and see why Proxmox 9 is one to watch.

What is new in Proxmox VE 9 Beta

Here is a quick list of the new features:

- Debian 13 “Trixie” base OS

- Linux kernel 6.14.8

- QEMU 10.0.2 with improved VM performance and live migration

- LXC 6.0.4 with cgroup v2 support and container isolation enhancements

- ZFS 2.3.3 with RAID-Z expansion and better snapshot handling

- Ceph 19.2.2 (Squid) support with GUI-based installation

- Shared LVM snapshot support (tech preview) for thick-provisioned volumes

- Network interface pinning for setting specific network names to physical NICs

- Software-defined networking (SDN) fabric improvements

- Transparent handling of renamed network interfaces during upgrades

- Dark theme now enabled by default in the web UI

- GlusterFS support removed

- Full removal of cgroup v1 (containers must use cgroup v2)

- NVIDIA GRID/vGPU support requires driver 570.158.02+

- Non-free firmware (microcode) repo enabled by default for CPU patching

- Updated installer with improved ZFS, TPM2 encryption, and boot device detection

- In-place upgrade support from Proxmox VE 8.x

Built on Debian 13 “Trixie” with Linux Kernel 6.14

Proxmox 9 Beta is built on the latest Debian 13 “Trixie” release and Linux kernel 6.14. The new kernel brings several major enhancements, including:

- Improved support for PCIe 5.0, NVMe, and newer CPU architectures

- cgroups v2 (now required)

- Expanded RDMA and networking stack for high-performance interfaces

Overall, this is a solid jump from the Debian 12 base and kernel 6.2 that Proxmox VE 8 launched with. See the Debian 13 release notes for details on the base OS changes.

QEMU 10.0.2 + LXC 6.0.4 gives you modern virtualization

With Proxmox 9, QEMU 10 brings more efficient VM execution, improved live migration, and better NUMA awareness. Key benefits of the updated version include:

- Faster VM launches and migration across clusters

- Improved confidential computing features (AMD SEV and Intel TDX)

- Smarter CPU feature exposure and pass-through

For containers, the LXC 6.0.4 version included in Proxmox 9 Beta brings some great benefits: enhanced resource isolation, cgroup v2 integration, and networking improvements that suit container-first environments and hybrid clusters.
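Since cgroup v2 comes up repeatedly in this release, here is a minimal sketch for checking which cgroup layout a host or container actually sees. It relies on the fact that a unified (v2) hierarchy exposes a `cgroup.controllers` file at the mount root. The function name and return labels are my own for illustration and are not part of any Proxmox tooling.

```python
from pathlib import Path


def cgroup_mode(cgroup_root: str = "/sys/fs/cgroup") -> str:
    """Classify the cgroup layout mounted at cgroup_root.

    A unified (v2) hierarchy exposes a cgroup.controllers file at the
    mount root; legacy (v1) and hybrid layouts do not.
    """
    root = Path(cgroup_root)
    if (root / "cgroup.controllers").is_file():
        return "v2"
    if root.is_dir():
        return "v1-or-hybrid"
    return "unknown"


if __name__ == "__main__":
    print(f"cgroup layout: {cgroup_mode()}")
```

Run it on the node or inside a container. Anything other than `v2` is worth a closer look before moving to Proxmox 9.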

Newer ZFS 2.3.3 and Ceph Squid 19.2.2 versions

As expected, other underlying technologies have also been upgraded in Proxmox 9 Beta. Storage is one of the areas where Proxmox really shines, and Proxmox 9 Beta doesn’t disappoint:

- ZFS 2.3.3 adds:

  - RAID-Z expansion!

  - Improved I/O management

  - Faster metadata processing

  - Smarter space estimation during snapshots

- Ceph 19.2.2 (Squid) is supported and is now installable directly from the Proxmox GUI, with the following benefits:

  - Better NVMe performance tuning

  - Better scalability and resource balancing

  - Ongoing GUI management improvements

The Proxmox 9 Beta release makes it easier to deploy hyperconverged clusters with high-availability VMs backed by distributed Ceph storage.
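Coming back to RAID-Z expansion for a moment, a bit of back-of-the-envelope arithmetic shows what adding one disk to an existing vdev buys you. This is a rough sketch only: it ignores padding, metadata overhead, and reserved space, and the disk counts and sizes are made-up example values.

```python
def raidz_usable_tb(disks: int, parity: int, disk_tb: float) -> float:
    """Approximate usable capacity of one RAID-Z vdev.

    Ignores padding, metadata overhead, and reserved slop space.
    """
    if parity not in (1, 2, 3):
        raise ValueError("RAID-Z parity level must be 1, 2, or 3")
    if disks <= parity:
        raise ValueError("a RAID-Z vdev needs more disks than parity devices")
    return (disks - parity) * disk_tb


# Example values (hypothetical): a 4-wide RAID-Z1 of 4 TB disks, grown to 5.
before = raidz_usable_tb(disks=4, parity=1, disk_tb=4.0)
after = raidz_usable_tb(disks=5, parity=1, disk_tb=4.0)
print(f"before: {before} TB, after: {after} TB, gained: {after - before} TB")
```

Keep in mind that blocks written before the expansion keep their old data-to-parity ratio, so the real gain on a mostly full pool is somewhat lower until that data is rewritten.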

Shared LVM snapshot support (Tech Preview)

This is the one I am most excited about for the GA release of Proxmox 9: shared LVM snapshot support on things like iSCSI and Fibre Channel-backed LUNs. Previously, if you were running these traditional storage technologies, snapshots were not possible unless you switched to a different storage type. That was a major bummer, especially for those coming from a VMware vSphere background, where iSCSI and Fibre Channel are the norm and you can take snapshots all day long.

With this tech preview as part of the Proxmox 9 Beta release, you can:

- Snapshot VMs that are stored on shared LUNs

- Chain volume snapshots for rollback and backup

- Keep legacy storage in play as you migrate to Proxmox and keep snapshot functionality

Also in tech preview is snapshot support for file-based storage, including Directory, NFS, and CIFS storage. It adds a volume-chain snapshot mechanism using QCOW2 files, which allows snapshots without traditional LVM or ZFS underneath.

When enabled:

- Snapshots are persisted as separate QCOW2 files

- A new writable QCOW2 is created and linked to the previous snapshot

- VM downtime during snapshot deletion is reduced

This will be great for users leveraging NAS shares or non-clustered setups using CIFS/NFS mounts.
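To make the volume-chain idea more concrete, here is a small conceptual model. It is not how Proxmox implements the feature; it is a toy sketch of the behavior described above, where each snapshot freezes the current file and a new writable layer is linked on top of it. The class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Layer:
    """One file in the chain: holds only blocks written while it was active."""
    name: str
    blocks: Dict[int, bytes] = field(default_factory=dict)


class VolumeChain:
    def __init__(self, base_name: str = "base") -> None:
        # layers[-1] is the writable top; earlier layers are read-only.
        self.layers: List[Layer] = [Layer(base_name)]

    def write(self, block: int, data: bytes) -> None:
        self.layers[-1].blocks[block] = data

    def read(self, block: int) -> Optional[bytes]:
        # Walk from newest to oldest until some layer holds the block.
        for layer in reversed(self.layers):
            if block in layer.blocks:
                return layer.blocks[block]
        return None

    def snapshot(self, name: str) -> None:
        # Freeze the current top under the snapshot name, then start a new
        # empty writable layer that is backed by it.
        self.layers[-1].name = name
        self.layers.append(Layer("current"))

    def delete_snapshot(self, name: str) -> None:
        # Merge the frozen layer into the next newer layer (newer data wins),
        # then drop the frozen layer from the chain.
        idx = next(i for i, l in enumerate(self.layers[:-1]) if l.name == name)
        frozen, newer = self.layers[idx], self.layers[idx + 1]
        newer.blocks = {**frozen.blocks, **newer.blocks}
        del self.layers[idx]


chain = VolumeChain()
chain.write(0, b"v1")
chain.snapshot("before-upgrade")
chain.write(0, b"v2")
print(chain.read(0), [layer.name for layer in chain.layers])
```

The point of the model is that a snapshot is cheap (a new empty layer), while deleting one means merging data between neighboring layers.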

SDN Enhancements and network interface pinning

Networking also gets a lot of great enhancements in Proxmox 9 Beta, including SDN improvements and a cool new feature called network interface pinning.

Software-Defined Networking (SDN) Fabric Support

The SDN feature set now supports fabric topologies. What is a “fabric” in Proxmox terms? According to their release notes, “Fabrics are routed networks of interconnected peers.”

This is useful for managing complex multi-node, multi-VLAN environments in home labs or datacenters. You can define custom topologies, segments, and bridge configurations for tenant segmentation. Another use case is test environments that need isolated networks, with flexible routing between zones.

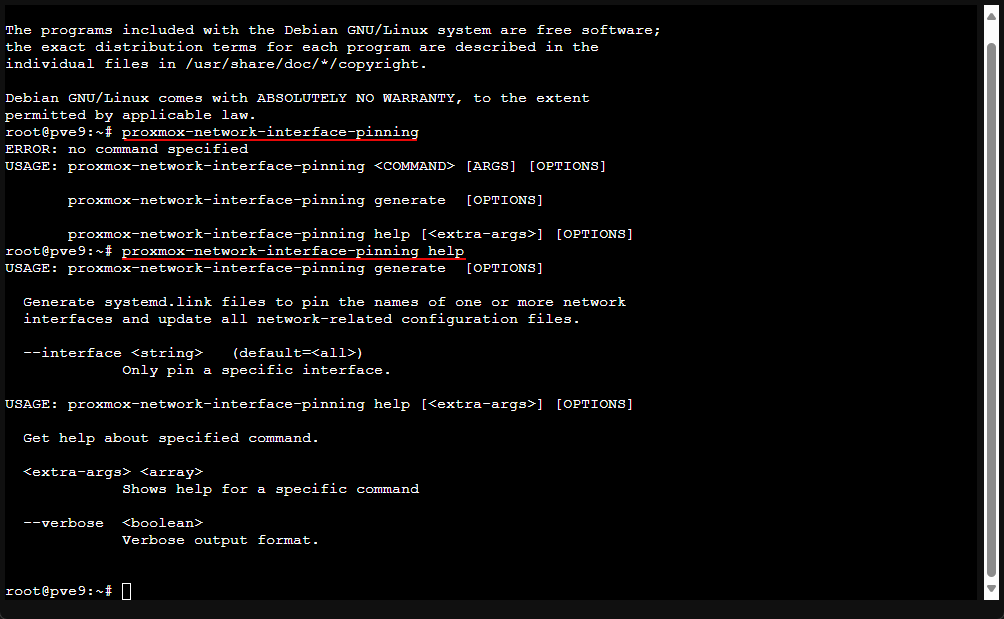

Network interface pinning for host NICs

New to 9.0 is the ability to pin a network name to a specific host NIC. This should come in handy on systems with multiple NICs, since it keeps your interface names consistent.

Below you can see me launching the tool with the command:

proxmox-network-interface-pinning

Network rename handling with the pinning tool

Proxmox now handles network interface renames more gracefully, even when the underlying Linux interface names change.

Use the new proxmox-network-interface-pinning CLI tool to:

- Pin MACs to stable names like nic0, nic1, etc.

- Automatically update firewall and bridge configs

- Prevent configs breaking on reboot or kernel upgrade

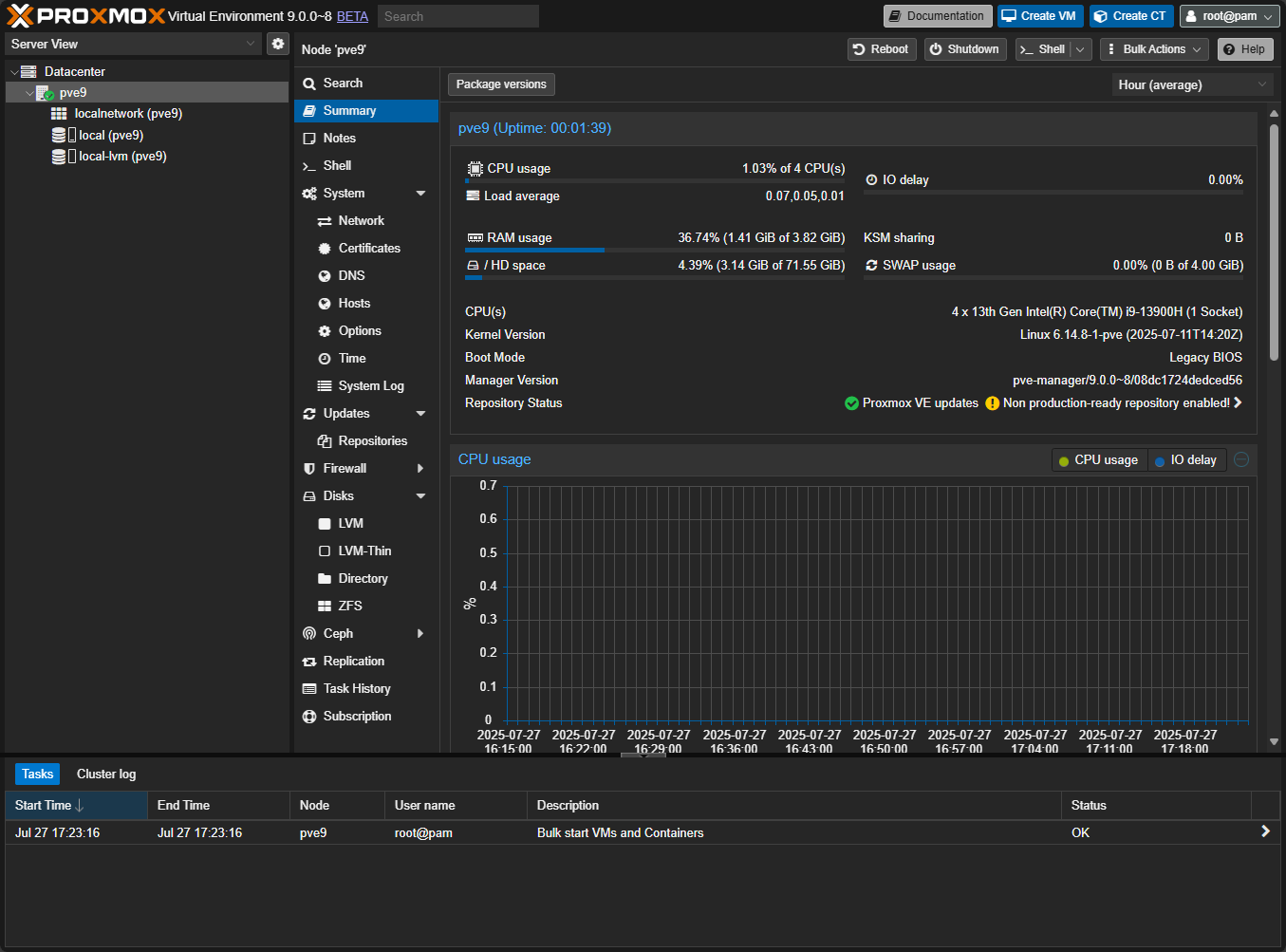
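If you are curious what pinning a MAC to a stable name looks like underneath, one standard Linux mechanism for this is a systemd `.link` file that matches on the MAC address and sets the interface name. The sketch below only renders such file contents as strings. Whether the Proxmox tool writes exactly this format is an assumption on my part, and the MAC addresses are made-up examples.

```python
from typing import Dict, List


def pin_names(macs: List[str], prefix: str = "nic") -> Dict[str, str]:
    """Assign nic0, nic1, ... to MAC addresses in the order given."""
    return {mac.lower(): f"{prefix}{i}" for i, mac in enumerate(macs)}


def link_unit(mac: str, name: str) -> str:
    """Render a systemd .link file body that names the NIC with this MAC."""
    return f"[Match]\nMACAddress={mac.lower()}\n\n[Link]\nName={name}\n"


# Hypothetical MAC addresses, for illustration only.
for mac, name in pin_names(["AA:BB:CC:00:00:01", "AA:BB:CC:00:00:02"]).items():
    print(f"# contents for a {name} .link file")
    print(link_unit(mac, name))
```

Because the name is tied to the MAC rather than the PCI slot or kernel enumeration order, it survives the kind of renames that kernel upgrades can cause.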

Dark mode is now the default

We all remember the Proxmox 7.x days, when the “bleeding eye” white theme was the only built-in choice. Great community projects on Git filled the gap and gave us a dark mode. Thankfully, an official dark theme arrived in version 7.4. Now in Proxmox 9, dark mode is the default option and you have to make a deliberate effort to enable light mode.

After a fresh installation with no option changes, dark mode is the default right out of the box.

GlusterFS support has been removed

This is not a surprise, since the GlusterFS open source project has gone stale. Development has slowed to a crawl, and I think most users are already looking for GlusterFS alternatives. Still, it is a heads-up for legacy storage users who may be running GlusterFS in their current Proxmox environment. Proxmox recommends that those users plan to migrate to something like Ceph storage. If Ceph is not an option, you can manually mount Gluster volumes using CLI tools and migrate to another storage technology.

Read this post on GitHub: Is the project still alive? · Issue #4298 · gluster/glusterfs.

Security upgrades and improvements

A few under-the-hood changes that matter for security and compatibility in this release include the following:

- cgroup v1 is gone – containers must support cgroup v2 (this means older OSes like CentOS 7 and Ubuntu 16.04 will break)

- NVIDIA vGPU users must use driver >= 570.158.02 (GRID 18.3) for kernel 6.14 compatibility

- The non-free firmware repo is enabled by default, so microcode updates (like CPU security patches) are applied automatically

If you’re using passthrough, PCI devices, or GPU acceleration, this update will go a long way to help future-proof your Proxmox setup.
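For the NVIDIA vGPU requirement above, a quick sketch of how you might compare an installed driver version against the 570.158.02 minimum. Comparing the dotted versions as plain strings gives wrong answers, so this parses them into integer tuples first. The function names are my own, and how you obtain the installed version string is up to you.

```python
from typing import Tuple

MIN_VGPU_DRIVER = "570.158.02"  # minimum stated for kernel 6.14 compatibility


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '570.158.02' into (570, 158, 2) for numeric comparison."""
    return tuple(int(part) for part in version.strip().split("."))


def driver_is_supported(installed: str, minimum: str = MIN_VGPU_DRIVER) -> bool:
    return parse_version(installed) >= parse_version(minimum)


for candidate in ("550.144.02", "570.158.02"):
    print(candidate, "ok" if driver_is_supported(candidate) else "too old")
```

On a host with the NVIDIA driver installed, `nvidia-smi --query-gpu=driver_version --format=csv,noheader` is one way to get the version string to feed in.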

Performance improvements with Proxmox Backup Server

Proxmox VE 9 brings big performance improvements when restoring from Proxmox Backup Server (PBS). Multiple data chunks can now be fetched in parallel per worker thread, and you can tune this behavior with environment variables based on your network and disk speed.

If you use PBS over a fast LAN or 10GbE setup, you’ll notice much faster restore times, especially when restoring large virtual machines.
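To illustrate why parallel chunk fetching helps, here is a generic sketch of the pattern: several fetches in flight at once, with the concurrency read from an environment variable. This is not PBS code. The variable name `EXAMPLE_FETCH_CONCURRENCY` and the `fetch` callback are hypothetical stand-ins for illustration.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def restore_chunks(
    chunk_ids: Iterable[str],
    fetch: Callable[[str], bytes],
    concurrency: int = 4,
) -> List[bytes]:
    """Fetch chunks with up to `concurrency` requests in flight and
    return them in the original order."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        # map() runs fetches concurrently but yields results in input order.
        return list(pool.map(fetch, chunk_ids))


def fake_fetch(chunk_id: str) -> bytes:
    # Stand-in for a network read from the backup server.
    return chunk_id.encode()


# EXAMPLE_FETCH_CONCURRENCY is a made-up variable name for this sketch.
concurrency = int(os.environ.get("EXAMPLE_FETCH_CONCURRENCY", "4"))
data = restore_chunks(["c1", "c2", "c3"], fake_fetch, concurrency)
print(len(data), "chunks restored")
```

On a fast link, the time spent waiting on each individual chunk overlaps instead of adding up, which is where the shorter restore times come from.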

Better VMware migration support for older ESXi versions

If you are migrating away from older vSphere setups, Proxmox VE 9 has a fix for the ESXi import tool so that it better lists and detects VMs from legacy ESXi builds. This improves compatibility and helps migrations from legacy VMware environments still running older versions of ESXi.

Installer updates and upgrading from 8.x to 9.x

The Proxmox VE 9 installer has been updated with:

- Support for ZFS root installs with RAID-Z2 and RAID-Z3

- LUKS encryption options with TPM2 integration

- Automatic detection of boot device and advanced storage layout customization

Also, Proxmox officially supports upgrading from Proxmox 8.x to 9.x. You can read through the official upgrade guide here: Upgrade from 8 to 9 – Proxmox VE.

Wrapping it up

Proxmox 9 is going to be a really great release of what has become the new favorite in home lab virtualization, and for enterprise customers looking for a fully featured hypervisor that can run production workloads. I am personally looking forward to snapshot support for shared LVM volumes attached over iSCSI and Fibre Channel. This removes a blocker I have seen for people who want to keep their existing storage technologies in play when migrating from VMware to Proxmox.

Let me know in the comments what you are looking forward to in this release. Do you plan to move to 9.x as soon as it reaches GA, or are you going for it with the Beta? (Probably not, but I want to see who is living dangerously.)

Download Proxmox 9 Beta from here:

You can grab the official ISO from here: Download Proxmox 9 Beta ISO


I’m very excited and happily received version 9 of Proxmox. I learned about Proxmox during my postgraduate degree in computer networking. Proxmox for high availability. Very good.

Alexadre,

That’s awesome that you are getting into using Proxmox. It is a great platform for most and is continuing to gain momentum.

Brandon