Docker Desktop is a great tool for running containers locally, and it gives you a polished interface for working with them along with many other features. While you may choose to run Docker containers on a dedicated Docker host, there are many containers you might prefer to run locally in Docker Desktop and spin up as needed as part of your daily workflow. Let’s look at the best Docker containers for Docker Desktop in 2025 and see which ones you might consider running.

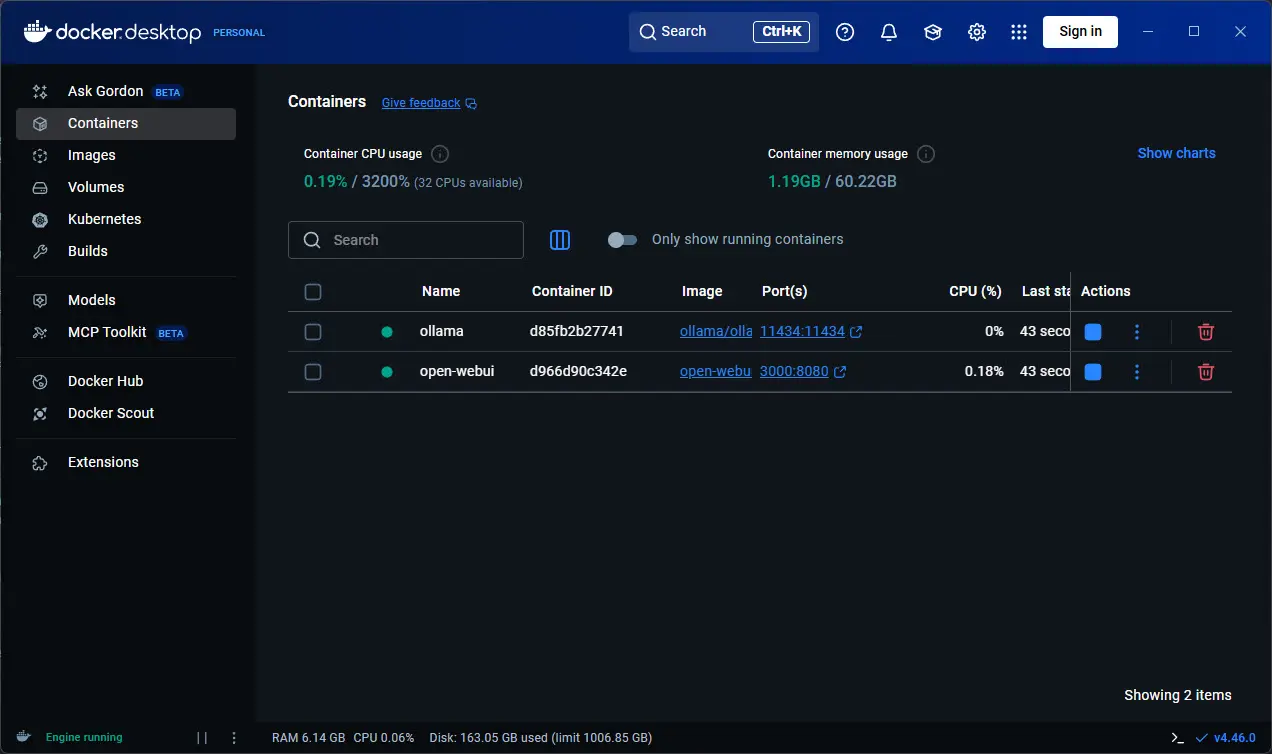

Docker Desktop is great for running local containers

With Docker Desktop, you don’t need a dedicated Linux Docker container host sitting on your network. All you need is the workstation you already use for your daily DevOps or other tasks. It runs on Windows, macOS, and Linux, so regardless of your platform, you can download and install it in a few minutes and start running containers locally.

It also provides a good dashboard interface with integrated Kubernetes support if you need it or want to experiment with learning Kubernetes. It updates automatically and is fairly beginner-friendly.

If you are a developer, you can use it as a sandbox to try out code, APIs, and databases. If you are excited about local AI models, it gives you a place to run them without relying on a remote GPU. Better still, containers can take advantage of the GPU in the workstation you are running it on (GPU support in Docker Desktop currently requires Windows with the WSL 2 backend and an NVIDIA GPU).

For sysadmins and homelabbers, it is a great place to test tools before deciding to host a container full-time on a dedicated Docker host. Or, as I do, you might use Docker Desktop to run containers that you only need while you are actively using your workstation.

Ollama

There is no container I can think of that is better suited to running inside Docker Desktop on your workstation than Ollama, especially if you want to use locally hosted AI. Ollama has exploded in popularity in 2025 as one of the easiest ways to run large language models (LLMs) locally, and it works well on both macOS and Windows. Running it in a Docker container lets you isolate and manage it without cluttering up your base system. With Ollama, you can pull down models like Gemma, Llama 3, Mistral, or Qwen and run them right on your workstation. The container image is published on Docker Hub.

```yaml
services:
  ollama:
    image: ollama/ollama:latest
    ports:
      - "11434:11434"            # Ollama REST API
    volumes:
      - ./ollama:/root/.ollama   # persist downloaded models between runs
```
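On Windows, Docker Desktop can pass an NVIDIA GPU through to containers when it uses the WSL 2 backend. The sketch below adds a Compose GPU reservation to the Ollama service so models can run on that GPU; it assumes an NVIDIA card with current drivers. Docker Desktop on macOS does not expose the Apple GPU to containers, so on a Mac, Ollama in a container runs on the CPU.

```yaml
services:
  ollama:
    image: ollama/ollama:latest
    ports:
      - "11434:11434"
    volumes:
      - ./ollama:/root/.ollama
    # Assumption: NVIDIA GPU on Windows with the WSL 2 backend.
    # Reserve all available GPUs for this container.
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]
```

Once the container is up, `docker compose exec ollama ollama pull llama3` downloads a model into the mounted `./ollama` folder.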

OpenWebUI

When you run something like Ollama, OpenWebUI provides a browser-based interface that looks like ChatGPT. Running OpenWebUI in a Docker container is simple and gives you a polished experience, with features we have grown used to, like chat history, prompt management, and multi-model support.

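```yaml
services:
  openwebui:
    image: ghcr.io/open-webui/open-webui:main
    ports:
      - "3000:8080"                       # browse to http://localhost:3000
    volumes:
      - ./openwebui:/app/backend/data     # chat history and settings
    depends_on:
      - ollama                            # expects an "ollama" service in the same Compose file
```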
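`depends_on` only refers to services defined in the same Compose project, so the OpenWebUI snippet assumes the Ollama service sits in the same file. Below is a minimal sketch that combines both. The `OLLAMA_BASE_URL` environment variable tells OpenWebUI where to find Ollama; here it uses the Compose service name `ollama`, which resolves on the project's default network.

```yaml
services:
  ollama:
    image: ollama/ollama:latest
    ports:
      - "11434:11434"
    volumes:
      - ./ollama:/root/.ollama

  openwebui:
    image: ghcr.io/open-webui/open-webui:main
    ports:
      - "3000:8080"
    volumes:
      - ./openwebui:/app/backend/data
    environment:
      # Reach Ollama by its service name on the Compose network
      - OLLAMA_BASE_URL=http://ollama:11434
    depends_on:
      - ollama
```

Run `docker compose up -d` in the folder containing this file, then browse to http://localhost:3000.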

MCP servers

If you haven’t heard of Model Context Protocol (MCP) servers, they are one of the newest developments in the AI tooling space. MCP servers act as bridges between local resources and AI assistants, letting you securely expose databases, APIs, and files to models without giving them direct, unfettered access. Docker Desktop is a great way to run MCP servers because they are lightweight services that can be launched and managed in isolation, locally on your workstation.

Here are a few popular and practical MCP servers you can try locally:

Follow each project’s directions to spin it up in your Docker Desktop environment. In general, though, the Docker Compose code will look something like the following.

```yaml
services:
  mcp-filesystem:
    image: ghcr.io/modelcontextprotocol/filesystem:latest
    volumes:
      - ./workspace:/workspace   # only this folder is exposed to the MCP server
    ports:
      - "4000:4000"
```

Dozzle

Once you have many Docker containers running locally, Dozzle is a great tool for keeping an eye on all of their logs. It is a lightweight log viewer for Docker that lets you follow your container logs in real time from a web-based UI. I think it is even easier than viewing logs in Docker Desktop, and you can do it from any browser.

```yaml
services:
  dozzle:
    image: amir20/dozzle:latest
    container_name: dozzle
    ports:
      - "8080:8080"                                   # web UI at http://localhost:8080
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock     # lets Dozzle read container logs
    restart: always
```

Netdata

If you are looking for a great way to monitor the Docker containers you run locally, as well as your Docker Desktop host, you can use Netdata. I really like Netdata because you can run it locally without the cloud dashboard. It shows your CPU, memory, disk, and network metrics in real time. On macOS and Windows, the host-level metrics come from Docker Desktop’s Linux VM rather than from the workstation itself.

The container is available on Docker Hub.

```yaml
services:
  netdata:
    image: netdata/netdata:latest
    ports:
      - "19999:19999"          # dashboard at http://localhost:19999
    cap_add:
      - SYS_PTRACE
    security_opt:
      - apparmor:unconfined
    volumes:
      # Named volumes keep Netdata's config, database, and cache
      - netdataconfig:/etc/netdata
      - netdatalib:/var/lib/netdata
      - netdatacache:/var/cache/netdata
      # Read-only host paths used to collect system metrics
      - /etc/passwd:/host/etc/passwd:ro
      - /etc/group:/host/etc/group:ro
      - /proc:/host/proc:ro
      - /sys:/host/sys:ro
      - /etc/os-release:/host/etc/os-release:ro

volumes:
  netdataconfig:
  netdatalib:
  netdatacache:
```

Watchtower

Keeping your containers updated manually can be a pain. You have to pull the latest images and then stop and recreate your containers with the same configuration to use the new image. Watchtower runs as a container itself, monitors your other containers, and updates them automatically when new versions of their images are pushed to the registry.

This is one that I have written about before. You can check out my other post here: Watchtower Docker Compose – Auto Update Docker containers.

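```yaml
services:
  watchtower:
    image: containrrr/watchtower
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock   # needed to pull images and recreate containers
    command: --interval 3600                        # check for new images every hour (seconds)
```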
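By default, Watchtower watches every container on the host. If you would rather auto-update only specific containers, its `--label-enable` flag restricts it to containers carrying the `com.centurylinklabs.watchtower.enable=true` label, and `--cleanup` removes the old image after an update. Here is a sketch that uses Dozzle from earlier as the opted-in container; which containers you label is up to you.

```yaml
services:
  watchtower:
    image: containrrr/watchtower
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    # Only update labelled containers, check hourly, remove superseded images
    command: --label-enable --cleanup --interval 3600

  dozzle:
    image: amir20/dozzle:latest
    ports:
      - "8080:8080"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    labels:
      # Opt this container in to Watchtower updates
      - "com.centurylinklabs.watchtower.enable=true"
```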

Code-server

Another very cool container you can run in Docker Desktop is code-server. It makes a Visual Studio Code environment available from a browser. By running it in Docker Desktop, you can spin up a full development environment isolated from your host machine, and you can access it from other machines on your network as well.

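```yaml
services:
  code-server:
    image: codercom/code-server:latest
    ports:
      - "8443:8080"          # the codercom image listens on 8080 inside the container
    volumes:
      - ./code-server:/home/coder/project
    environment:
      - PASSWORD=changeme    # change this before exposing it to your network
```

Whoogle and SearxNG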

If you want to run a search engine locally for privacy, both Whoogle and SearxNG are excellent open source options. Whoogle returns Google results, while SearxNG is a metasearch engine that queries many providers at once, and both do so without ads, tracking, or profiling.

```yaml
services:
  whoogle:
    image: benbusby/whoogle-search:latest
    ports:
      - "5000:5000"

  searxng:
    image: searxng/searxng:latest
    ports:
      - "8081:8080"          # SearxNG listens on 8080 inside the container
```
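SearxNG reads its configuration from `/etc/searxng` inside the container, so mounting a local folder there keeps your settings (such as `settings.yml`) across container rebuilds. The `SEARXNG_BASE_URL` variable sets the URL SearxNG uses for its own links; the value below is an assumption that you reach it locally on the host port mapped above.

```yaml
services:
  searxng:
    image: searxng/searxng:latest
    ports:
      - "8081:8080"
    volumes:
      # Persist settings.yml and other config on the host
      - ./searxng:/etc/searxng
    environment:
      # Assumption: accessed locally on host port 8081
      - SEARXNG_BASE_URL=http://localhost:8081/
```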

JupyterLab

JupyterLab is one of the most versatile tools you can run in Docker Desktop, especially if you work with Python, data science, or machine learning. It provides an interactive, browser-based environment where you can write and run notebooks, visualize data, and test models. Running JupyterLab in a container ensures your development environment is clean and reproducible, with no need to install dependencies directly on your host machine.

```yaml
services:
  jupyterlab:
    image: jupyter/base-notebook:latest
    ports:
      - "8888:8888"
    volumes:
      - ./notebooks:/home/jovyan/work   # notebooks saved here persist on the host
```
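By default, JupyterLab generates a random access token on each start, which you then copy from the container logs. For a local-only setup, you can set a fixed token with the `JUPYTER_TOKEN` environment variable, which Jupyter Server reads at startup. The token value below is a placeholder.

```yaml
services:
  jupyterlab:
    image: jupyter/base-notebook:latest
    ports:
      - "8888:8888"
    volumes:
      - ./notebooks:/home/jovyan/work
    environment:
      # Placeholder token; open http://localhost:8888/?token=<your token>
      - JUPYTER_TOKEN=change-me
```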

LocalAI

As an alternative to Ollama, LocalAI is another project that makes it easy to run open source AI models locally using Docker. It provides API compatibility with OpenAI, meaning you can point applications that expect the OpenAI API to your local LocalAI container instead.

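```yaml
services:
  localai:
    image: quay.io/go-skynet/local-ai:latest
    ports:
      - "8082:8080"                 # OpenAI-compatible API at http://localhost:8082/v1
    volumes:
      - ./localai:/data
    environment:
      - MODELS_PATH=/data/models    # where LocalAI looks for model files
```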
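Because LocalAI speaks the OpenAI API, OpenWebUI can use it as an OpenAI-style backend. The sketch below assumes both services live in the same Compose file. `OPENAI_API_BASE_URL` points OpenWebUI at LocalAI’s `/v1` endpoint on the Compose network (container port 8080, not host port 8082), and `OPENAI_API_KEY` is a placeholder, since LocalAI does not check keys unless you configure one.

```yaml
services:
  localai:
    image: quay.io/go-skynet/local-ai:latest
    ports:
      - "8082:8080"
    volumes:
      - ./localai:/data
    environment:
      - MODELS_PATH=/data/models

  openwebui:
    image: ghcr.io/open-webui/open-webui:main
    ports:
      - "3000:8080"
    volumes:
      - ./openwebui:/app/backend/data
    environment:
      # Talk to LocalAI over the Compose network (container port 8080)
      - OPENAI_API_BASE_URL=http://localai:8080/v1
      # Placeholder; LocalAI does not check keys unless configured to
      - OPENAI_API_KEY=not-needed
    depends_on:
      - localai
```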

Your daily containers may vary

Keep in mind these are only suggestions. There are many other great containers that can enhance your workflows, depending on what you need to accomplish and which tools you find helpful. Running daily containers in Docker Desktop is a great way to make your workflows faster and more private, and to keep useful tools at your fingertips.

Wrapping up

Docker Desktop is a great tool for developers, operations teams, and home labs alike. By running local containers like Ollama, OpenWebUI, MCP servers, Dozzle, Netdata, Watchtower, Code-server, Whoogle, SearxNG, JupyterLab, and LocalAI, you get access to some of the most useful tools available. The great thing is that you can spin up many different solutions, use them while you need them, and tear them down without cluttering your host with installed components and files. What are your go-to tools for the home lab, the development lifecycle, or daily productivity?

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.