Once you start running Docker containers in your home lab or in production, you will quickly run into Docker Compose. It is the go-to tool for working with Docker containers, especially “stacks” made up of more than one container. There are some tricks that make Docker Compose more powerful, cleaner, and more reliable. Let’s look at the tips I wish I had known from the start.

Use a project directory structure for your Docker compose projects

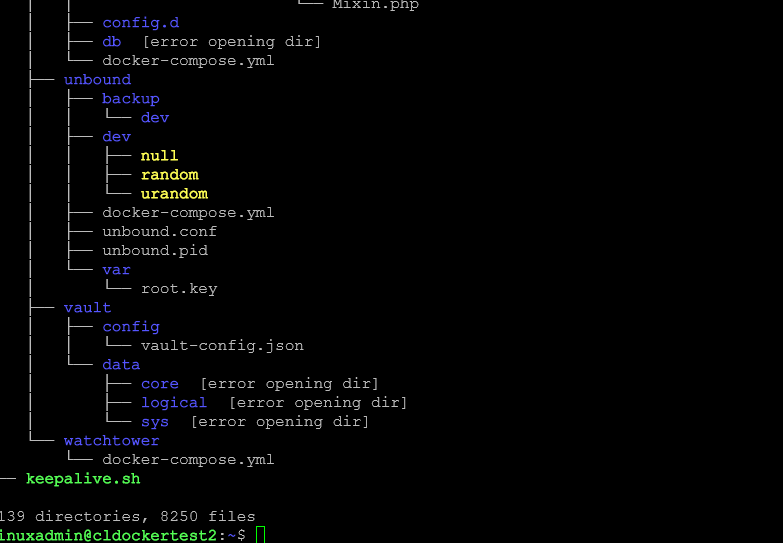

One thing I learned fairly early on is to organize Docker Compose code in a separate folder for each project on my Docker host running in Proxmox. When starting out, most people just dump all of their container configs into the same folder. That leads to confusion and things breaking. The fix is a parent folder with one subfolder per project underneath it.

I generally have something like a /home/linuxadmin/homelabservices folder and keep each project I am working on in its own subfolder. This keeps projects straight, and I have found it keeps me sane when comparing Docker Compose code between projects.

Example folder structure:

    /home/linuxadmin/docker/
    ├── portainer/
    │   ├── docker-compose.yml
    │   └── .env
    ├── netdata/
    │   ├── docker-compose.yml
    │   └── .env
    └── freshrss/
        ├── docker-compose.yml
        └── .env

With this layout, you just cd into your project directory and run the expected:

    docker compose up -d

Everything stays self-contained and easy to work with.

Keep configuration out of the Compose file

Never hardcode passwords, ports, and similar values into your Docker Compose code. It might be easier up front, but it will cause you pain in the long run. Putting passwords in your Compose file is also a security risk.

It is better to use an .env file in the same directory, which Compose reads automatically when it substitutes variables. Store sensitive data in the .env file and make sure it is listed in your .dockerignore and .gitignore files.

Example .env:

    MYSQL_ROOT_PASSWORD=supersecurepassword
    MYSQL_DATABASE=appdb
    MYSQL_USER=appuser
    MYSQL_PASSWORD=anothersecurepassword

Example Compose file using the variables:

    services:
      mysql:
        image: mysql:8
        environment:
          MYSQL_ROOT_PASSWORD: ${MYSQL_ROOT_PASSWORD}
          MYSQL_DATABASE: ${MYSQL_DATABASE}
          MYSQL_USER: ${MYSQL_USER}
          MYSQL_PASSWORD: ${MYSQL_PASSWORD}

Doing things this way keeps sensitive values outside of version control and makes it easy to update credentials without editing the Compose file itself.
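Compose variable substitution also supports default values and required-value checks, which helps when you move ports and passwords out of the file. The snippet below is only a sketch: MYSQL_PORT is a hypothetical variable that is not part of the .env example above.

```yaml
services:
  mysql:
    image: mysql:8
    ports:
      # Falls back to 3306 when MYSQL_PORT (hypothetical variable) is unset or empty
      - "${MYSQL_PORT:-3306}:3306"
    environment:
      # Compose stops with this message if the variable is unset or empty
      MYSQL_ROOT_PASSWORD: "${MYSQL_ROOT_PASSWORD:?set MYSQL_ROOT_PASSWORD in .env}"
```

Running docker compose config in the project directory prints the file with all variables resolved, which is a quick way to confirm the values are picked up as expected.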

Use docker labels for organization and automation

You may be used to using labels with reverse proxies like Traefik or to add metadata (data about data) in things like Portainer.

Below is an example of using labels for Traefik:

    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.freshrss.rule=Host(`rss.example.com`)"
      - "traefik.http.services.freshrss.loadbalancer.server.port=80"

However, you can also use labels for simple organization. Pick your own key names here: Compose already sets com.docker.compose.project on every container it creates, and the com.docker.compose prefix is reserved for its own labels. Note the following:

    labels:
      - "project=freshrss"
      - "environment=production"

With consistent labeling, you can search and filter containers easily with:

    docker ps --filter "label=environment=production"

Use health checks

Health checks are well worth learning for your Docker Compose code. They make sure Docker only reports a container as healthy once it passes the checks you define.

Here is a simple example health check:

    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8080"]
      interval: 30s
      timeout: 5s
      retries: 3

This is really useful when you have services like databases or APIs that other containers rely on, and you want to make sure they are healthy before dependent services start. Note that the test runs inside the container, so the image must include curl for this example to work.
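To make a dependent service actually wait for a healthy container, a health check can be paired with the long form of depends_on. The following is a minimal sketch, assuming mysqladmin is available inside the mysql image; myapp:latest is a placeholder image name.

```yaml
services:
  db:
    image: mysql:8
    environment:
      MYSQL_ROOT_PASSWORD: ${MYSQL_ROOT_PASSWORD}
    healthcheck:
      # Assumes mysqladmin exists in the image; ping succeeds once the server answers
      test: ["CMD", "mysqladmin", "ping", "-h", "localhost"]
      interval: 10s
      timeout: 5s
      retries: 5

  app:
    image: myapp:latest   # placeholder image
    depends_on:
      db:
        # Compose starts app only after db reports healthy
        condition: service_healthy
```

Without the condition, depends_on only controls start order and does not wait for the database to be ready.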

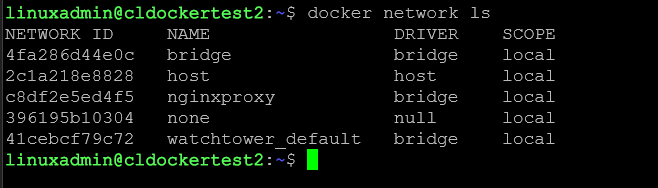

Use Docker networks for isolation

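Unlike docker run from the command line, which attaches containers to the default bridge network, Docker Compose creates one default network per project and places every service in that project on it. This works, but especially when you get into reverse proxies and segmenting your traffic, you will likely want tighter isolation.

Here is an example of different networks for different containers, as in a classic three-tier application. Containers can only reach other containers on a network they share, so an application tier that talks to both web and db would list both networks: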

    networks:
      frontend:
      backend:

    services:
      web:
        image: nginx
        networks:
          - frontend
      db:
        image: mysql
        networks:
          - backend

You can also use the Docker macvlan driver to put containers directly on your LAN with their own IP addresses. To do that, you can use something like the below in your Docker Compose code.

    networks:
      lan:
        driver: macvlan
        driver_opts:
          parent: eth0
        ipam:
          config:
            - subnet: "192.168.1.0/24"
              gateway: "192.168.1.1"

Use compose profiles

Have you heard about Docker Compose profiles? They let you enable or disable parts of a Docker Compose stack without editing the file. This works great if you have different “environments” defined in the same Docker Compose code.

Here is an example:

    services:
      app:
        image: myapp:latest
      db:
        image: mysql
        profiles: ["dev"]

Then, you run your Docker compose command with the profile parameter:

    docker compose --profile dev up -d

The db service in this example only runs when you include that profile.
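A service can belong to more than one profile, and services with no profiles key always start. The sketch below extends the example with a hypothetical debug profile and uses the adminer image purely as an illustration of an optional tool.

```yaml
services:
  app:
    image: myapp:latest          # no profiles key: always started

  db:
    image: mysql
    profiles: ["dev"]            # started only with the dev profile

  adminer:
    image: adminer               # illustrative optional tool
    profiles: ["dev", "debug"]   # started when either profile is active
```

With this file, docker compose --profile debug up -d would start app and adminer but not db. Profiles can also be selected through the COMPOSE_PROFILES environment variable instead of the command-line flag.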

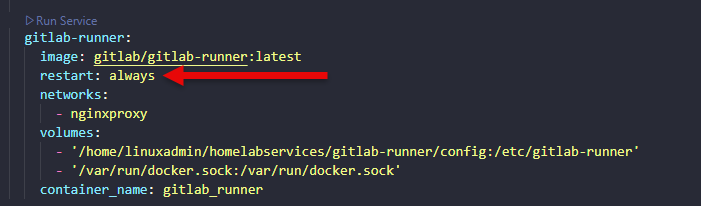

Always use restart policies

If you don’t set a restart policy, your containers won’t automatically restart after a reboot or crash. Setting the policy to always or unless-stopped controls how they respond to restart events. Note the following common choices in Docker Compose:

    restart: unless-stopped

or

    restart: always

I usually use unless-stopped so a container I manually stop doesn’t get restarted after a reboot.
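For context, the restart key sits at the service level, next to image. The sketch below shows it in place along with two other policies Compose accepts; the migrate and oneshot services and the ./migrate.sh command are hypothetical.

```yaml
services:
  app:
    image: myapp:latest
    restart: unless-stopped      # restart unless I stopped it myself

  migrate:
    image: myapp:latest
    command: ["./migrate.sh"]    # hypothetical one-off task
    restart: on-failure:3        # retry up to 3 times on a non-zero exit

  oneshot:
    image: myapp:latest
    restart: "no"                # the default; quoted so YAML does not read it as a boolean
```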

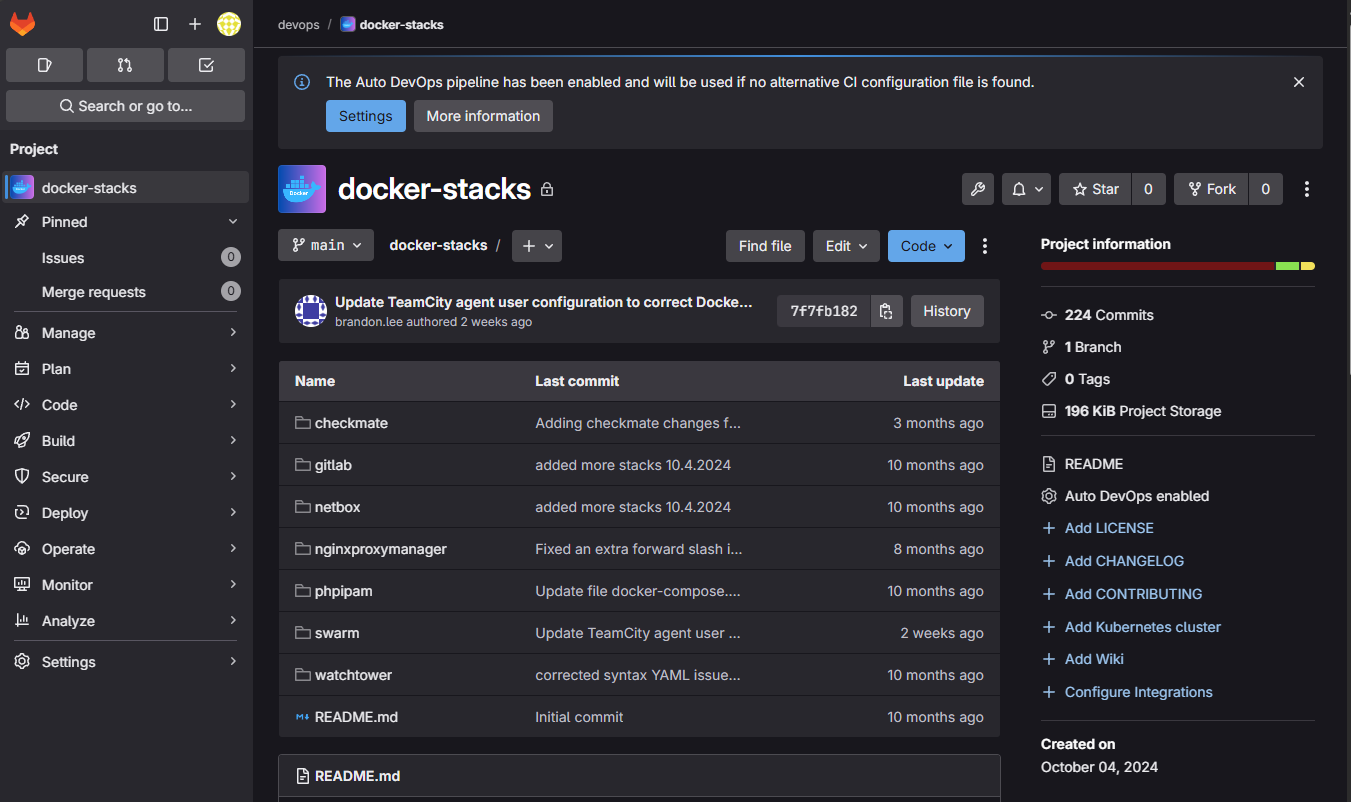

Version control your compose files

There are many great reasons for doing this. Have you ever changed something, like I did before I started putting my compose files in Git, and then not understood what broke, when it broke, or how to fix it? By placing your files in Git, you can track your changes and, even more importantly, roll back if you need to get things up and working again.

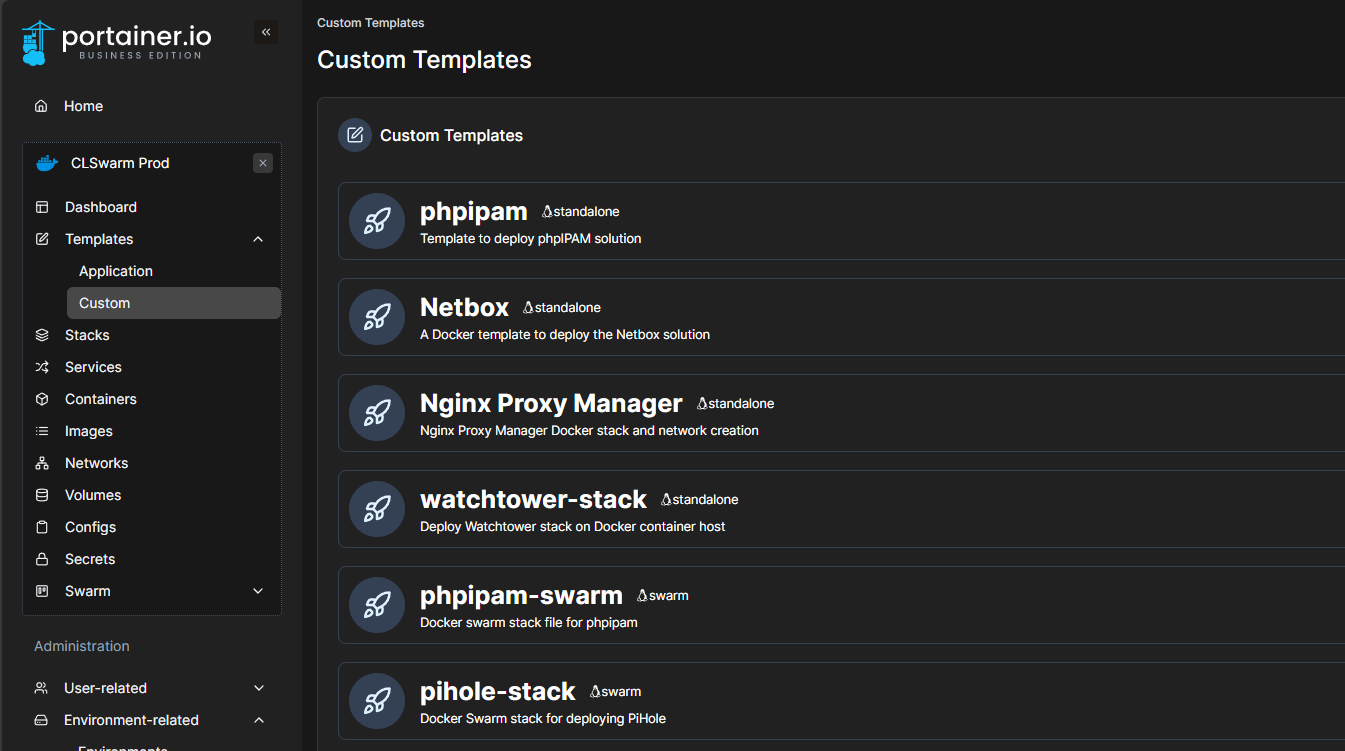

I self-host a GitLab instance in my home lab that works great, as it also includes a container registry. However, you can use Gitea, which is gaining momentum. You can also use Portainer to version your stack code.

I like the Templates feature in Portainer as well, as it lets you define a template of your services so you can quickly and easily spin these services and apps up whenever you need to.

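Quick git tutorial:

    git init
    echo ".env" >> .gitignore
    git add docker-compose.yml .gitignore
    git commit -m "Initial commit"

The .env file stays out of the repo because it holds your secrets. For private projects, push to a private GitHub or Gitea repo.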

Use external volumes for shared data

Sometimes you need multiple Compose projects to share the same data, such as logs or database files. Generally this is not a good idea unless the app understands how to share data without corrupting it, like a database cluster. When you do need it, define the volume as external so it’s not tied to one Compose project. This is similar to defining an external network for multiple containers.

Example:

    volumes:
      shared-data:
        external: true

    services:
      app:
        image: myapp
        volumes:
          - shared-data:/data

Create the volume once:

    docker volume create shared-data

Now any project can mount it.
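As a sketch of what a second project might look like, the file below mounts the same volume read-only so only one project writes to it. The reporter service and the myreporter image are hypothetical names used for illustration.

```yaml
# docker-compose.yml in a second, separate project directory
volumes:
  shared-data:
    external: true        # reuse the volume created with docker volume create

services:
  reporter:
    image: myreporter     # placeholder image
    volumes:
      # :ro mounts the shared volume read-only in this container
      - shared-data:/data:ro
```

Because the volume is external, docker compose down -v in either project leaves it in place.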

Organize multi-file configurations with overrides

Compose lets you use multiple YAML files so you can keep production and development differences separate and override settings as needed with a special override file. The first file, docker-compose.yml, contains your base config, the default settings you want in every environment. The docker-compose.override.yml file contains only the changes you want to apply on top of the base file.

This is a layered approach: you put down your base config and then add your override YAML as another layer on top. Single-value settings such as image are replaced, while list settings such as ports are appended to the existing ones, so in the example below the container ends up publishing both 80 and 8080.

Example of both files:

docker-compose.yml

    services:
      app:
        image: myapp:latest
        ports:
          - "80:80"

docker-compose.override.yml

    services:
      app:
        image: myapp:dev
        ports:
          - "8080:80"
        volumes:
          - ./src:/app/src

Run with:

    docker compose -f docker-compose.yml -f docker-compose.override.yml up -d

Compose also loads docker-compose.override.yml automatically when it sits next to docker-compose.yml, so a plain docker compose up -d does the same thing. This makes it easy to swap out images, ports, or volumes without touching the base configuration. I will also say it can make things a bit more convoluted to troubleshoot or trace down.
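The same layering works for other environments by giving the extra file its own name. The sketch below assumes a hypothetical docker-compose.prod.yml and a made-up pinned tag, myapp:1.4.2.

```yaml
# docker-compose.prod.yml (hypothetical file name)
services:
  app:
    image: myapp:1.4.2          # hypothetical pinned tag instead of latest
    restart: unless-stopped     # added on top of the base definition
```

It would be applied with docker compose -f docker-compose.yml -f docker-compose.prod.yml up -d. When -f is given explicitly, only the listed files are used, so the development override is not loaded. Replacing up -d with config prints the merged result, which helps when tracing where a setting came from.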

Keep images up to date automatically

For home labs and small deployments, use Watchtower or Shepherd to automatically update containers when new images are pushed.

Watchtower example Compose service:

    services:
      watchtower:
        image: containrrr/watchtower
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
        command: --cleanup --interval 3600

Shepherd works great in Docker Swarm environments and adds more granular scheduling.
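Back on Watchtower, if you would rather not auto-update every container on the host, it can be limited to containers that opt in through a label. This is a sketch of that setup; the freshrss service is included only as an example of a container being opted in.

```yaml
services:
  watchtower:
    image: containrrr/watchtower
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    # --label-enable restricts updates to containers carrying the enable label
    command: --cleanup --interval 3600 --label-enable

  freshrss:
    image: freshrss/freshrss
    labels:
      # Opt this container in to Watchtower updates
      - "com.centurylinklabs.watchtower.enable=true"
```

Containers without the label, such as a database you want to upgrade by hand, are left alone.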

Wrapping up

Docker Compose is more than just a simple orchestration tool. By using tricks like .env variables, labels, healthchecks, profiles, and proper project organization, you can make your containers more reliable, secure, and maintainable. The earlier you adopt these habits, the less time you’ll spend debugging and reorganizing later.

If you’re running more than a handful of containers, take the time to restructure your Compose files, set restart policies, and implement healthchecks. You’ll thank yourself the next time you restart your host or recover from a failure.


When a docker-compose.override.yml is present, docker compose will use it automatically, so ‘docker compose up -d’ is equivalent to ‘docker compose -f docker-compose.yml -f docker-compose.override.yml up -d’

Fabrice,

Nice point! Also, curious if you use the docker-compose.override.yml in some of your projects. Curious how many are using these various aspects of compose.

Brandon

Nice article! But why do you use version in the compose-file? It is obsolete.

pven,

Old habits die hard! 🙂 Thanks for the call out there.

Brandon