Running Docker containers is a game changer for your home lab and for production environments where they make sense. If you want an easy way to spin up new environments and host apps and services, containers have many benefits, and learning Docker and containers in general is a great skill to have. However, there are some gotchas you will want to avoid. Let’s take a look at 10 Docker container mistakes and how to do things the right way.

1. Using the “latest” tag in production

Beginners make many mistakes, but one that is seen pretty often is using the latest tag when pulling images. Using the latest release should be good, right? Contrary to what you might think, latest is no guarantee that you are running the latest stable version. It is simply the default tag Docker uses when you don’t specify one, and it points to whatever image the publisher last pushed under that name. That can change without warning.

If you rely on the latest tag, a rebuild or a redeploy that pulls the image again might bring in a new version that breaks your app!

What to do instead

It is better to pin your container images to a specific version, like:

    image: nginx:1.27.1

or

    image: nginx@sha256:abcd1234...

This way, you can update deliberately after testing a newer version in your dev or test environment.

2. Running containers as root

By default, most containers run as the root user inside the container unless the image specifies otherwise. While this doesn’t give direct root access to your host, it increases your risk if an attacker escapes the container through a vulnerability or misconfiguration.

Better approach:

- Run containers as a non-root user whenever possible.

- Use the USER directive in your Dockerfile or specify the user in your docker-compose.yml file
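
For the Compose route, a minimal sketch might look like the following. The service name, image tag, and the 1000:1000 UID/GID are assumptions for illustration; the image has to be able to run as that user (file ownership, no binding to ports below 1024).

```yaml
services:
  web:
    image: myapp:1.0.0        # hypothetical image and tag
    # Run the container's main process as UID 1000 / GID 1000 instead of root.
    # These IDs are an assumption; use ones that own the app's files.
    user: "1000:1000"
```

Setting the user in Compose overrides whatever USER the image defines, which is handy for third-party images you do not build yourself.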

Example in Dockerfile:

    # Debian/Ubuntu syntax; on Alpine use: addgroup -S appgroup && adduser -S -G appgroup appuser
    RUN addgroup --system appgroup && adduser --system appuser --ingroup appgroup
    USER appuser

3. Not creating .dockerignore files

When you build an image, every file in your build context is sent to the Docker daemon. That means you can end up sending gigabytes of unnecessary log files, node_modules, or temporary files to the daemon, and a broad COPY instruction can carry them into your image. To avoid this, the .dockerignore file tells the docker build process which files to leave out.

A .dockerignore file might have contents that look like this:

    node_modules
    .git
    *.log
    tmp/

This keeps your builds lean and fast.

4. Using large, bloated base images

It is easy to inadvertently use base images that are larger or carry more features than you need. Generic images like ubuntu:latest or debian:latest are comparatively large, and images built on them will be large too. Large container images take longer to download, use more disk space, and take longer to deploy.

When possible, use smaller purpose-built base images like alpine. For Python, you can use python:3.11-alpine and for Node.js, you can use node:20-alpine.

Multi-stage builds help

Multi-stage builds also help make the final image smaller: you compile in one stage and copy only the result into a small runtime image. What does a multi-stage build look like? Note the following:

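    # Stage 1: compile with the full Go toolchain
    FROM golang:1.22 AS builder
    WORKDIR /app
    COPY . .
    # CGO_ENABLED=0 produces a static binary that runs on musl-based Alpine
    RUN CGO_ENABLED=0 go build -o myapp

    # Stage 2: copy only the binary into a small, pinned runtime image
    FROM alpine:3.20
    COPY --from=builder /app/myapp /myapp
    CMD ["/myapp"]

5. Not mounting persistent volumes for data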

Many years ago, I learned about persistent data with containers the hard way. I assumed data written inside a container would stay with it, but after rebuilding the container I realized that data was gone. If data is stored in the container’s writable layer, it is lost when the container is removed.

Using named volumes and bind mounts

There are two common ways to persist data: named volumes, which Docker manages for you, and bind mounts, which map a directory on the host into the container.

Example in Docker Compose of a named volume:

    volumes:
      db_data:

    services:
      postgres:
        image: postgres:16
        volumes:
          - db_data:/var/lib/postgresql/data

This makes sure that removing or recreating the container won’t wipe your database files in the example above. They will be stored in the db_data volume.
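
For comparison, a bind mount version of the same service could be sketched like this. The ./pgdata host directory is an assumption for illustration; with a bind mount you choose the host path and are responsible for its permissions and backups.

```yaml
services:
  postgres:
    image: postgres:16
    volumes:
      # Host path on the left (hypothetical ./pgdata next to the Compose file),
      # container path on the right.
      - ./pgdata:/var/lib/postgresql/data
```

Named volumes are usually the simpler choice for databases, while bind mounts are convenient when you want to browse or back up the files directly from the host.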

6. Hardcoding secrets in images or compose files

Another habit we tend to pick up in the home lab that isn’t good is hardcoding secrets. There isn’t anything wrong with hardcoding things if we are just testing, learning, or playing around. The challenge is remembering to handle credentials properly and get them out of clear-text files as we move to production, or to “production” in the home lab.

Secrets like API keys, SSH passwords, and tokens should never be baked into Docker images or stored directly in docker-compose.yml. If you push your image or config to a public repo, then you are exposing those secrets forever.

What to do instead

There are better ways of handling secrets with Docker containers. These methods include doing the following:

- Use environment variables with .env files that aren’t committed to source control.

- Use Docker secrets in Swarm, or Kubernetes Secrets in Kubernetes, as both orchestrators have built-in ways to handle secrets

Example .env:

    DB_PASSWORD=SuperSecret123

Example Compose reference:

    environment:
      - DB_PASSWORD=${DB_PASSWORD}

As a tip, if you are storing your docker-compose.yml file in source control (and you should be), always list your .env files in a .gitignore file so they are never stored in git when you run git add, git commit, and git push.

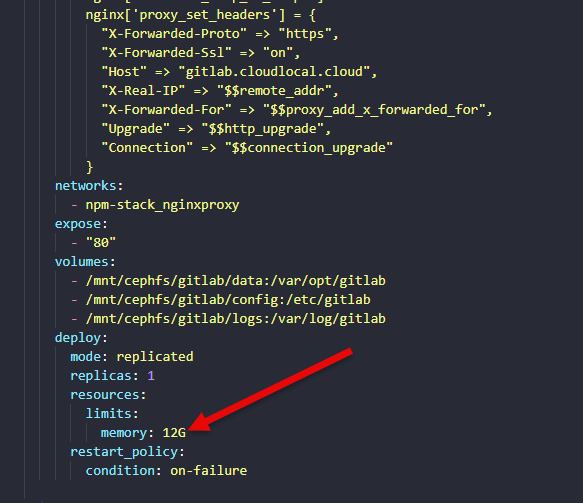
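
Compose can also mount a secret from a local file, which keeps the value out of environment variables. The sketch below assumes a hypothetical ./secrets/db_password.txt file that is excluded from git, and relies on the official postgres image reading its password from the path given in POSTGRES_PASSWORD_FILE.

```yaml
services:
  postgres:
    image: postgres:16
    environment:
      # The official postgres image reads the password from this file
      POSTGRES_PASSWORD_FILE: /run/secrets/db_password
    secrets:
      - db_password            # mounted at /run/secrets/db_password

secrets:
  db_password:
    file: ./secrets/db_password.txt   # hypothetical path; keep it out of git
```

Other images may not support a _FILE style variable, so check the image documentation before relying on this pattern.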

7. Not setting resource limits

Leaving resource settings at their defaults is another common mistake. By default, containers can use as much CPU and memory as the host allows. If one container starts to consume all the memory or CPU available on the host, every other container on that Docker host is affected.

This is the better approach

Set CPU and memory limits in your Compose or docker run commands. I have to do this with GitLab as it is a beast of a container in the Omnibus configuration. This effectively sets the limit of what the container can take from the host.

    deploy:
      resources:
        limits:
          cpus: "0.50"
          memory: 512M

Even if you’re just in a home lab, this prevents runaway containers from taking down your system and causing everything else to become slow.
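
To show where limits sit inside a full Compose file, here is a sketch with two hypothetical services. The first uses the deploy.resources block, and the second uses the service-level cpus and mem_limit attributes from the Compose specification. Service names and image tags are assumptions, and older docker-compose v1 releases may ignore the deploy block outside Swarm.

```yaml
services:
  app:
    image: myapp:1.0.0          # hypothetical image and tag
    deploy:
      resources:
        limits:
          cpus: "0.50"          # at most half of one CPU core
          memory: 512M          # hard memory cap for the container

  worker:
    image: myworker:1.0.0       # hypothetical image and tag
    cpus: 0.5                   # service-level equivalent of the CPU limit
    mem_limit: 512m             # service-level equivalent of the memory limit
```

Pick one style per service rather than mixing both, so the effective limit is obvious when you read the file.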

8. Neglecting container logging

By default, Docker uses the json-file logging driver with no log rotation, so log files can grow endlessly and fill your disk. This is dangerous with long-running containers that are chatty and produce a lot of logs.

Do this instead

- Set log rotation options:

    logging:
      driver: json-file
      options:
        max-size: "10m"
        max-file: "3"

- Or use a central logging solution like Loki, ELK, or a SaaS log collector. These store your logs centrally, so you can keep the local copies small.
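
If several services need the same rotation settings, one option is a Compose extension field combined with a YAML anchor, so the block is defined once. The x-logging name, the anchor name, and the service names below are assumptions for illustration.

```yaml
# Define the logging settings once under an extension field (x- prefix)
x-logging: &default-logging
  driver: json-file
  options:
    max-size: "10m"   # rotate when a log file reaches 10 MB
    max-file: "3"     # keep at most three rotated files

services:
  web:
    image: myapp:1.0.0          # hypothetical image and tag
    logging: *default-logging   # reuse the shared settings
  db:
    image: postgres:16
    logging: *default-logging
```

With these values each container keeps at most about 30 MB of logs on disk.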

9. Not keeping images updated

This is just part of the best practice tasks that an admin needs to think about. Just like a physical server or virtual machine, patching is important. We don’t really “patch” container images. But, we can pull newer images down to remediate known vulnerabilities or other issues.

If you are building your own container images, they need to be rebuilt regularly so they incorporate the latest packages and patches from the underlying base image. If you never rebuild and update, you’re leaving your containers open to known security issues.

Best practices for keeping your images updated

- Use tools like Watchtower, Shepherd (for Swarm), or CI/CD pipelines to check and update images

- Subscribe to security bulletins for your base images

- Use scanners like Trivy or Snyk to check your container images for vulnerabilities

10. Treating containers like VMs

There can be a tendency to run a container like a VM. In other words, you put everything and the kitchen sink in one container. However, unlike virtual machines, containers are best suited to run one primary process and be stateless when possible.

If you try to cram multiple unrelated services into one container it makes it harder to manage and troubleshoot if things go down. Also, your “blast radius” expands if you take down a single container as it will affect more services.

Do this instead

- Follow the “one process per container” principle

- If multiple services need to talk, use Docker networks to connect them and still run these in separate containers

Example network in Compose:

    networks:
      app_network:

    services:
      web:
        image: myapp
        networks:
          - app_network
      db:
        image: postgres:16
        networks:
          - app_network

Wrapping up

It is easy to fall into bad habits when we start heavily using Docker containers to run applications. It requires a shift in the way we think about running our workloads and applications. However, if we run containers as they are meant to be run, they can be exceptionally powerful. Are there other docker container mistakes that you have found in your home lab or production environments? Please share these in the comments so we can all benefit.
