DeepSeek has the world buzzing again as it gains recognition as a powerful, open-source large language model (LLM) that challenges models from the major tech players. Its newest update, DeepSeek-R1-0528, significantly improves performance and adds new features. It continues to be a serious contender against proprietary models like OpenAI’s GPT-4o and Gemini 2.5 Pro. Let’s take a look at what’s new and what this means for the world of AI in general.

What Is DeepSeek?

In case you haven’t heard of DeepSeek, which is unlikely, it is an AI research and development company in China that has taken an open-source approach to LLM development. With the first release of DeepSeek-R1, it set the tech world on fire and raised eyebrows with impressive performance relative to the cost of producing the model.

They have now released a minor upgrade to their model, DeepSeek-R1-0528. Because it is released under an open-source license, developers and businesses can use it, modify it, and even build their own paid offerings on it with very few restrictions.

What’s New in DeepSeek-R1-0528?

Despite being a minor release, DeepSeek-R1-0528 pushes the boundaries of what the model can do even further. Note the following new features and enhancements:

- Better Reasoning: Logic and problem-solving performance have greatly improved, with accuracy on the AIME 2025 test rising from 70% to 87.5%.

- Improved Coding Abilities: On the LiveCodeBench dataset, DeepSeek’s coding performance jumped from 63.5% to 73.3%. This means it can now compete more effectively with tools like GitHub Copilot, Gemini Code Assist, and GPT-4 Turbo.

- Distilled Qwen-Based Models: DeepSeek-R1-0528 is available in multiple sizes, including a distilled version called DeepSeek-R1-0528-Qwen3-8B. This is based on Alibaba’s Qwen3 architecture.

- Expanded Functionality: The new model supports JSON mode and function calling. These are key new features for developers who want to integrate DeepSeek into applications.
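To make the JSON mode and function calling features more concrete, here is a minimal sketch of what the request bodies might look like. It assumes DeepSeek's hosted API follows the OpenAI-style chat completions format (a `response_format` field for JSON mode and a `tools` list for function calling) and that the reasoning model is exposed as `deepseek-reasoner`. The `get_weather` tool and all helper names are hypothetical, so check the official API docs before relying on any field.

```python
import json


def build_json_mode_request(question: str, model: str = "deepseek-reasoner") -> dict:
    """Build a chat request body that asks the model to reply with a JSON object.

    Assumption: the API accepts the OpenAI-style `response_format` field.
    """
    return {
        "model": model,
        "messages": [
            # JSON mode generally works best when the prompt itself mentions JSON.
            {"role": "system", "content": "Reply with a single JSON object."},
            {"role": "user", "content": question},
        ],
        "response_format": {"type": "json_object"},
    }


def build_function_call_request(question: str, model: str = "deepseek-reasoner") -> dict:
    """Build a chat request body that offers the model one callable tool.

    `get_weather` is a hypothetical tool used only for illustration.
    """
    weather_tool = {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "tools": [weather_tool],
    }


def parse_json_reply(content: str) -> dict:
    """Parse the model's reply text, failing loudly if it is not a JSON object."""
    data = json.loads(content)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data
```

The builders only produce plain dictionaries, so they can be serialized with `json.dumps` and sent with whatever HTTP client or SDK your application already uses.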

You can now try out the model easily through Ollama at this link: DeepSeek-R1 on Ollama.

Model training is low cost but produces high value

What has really set the world on fire about DeepSeek is not only what the model can do, although it is a really great model. It is that, according to reports, DeepSeek trained its R1 models using only around 5 million USD in compute resources, far less than is typically required to train models of this capability.

This feat was accomplished by optimizing training on smaller clusters, careful dataset curation, and using community-developed architectures like Qwen3. This produced distilled models that have high accuracy and dramatically reduce memory and compute overhead.

It also showed the tech community that top performing AI contenders don’t necessarily have to be produced by billion-dollar tech companies.

Real-world benchmarks

Benchmarks show just how far DeepSeek has come. On several academic and real-world evaluation tasks, DeepSeek-R1-0528 rivals the capabilities of top-tier models while remaining completely open-source.

For example:

- AIME 2025 (Math Reasoning): 87.5% accuracy (up from 70%)

- LiveCodeBench (Code Generation): 73.3% accuracy (up from 63.5%)

- GSM8K (Grade School Math): 85.3% accuracy

- MMLU (Multitask Language Understanding): 79.9%
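To put the two before-and-after figures in perspective, the short sketch below works out the gain in percentage points and the relative improvement. It uses only the AIME 2025 and LiveCodeBench scores quoted above; the `gains` helper is just an illustrative name.

```python
# Before/after scores quoted in the list above (percent accuracy).
SCORES = {
    "AIME 2025": (70.0, 87.5),
    "LiveCodeBench": (63.5, 73.3),
}


def gains(before: float, after: float) -> tuple:
    """Return (percentage-point gain, relative gain in percent), rounded to 1 decimal."""
    points = after - before
    relative = points / before * 100
    return round(points, 1), round(relative, 1)


for name, (before, after) in SCORES.items():
    points, relative = gains(before, after)
    print(f"{name}: +{points} points ({relative}% relative improvement)")
```

In other words, the AIME 2025 jump is 17.5 points (a 25% relative improvement), and the LiveCodeBench jump is 9.8 points (about 15.4% relative).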

These numbers put DeepSeek right on the heels of leading proprietary models. In some tasks, it even matches or exceeds GPT-4’s performance when given longer context windows or task-specific prompts.

Developers benefit

One of the biggest barriers to entry in AI right now is access. While commercial models have impressive capabilities, their use is often locked behind APIs with usage limits, paywalls, and license restrictions.

DeepSeek takes a different route. Their models are:

- Free to use

- Open-source under the MIT License

- Compatible with Docker, Hugging Face, and Ollama

- Supported across PowerShell and Linux CLI for easy deployment

This approach gives startups, indie developers, educators, and researchers access to high-quality LLMs without dealing with vendor lock-in or steep costs.

You can easily test this with Ollama

If you have an Ollama instance up and running, it is easy to self-host and test the new DeepSeek instance. Here’s how to get started:

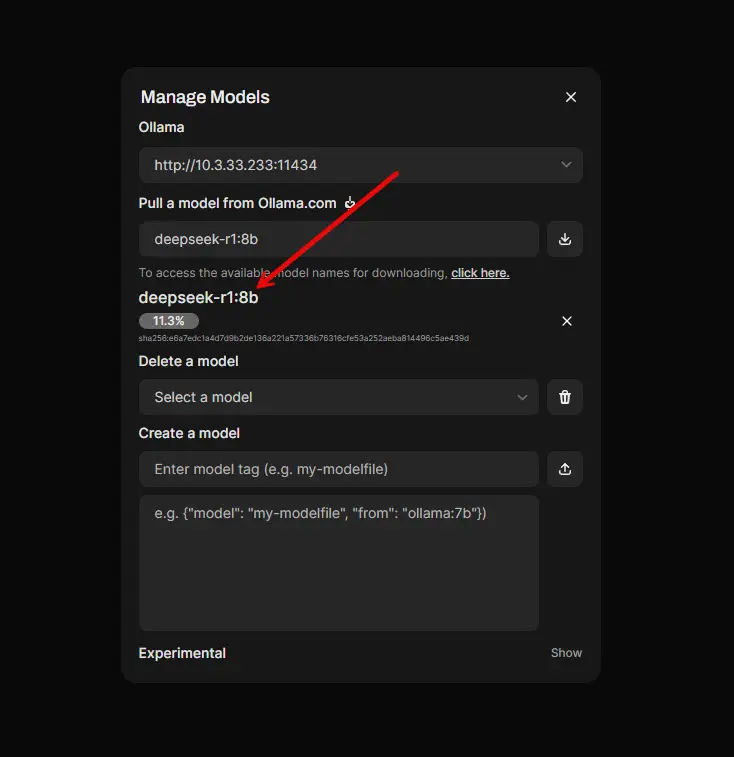

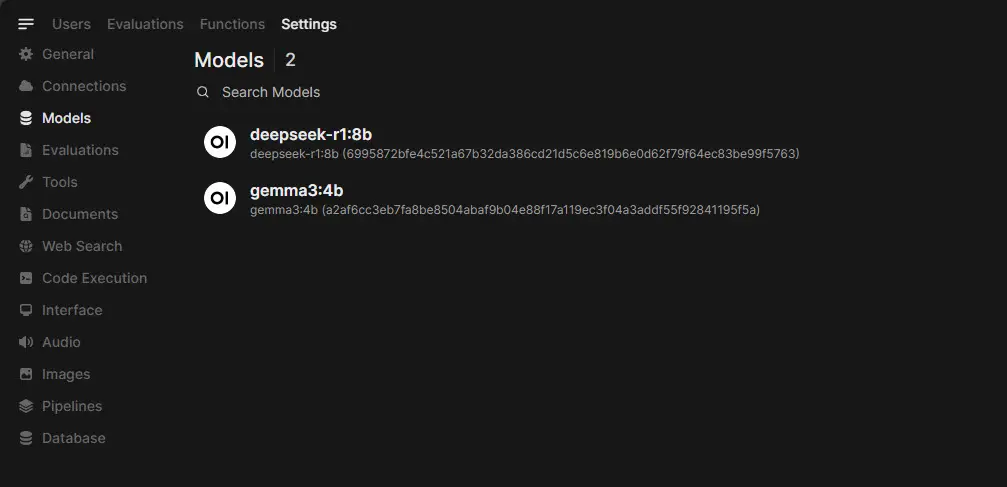

ollama run deepseek-r1:8bBelow, is after pulling down the latest DeepSeek model to Ollama via OpenWebUI.
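If you would rather call the model from a script than from the CLI or OpenWebUI, here is a minimal sketch that talks to Ollama's local REST API. It assumes Ollama is listening on its default address (`http://localhost:11434`) and that the `deepseek-r1:8b` model has already been pulled. The helper names are illustrative, not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(prompt: str, model: str = "deepseek-r1:8b") -> dict:
    """Build the JSON body for a single, non-streaming generation request."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask_ollama(prompt: str, url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    body = json.dumps(build_generate_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With the server running, something like `print(ask_ollama("Explain what a distilled model is."))` should print the model's answer. Responses can take a while on modest hardware, which is why the timeout is generous.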

If you are interested in the setup needed in Proxmox to self-host your own LLMs with Ollama and OpenWebUI, you can check out my blog post here: Run Ollama with NVIDIA GPU in Proxmox VMs and LXC containers. Also, check out my YouTube video for a video walkthrough of the steps.

The model is definitely a challenge to Western AI companies

The global AI market is definitely accelerating. For many years now, OpenAI, Anthropic, Google, and Meta have dominated the AI race. However, DeepSeek is part of a new wave of open-source AI players, alongside Mistral, Qwen, and Hugging Face, that is helping to challenge this dominance.

This will bring many benefits to the AI community and to the wider community in general:

- More innovation at the edge

- Better transparency

- Lower barriers to experimentation

- Competition that makes AI cost-effective

Wrapping up

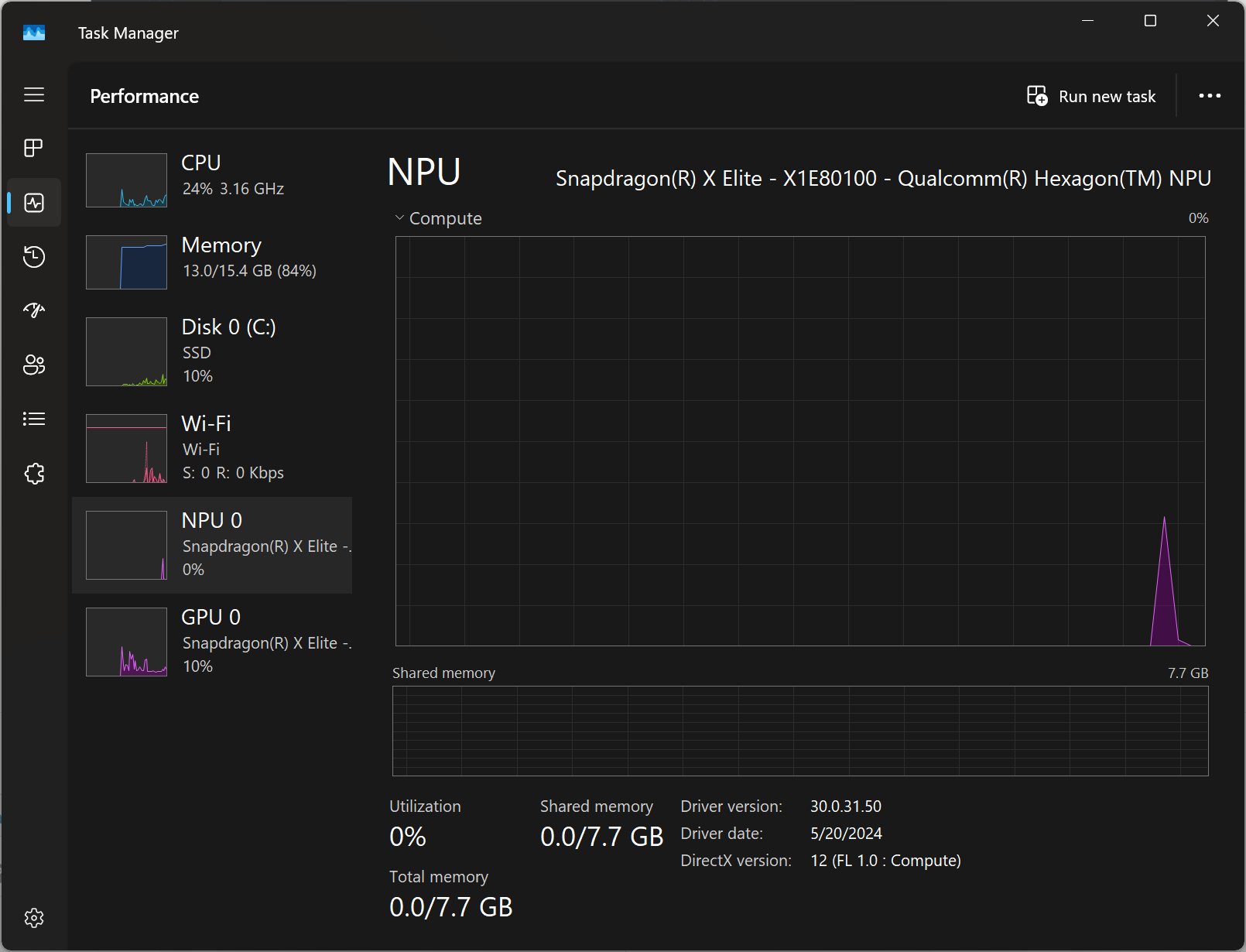

Each new model version released by the major players continues to up the ante of what AI can do. However, DeepSeek’s latest release of R1 shows that it isn’t only the major tech companies that have a part to play in the AI race. Other companies and open-source solutions in general can be highly effective. What’s more, I love the fact that these open-source models are easy to download and self-host on our own home lab hardware with reasonable specs. We don’t need a datacenter full of GPUs and other compute to use AI effectively.


A few points…

DeepSeek’s development cost of five million USD covered only the main training run. The actual total investment is unknown but is assumed to be in the hundreds of millions.

The distilled versions based on the Qwen architecture will not give the same performance levels as the main DeepSeek models – although the Qwen models are pretty good on their own.

There are two main DeepSeek models: chat and reasoner. The chat model does not reason.

Do note that if you run DeepSeek via API access from a front-end like MSTY, it is NOT free:

https://api-docs.deepseek.com/quick_start/pricing

It is, however, very cheap compared to other frontier models. Also note that the latest version of the reasoner model is twice the price of the previous version (but still cheap).